paulinventome

Everything posted by paulinventome


Sigma Fp review and interview / Cinema DNG RAW

paulinventome replied to Andrew - EOSHD's topic in Cameras

It's not something i've noticed so far but will give it a go tomorrow. What frame rate and bit depth? It could be related to that rather than the camera. How about shutter speed? I thought i could see some pulsing from the lights but you say you see the same in daylight? And which ISO is causing the biggest issue for you? cheers Paul

Sigma Fp review and interview / Cinema DNG RAW

paulinventome replied to Andrew - EOSHD's topic in Cameras

Do you agree that the fp was not in focus (like camera 9)?

If we make the assumption that the fp is using the IMX410 sensor, as is the A7III, the Nikon and perhaps the Panasonic, then we can see that there is no way that sensor can deliver more than 12 bits in motion, although there is a crop mode at 14 bits. If you look at the specs for all these other cameras you can see that 12 bits in motion is pretty much a universal given - Canon say the same for their sensors too. So the container from the sensor to the camera is a fixed 12 bit bucket.

If we also assume that all sensors are linear (physics and hardware wise this is the case) then the native signal off the sensor is going to be linear for all of these. Now: either the sensor response in some cases is non-linear (it's possible but i don't think it is the case) or each of these companies is doing something to get these claimed stops beyond 12. In the case of this Sony sensor, is it logarithmically compressing 14 bits down to 12? I think not. There is a bit of non-linearity in the results but i think that's a function of the sensor itself. In my testing the difference between stills and cine shows that. But i think that goes for any of these sensors.

The latest BMD don't output DNGs anymore? The old ones compressed 12 bit linear into a 10 bit container with a 1D LUT (great approach), but the source was no more than 12 bit. What other cameras currently output DNG? Because it is very easy to look inside those at the RAW data.

IMHO some of these companies are using techniques like highlight reconstruction to deliver > 12 stops. If you understand that each of the RGB filters has a different sensitivity then you can see how you can take advantage of that and extend the overall range beyond that 12 bit fixed linear container. With the fp, and being RAW, it is up to you to do that reconstruction work. With other systems that are outputting their own RAW, that work can happen automagically internally. The reconstruction work can be as simple as checking a box in Resolve, or as complicated as doing the math yourself. Applications like Lightroom do it whether you want it to or not - it's fundamentally part of the Lightroom debayer for stills as well. So the irony is a lot of skies done through Lightroom are reconstructed highlights... (ever noticed that the blues can be somewhat off?)

The only time this doesn't count is when you have a cinema camera style sensor which is designed for greater bandwidth inside the sensor itself; 14, 16+ bits are common. So IMHO the fp has a similar range to the other (prosumer) cameras but the data you get off the sensor is 'RAWer' and it's up to you to make the best of it. This is my assumption. I have no insight into what the manufacturers are doing.

I know i sound like a fanboy, i'm not really, my fp is a stop gap until Komodo ships. But i do think it's a wonderful little camera! I like what Sigma are doing and they don't have any cinema camera lines to protect. I think they're really well positioned to do something quite special in this market segment and i'd hate for anyone thinking about getting an fp to not do it because of these kinds of conversations!

cheers Paul
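To make the "12 bit linear into a 10 bit container with a 1D LUT" idea concrete, here is a minimal sketch. The log-like curve and the `CURVE_STRENGTH` constant are assumptions made up for illustration; this is not the curve any Blackmagic camera actually uses. It only shows the principle: a lookup table that spends most of the 10 bit codes on the shadows, plus the inverse table to get back to linear.

```python
import math

LINEAR_MAX = 4095      # largest 12-bit linear code value
ENCODED_MAX = 1023     # largest 10-bit code value
CURVE_STRENGTH = 64.0  # hypothetical: higher values spend more codes on shadows


def build_encode_lut():
    """12-bit linear -> 10-bit code, one table entry per linear value."""
    scale = math.log1p(CURVE_STRENGTH)
    return [
        round(math.log1p(CURVE_STRENGTH * v / LINEAR_MAX) / scale * ENCODED_MAX)
        for v in range(LINEAR_MAX + 1)
    ]


def build_decode_lut():
    """10-bit code -> 12-bit linear, using the inverse of the same curve."""
    scale = math.log1p(CURVE_STRENGTH)
    return [
        round(math.expm1(c / ENCODED_MAX * scale) / CURVE_STRENGTH * LINEAR_MAX)
        for c in range(ENCODED_MAX + 1)
    ]


ENCODE = build_encode_lut()
DECODE = build_decode_lut()

# Shadows survive the round trip exactly; highlights get coarser steps.
for v in (1, 16, 256, 4095):
    print(v, "->", ENCODE[v], "->", DECODE[ENCODE[v]])
```

With this particular curve the darkest values come back unchanged while the brightest values share codes, which is the trade a companding LUT makes. Either way the source is still only 12 bit, so the table adds no range.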

Sigma Fp review and interview / Cinema DNG RAW

paulinventome replied to Andrew - EOSHD's topic in Cameras

I dunno, i think it unlikely - i doubt any more processing was done than the barest min. It would be fairer that way. Just guessing tho! I suspect the nights weren't super high ISO, could have been pretty bright there. cheers Paul

Sigma Fp review and interview / Cinema DNG RAW

paulinventome replied to Andrew - EOSHD's topic in Cameras

Okay, i downloaded the 'source' file and looked through it. IMHO the sigma stuff is a touch out of focus. If you compare to the under test you'll see the chart is sharper there. In the normal studio test the focus feels forward (his watch is in better focus). Even at f2.8 on full frame the DOF is pretty shallow. If you're not running the camera out to an external monitor, critical focus is difficult. Number 9 is well out of focus on the chart as well, and the suggestion is that's the 8K Red. I'm not saying for a moment that the fp is in the same league as the others (my Red is 10 times the cost) or that the scaling couldn't be better. But it isn't that bad. I've shot full frame UHD and can be obsessive about things and i have no issue with the footage in real life. Yes, the scaling could be better and hopefully it will be. But if you want pixel for pixel sharpness then use DC mode. You have the choice. I'd rather people petition sigma to push this forward - more crop choices, better scaling and so on. The sensor is there, the camera is capable but i feel sigma need to know this is what we want... Cheers Paul

Sigma Fp review and interview / Cinema DNG RAW

paulinventome replied to Andrew - EOSHD's topic in Cameras

Pretty sure it's 4. But got to argue a bit here. What did you view, the vimeo or the download? I'm just downloading the source now to have a look, because viewing through compression at typical HD sizes i doubt you'd see a difference. You may have been watching the UHD 1:1 version though. Of course don't forget, if you don't want any scaling then DC mode gives you 1:1 sharpness for an APS-C effect. Try it. The log profile comment is out of place - there's no native log with any of these sensors. So i don't really understand what you mean here? The fp has a more limited dynamic range than the others; like most prosumer stuff it's capped at 12 bit off the sensor (canon, lumix, nikon all the same), whereas the bigger cameras are different beasts entirely. What we're seeing is that as the baseline improves across the board they're all similarly capable in most scenarios. What takes the cinema cameras beyond is the more difficult high range and low light scenarios. 9 is probably 8K Red Helium, and that kind of overexposure is what i'm seeing as well. It's at least two stops better than the sigma. But the comment about moire is odd because really i have never seen moire with the Red. And blurriness. Well that's odd. I may ask Evin to see if i can get some DNG samples from the sigma. I don't know how they workflowed it... cheers Paul

Sigma Fp review and interview / Cinema DNG RAW

paulinventome replied to Andrew - EOSHD's topic in Cameras

Evin has done a big comparison (see http://www.reduser.net/forum/showthread.php?180359-Full-Frame-Cinema-Shootout-2020 if i'm allowed to post this?) He included the fp for curiosity, old firmware though. The results are nice, bearing in mind you're competing with Alexa Mini LF et al. It shows that hand held the sigma is too light, but IMHO it does pretty well bearing in mind it's a tiny fraction of the cost. I'm not 100% convinced post was done the best it can be, but there you go. I was going to check out the dynamic range as i've just about sorted my chip chart out. I reckon it's 12 stops. The sensor output is pretty linear. I know sigma are working on a firmware update so i hope it's not too far away - they promise they *are* listening! cheers Paul

Sigma Fp review and interview / Cinema DNG RAW

paulinventome replied to Andrew - EOSHD's topic in Cameras

I've just found photoshop on my laptop so used that and yes, the UHD DC crop is a pixel for pixel crop from the centre of the sensor. You can A/B stills and cine and it's identical. So in this mode with no OLPF it would be interesting to see how much moire there is in motion. I could guess that perhaps the DC HD mode would be pretty good too. The full frame HD mode not so much, but there's an even scaling for HD. cheers Paul
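A quick way to reason about a pixel for pixel centre crop is to work out the window and the resulting crop factor. This is a minimal sketch that assumes a 6000 x 4000 photosite sensor (my assumption for the full stills frame, not a figure stated in the post); the function names are made up for illustration.

```python
def centre_crop(sensor_w, sensor_h, crop_w, crop_h):
    """Return (x0, y0, x1, y1) of a crop centred on the sensor, in photosites."""
    if crop_w > sensor_w or crop_h > sensor_h:
        raise ValueError("crop is larger than the sensor")
    x0 = (sensor_w - crop_w) // 2
    y0 = (sensor_h - crop_h) // 2
    # Snap the origin to an even photosite so the RGGB pattern stays in phase.
    x0 -= x0 % 2
    y0 -= y0 % 2
    return x0, y0, x0 + crop_w, y0 + crop_h


def crop_factor(sensor_w, crop_w):
    """Extra crop relative to the full sensor width."""
    return sensor_w / crop_w


SENSOR_W, SENSOR_H = 6000, 4000   # assumed full-frame photosite count
UHD_W, UHD_H = 3840, 2160

window = centre_crop(SENSOR_W, SENSOR_H, UHD_W, UHD_H)
factor = crop_factor(SENSOR_W, UHD_W)
print(window, factor)
```

Under that assumption the UHD window uses roughly the middle two thirds of the sensor width, a crop factor of about 1.56, which is in the APS-C ballpark.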

Sigma Fp review and interview / Cinema DNG RAW

paulinventome replied to Andrew - EOSHD's topic in Cameras

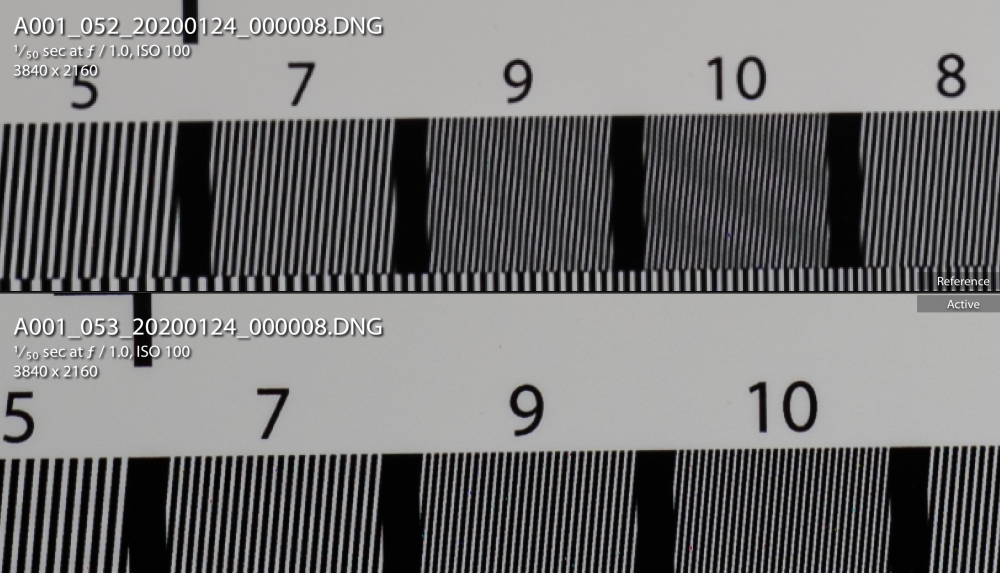

Second attempt as i had uploaded the wrong images before. So long story short, i was testing resolution of 6K vs FF UHD to check the level of aliasing. The only chart i had was an old one i'd printed off myself many moons ago but it should be enough to create issues (i really need to find a better way...) I can clearly see the difference between the 6K still and the scaled UHD, but what i found interesting is that if you switch to DC mode i am pretty sure you're just getting a 1:1 crop of the sensor in UHD - it looks similar to the 6K. Either way the DC UHD RAW mode is much clearer than the FF. I need to do some better tests, but try it yourself and see what you think. cheers Paul

So top is full frame UHD and lower is DC UHD, see how much clearer the lines are.

Sigma Fp review and interview / Cinema DNG RAW

paulinventome replied to Andrew - EOSHD's topic in Cameras

Turning the screen brightness from Auto appears to have made M mode slightly more reliable as @BTM_Pix suggests. So fixed shutter speed and fixed ISO and ramping through manual apertures appears to work better. It still animates between brightness levels, and i totally don't understand why. What i do notice though is that in low exposures in stills mode the brightness flickers randomly. Does everyone see this? I have reported that as well. cheers Paul

Sigma Fp review and interview / Cinema DNG RAW

paulinventome replied to Andrew - EOSHD's topic in Cameras

Yeah, one thing i've noticed is that if you have it set to X iso and Y shutter and then dial the physical aperture on the lens, the preview lightens then slowly darkens. You can see the waveform doing it - like the screen tries to brighten then realises it's M mode and animates to the new display. It's odd because i cannot understand why anyone would code it like that. With an animation as well.

Odd, i've not noticed that and i don't think the LCD is high enough res to show any noise. Is this all DNG or JPEG? cheers Paul

Sigma Fp review and interview / Cinema DNG RAW

paulinventome replied to Andrew - EOSHD's topic in Cameras

But if you compress the transport stream - like gzip or similar - that's not RAW compression, right? The RAW is still uncompressed. The Red patent is all about compressing the RAW before saving it out - i doubt you can patent compressing a transport stream - after all http2 is compressed like this too... cheers Paul
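The distinction being drawn is between a codec that changes the raw data and a general-purpose lossless wrapper around the byte stream. A minimal sketch with Python's standard `zlib` module shows the second case: whatever goes in comes out bit-identical. The fake frame below is made-up test data, and nothing here says anything about what a patent does or does not cover.

```python
import zlib


def pack_for_transport(raw_bytes: bytes) -> bytes:
    """Wrap a block of raw sensor bytes in a lossless deflate stream."""
    return zlib.compress(raw_bytes, 6)


def unpack_from_transport(packed: bytes) -> bytes:
    """Recover the original bytes exactly."""
    return zlib.decompress(packed)


# Made-up stand-in for a frame: a repeating ramp, 16 KiB long.
fake_frame = bytes(range(256)) * 64

packed = pack_for_transport(fake_frame)
restored = unpack_from_transport(packed)

print(len(fake_frame), "bytes in,", len(packed), "bytes on the wire")
print("bit-identical after unpacking:", restored == fake_frame)
```

Real sensor noise compresses far less well than this ramp, so the saving on actual footage would be much smaller; the point is only that the payload itself is untouched.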

Sigma Fp review and interview / Cinema DNG RAW

paulinventome replied to Andrew - EOSHD's topic in Cameras

Yes, you're right of course. I think on a practical level in those cases it's easier to take the hit for not being 100% physically accurate, as a scale is usually pre processing before doing linear comp work (or after). It's nice to run energy conserving convolution filters of lens aberrations over CG footage, works really well, better than blurs.

Yes, these are the kinds of thought experiments i'm trying to wrap my head around. In the case of the edge case (ha pun intended!) even with the full RGGB bayer info would that situation be much different - if the missing pixel between the R and the R is a green - the missing red would still be interpolated. You can of course argue that the luma of the G in that position would be the basis for that interpolation and therefore more accurate. If you scaled the channels down first then output that RGR edge, it would be softened. In your example that applies if you scale up, you are adding data. But scaling down you are removing and averaging. So the thought experiment is what is the best scale to do here - is it nearest neighbour or some kind of non sharpening scale, as i can see edge issues with sharpening. I must assume sigma has tested this if it is in fact what they're doing.

I must also go back to the basic question: i wonder if there's any way just to get 6K out of this thing. Do we know which specific USB version it's running and what the data rate would be? Also isn't the Red patent to do with lossy compression? If you just do run length encoding on the stream does that not work? I think sigma should open source the OS.

So i've seen a case with the stills doing odd things with exposure, when switching between cine and stills while i am trying to match for testing. But after a reset it stopped. So i reported a bug but wasn't able to give steps to reproduce. I got caught by having the camera in S mode with a manual lens yesterday - what's annoying is the screen is compensating. So i assume that the camera thinks there is a lens where it can adjust aperture, thinks it's stopped the lens down for example, and compensates on the LCD, but the actual shot is exposed totally wrong. So the M mode is essential. But it makes me wonder *why* the screen isn't showing an accurate preview of the exposure (and i had the setting on). So there are some bugs.

I've not been massively affected by a shutter lag though. Does it help if you turn off screen black out and also turn on the low previews mode? cheers Paul
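On the data rate question, the uncompressed arithmetic is easy to sketch. The numbers below are assumptions for illustration only: a 6000 x 4000 full-sensor readout, 12 bits per photosite packed with no padding, and 24 fps. The real container overhead and the camera's actual interface speed are not known here.

```python
def raw_data_rate_mb_per_s(width, height, bits_per_photosite, fps):
    """Uncompressed bayer data rate in megabytes (10^6 bytes) per second."""
    bits_per_frame = width * height * bits_per_photosite
    return bits_per_frame * fps / 8 / 1_000_000


# Assumed figures, see the note above.
full_sensor = raw_data_rate_mb_per_s(6000, 4000, 12, 24)
uhd = raw_data_rate_mb_per_s(3840, 2160, 12, 24)

print(f"full sensor: {full_sensor:.1f} MB/s")
print(f"UHD:         {uhd:.1f} MB/s")
print(f"ratio:       {full_sensor / uhd:.2f}x")
```

Under those assumptions the full readout needs close to three times the bandwidth of UHD, so whether it is feasible depends on the sustained speed of the USB link and the SSD rather than on the sensor.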

Sigma Fp review and interview / Cinema DNG RAW

paulinventome replied to Andrew - EOSHD's topic in Cameras

The only part of that i would have issues doing is writing out a DNG, i can do the rest. Unless i can make my own debayer in Nuke, but ideally we'd want to match like for like. I suspect @cpc might be able to write out DNGs though... cheers Paul

Sigma Fp review and interview / Cinema DNG RAW

paulinventome replied to Andrew - EOSHD's topic in Cameras

Beg to differ on this one. If you have a high contrast edge and use various scaling algorithms (Lanczos, Sinc, etc.), often the scale results in negative lobes (like a curve overshoot). It's quite easy to see this. Happens in compositing all the time. It's exacerbated by working Linear as we always do (i suspect because of the dynamic range). So it's a well established trick to convert to log, scale, and convert back to linear. I suspect some apps do this automatically (maybe resolve does, i don't know). See https://www.sciencedirect.com/topics/computer-science/ringing-artifact - it's a bit geeky but there's a diagram of a square pulse and lobes that extend beyond it. Sony had this issue in camera on the FS700, very bright edges would give black pixels as the scale went negative. My gut tells me in the case of a bayer sensor it could be even worse - partly because of the negative signal but also the tendency to have stronger edges because of missing bits of image? Kindest Paul

Cool. But are they not just taking each RGGB into a separate greyscale image, so you have 4 grey images roughly 3000x1250. Scale those to 1920x1080. Then create bayer data for 3840x2160 by just alternating pixels to build up the RGGB again? So we are maybe seeing artefacts in the scale process based on the algorithm used. It might even be nearest neighbour, which is what i think you're demonstrating - when you take an existing pixel and alternate to make up the UHD image. But if that scale is done properly, is it not going to improve the image, OR does scaling those 4 layers, which are already missing pixels in-between, actually make it worse? The ideal is to take each RGGB layer and interpolate the missing pixels, so that instead of working from 3K (one channel) you interpolate up to the full 6K, and *then* scale it down to 1920, and then the resulting bayer image might actually look really good....? cheers Paul
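The negative lobe effect and the log, scale, back to linear trick can be demonstrated in one dimension. This is a minimal sketch using a textbook Lanczos-3 kernel on a made-up hard edge; the edge values and the plain natural log are assumptions for illustration, not any particular camera's or application's implementation.

```python
import math


def lanczos(x, a=3):
    """Lanczos kernel: sinc(x) * sinc(x / a) inside |x| < a, zero outside."""
    if x == 0:
        return 1.0
    if abs(x) >= a:
        return 0.0
    px = math.pi * x
    return a * math.sin(px) * math.sin(px / a) / (px * px)


def resample(signal, scale, a=3):
    """Downscale a 1D signal by `scale` (> 1), clamping at the borders."""
    out = []
    for i in range(int(len(signal) / scale)):
        centre = (i + 0.5) * scale - 0.5
        lo = math.floor(centre - a * scale) + 1
        hi = math.floor(centre + a * scale)
        total = weight_sum = 0.0
        for j in range(lo, hi + 1):
            w = lanczos((j - centre) / scale, a)
            total += w * signal[min(max(j, 0), len(signal) - 1)]
            weight_sum += w
        out.append(total / weight_sum)
    return out


# A hard edge in scene-linear values: deep shadow next to a bright highlight.
edge = [0.01] * 32 + [16.0] * 32

linear_out = resample(edge, 2.0)
log_out = [math.exp(v) for v in resample([math.log(v) for v in edge], 2.0)]

print("min when scaled in linear:", min(linear_out))
print("min when scaled in log:   ", min(log_out))
```

Scaled in linear, the sample just on the dark side of the edge undershoots below zero, which is the black pixel problem. Scaled in log the ringing is still there, but after converting back every value is positive because the exponential cannot go negative.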

Sigma Fp review and interview / Cinema DNG RAW

paulinventome replied to Andrew - EOSHD's topic in Cameras

Some interesting experiments @rawshooter and @cpc. Sigma has said that the 6k image is scaled to UHD. Not binned or skipped. The reasoning is that by scaling, the lack of OLPF can be mitigated a bit. I think what we might be seeing and saying is that the scaling algorithm used could be improved. There are all sorts of issues with sharpening in scaling - you get negative lobes around edges which can result in black (NaN) pixels (Sony had some issues with this). I'm wondering if the black pixels in the resultant image were just clipped, but actually in these images the raw data is doing odd things. The worst colourspace to scale in is Linear, which is the sensor's native space. In Nuke we'd often change an image to log, scale and then go back to linear. Naively i am assuming each of the RGGB layers is scaled, then written out in bayer format - does that sound likely? Does that work okay with bayer reconstruction? One item on my wish list for sigma is more choices over the image size - 2:1 at the same data rates would be useful. If they are taking the whole 6K image (and no reason to say they're not) then i wonder whether the camera can actually dump out the data fast enough to SSD. I believe sigma are obviously keen to keep the SDXC card as a main source but just getting *all* the raw data would be useful. Could you elaborate on how you think the scale is done? cheers Paul
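The naive pipeline guessed at here (split the mosaic into its four RGGB planes, scale each plane, interleave them back into a smaller mosaic) can be sketched as follows. This is purely an illustration of that guess, not Sigma's actual processing, and the bilinear resize is a stand-in chosen for brevity, not a recommendation.

```python
def split_bayer(mosaic):
    """Split an RGGB mosaic (list of rows) into four quarter-size planes."""
    return {
        "R":  [row[0::2] for row in mosaic[0::2]],
        "G1": [row[1::2] for row in mosaic[0::2]],
        "G2": [row[0::2] for row in mosaic[1::2]],
        "B":  [row[1::2] for row in mosaic[1::2]],
    }


def merge_bayer(planes):
    """Interleave four equal-size planes back into one RGGB mosaic."""
    h, w = len(planes["R"]), len(planes["R"][0])
    mosaic = [[0.0] * (2 * w) for _ in range(2 * h)]
    for y in range(h):
        for x in range(w):
            mosaic[2 * y][2 * x] = planes["R"][y][x]
            mosaic[2 * y][2 * x + 1] = planes["G1"][y][x]
            mosaic[2 * y + 1][2 * x] = planes["G2"][y][x]
            mosaic[2 * y + 1][2 * x + 1] = planes["B"][y][x]
    return mosaic


def resize_bilinear(plane, out_w, out_h):
    """Resize one plane with bilinear sampling (stand-in for a real filter)."""
    in_h, in_w = len(plane), len(plane[0])
    out = []
    for y in range(out_h):
        fy = min(max((y + 0.5) * in_h / out_h - 0.5, 0.0), in_h - 1.0)
        y0 = int(fy)
        y1 = min(y0 + 1, in_h - 1)
        ty = fy - y0
        row = []
        for x in range(out_w):
            fx = min(max((x + 0.5) * in_w / out_w - 0.5, 0.0), in_w - 1.0)
            x0 = int(fx)
            x1 = min(x0 + 1, in_w - 1)
            tx = fx - x0
            top = plane[y0][x0] * (1 - tx) + plane[y0][x1] * tx
            bottom = plane[y1][x0] * (1 - tx) + plane[y1][x1] * tx
            row.append(top * (1 - ty) + bottom * ty)
        out.append(row)
    return out


def rescale_mosaic(mosaic, out_w, out_h):
    """Scale every colour plane separately, then rebuild the mosaic."""
    planes = split_bayer(mosaic)
    scaled = {name: resize_bilinear(p, out_w // 2, out_h // 2)
              for name, p in planes.items()}
    return merge_bayer(scaled)


# Tiny made-up 12 x 8 mosaic: R = 1, both greens = 2, B = 3.
demo = [[1.0 if (x % 2 == 0 and y % 2 == 0) else
         3.0 if (x % 2 == 1 and y % 2 == 1) else 2.0
         for x in range(12)] for y in range(8)]
small = rescale_mosaic(demo, 8, 4)
print(len(small[0]), "x", len(small), small[0][:4], small[1][:4])
```

One thing the sketch makes visible is that the four planes sit at slightly different positions on the sensor, and scaling them independently ignores that offset, which is one plausible source of edge artefacts in this kind of scheme.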

Sigma Fp review and interview / Cinema DNG RAW

paulinventome replied to Andrew - EOSHD's topic in Cameras

I think the Rs are good for photography because they tend to show two different renders, wide open (glowing) and stopped down, especially the Mandler designed ones (the lux's you quote). I found the 90 summicron very poor with flare, almost unusable. The 24 is not a Leica design and it shows. And the 28mm f2.8 Mark II is excellent. The last version of the 19 is pretty good too, much better than the contax 18.

Yes, i have focus gears for the M lenses. Studio AFS make some aluminium gears that you can twist on, like the Zeiss gears, but they do them with scalloped inserts that will hold around the smaller barrels of the Ms. The Zeiss versions don't go small enough. They're really good and easy to twist on and off when needed. The issue with the Ms is cost. I'm heading for a 90 APO and the latest 28 summicron. But i may have to sell the first born. But actually you get what you pay for (up to a certain point).

I don't find Resolve's debayer very good. I am trying to get some fixes to Nuke that will allow these DNGs to go through there. I don't see there being any reason why we should see coloured pixels if the algorithm understands what the content is, right? I mean it's all recreated - so why false colours? In this case i believe these artefacts look like the AHD(?) algorithm. cheers Paul

Sigma Fp review and interview / Cinema DNG RAW

paulinventome replied to Andrew - EOSHD's topic in Cameras

I've had a bunch of contax in the past. I think we all go through phases! I had that 28-70 Contax and it's a nice lens. I don't believe it was designed by Zeiss, think it was a Minolta job. But ergonomically it was a lot better than the push pull focus ones. The problem with those is that the zoom often creeps if you're not shooting horizontal! I was using it on APS-C so full frame would actually be better for it. IMHO after going through Zuiko, Leica R, Canon FD and russian Lomos, the contax are the best of the bunch in terms of older lenses. (Yes, they are better than Leica R with the exception of one or two very special R lenses). But what i did notice is that variation between samples was quite large, as perhaps can be expected from used lenses. I actually had 4 versions of the Contax 50mm f1.4 at one point and one of them was stunning and the others were okay. (I still have it actually)

I also have a lot of voigtlanders which are a fantastic match for the sigma fp. Even so i had some issues with some of those and finally ended up with my first proper Leica M. I wasn't sure how much of the talk about M lenses is just raving and confirmation bias because it's a Leica (i really didn't gel with the R lenses). But actually the 50mm summicron is a stunning lens. And now finally i'm getting round to selling as much as i can and just focusing on a few of the M lenses. Perhaps all paths lead there!

I think this is known about? I've reported it and also flickering in the EVF at times, especially in low light. However i've not noticed any in footage (yet). cheers Paul

Okay, i had a look at the video now. As we know, you aren't using an fp for anything other than RAW, there are much better choices on the market. So i think we can ignore any tests with MOV formats. I may try the resolution stuff myself when i have a moment - my feeling is that these are debayering artefacts, i think it unlikely they are introduced errors from the camera. I do think that how you debayer makes a lot of difference - different algorithms for different uses.

The flickering is not in his video is it? But yours? Do you mean flickering of exposure or the flickering of fine detail as it moves from pixel to pixel? Up to a certain point i think this could be lack of OLPF here... cheers Paul

Sigma Fp review and interview / Cinema DNG RAW

paulinventome replied to Andrew - EOSHD's topic in Cameras

That's my thinking. AFAIK highlight reconstruction happens best in XYZ space and i'd read that the BRAW files are white balanced. But that was something i'd read and have no idea whether it's true or not. It's difficult to know whether this is RAW or not - the ability to debayer according to new future algorithms and no white balance baked in really seems RAW to me. I don't quite understand the benefit of raw processing in camera vs a log file out of a camera... cheers Paul

Sigma Fp review and interview / Cinema DNG RAW

paulinventome replied to Andrew - EOSHD's topic in Cameras

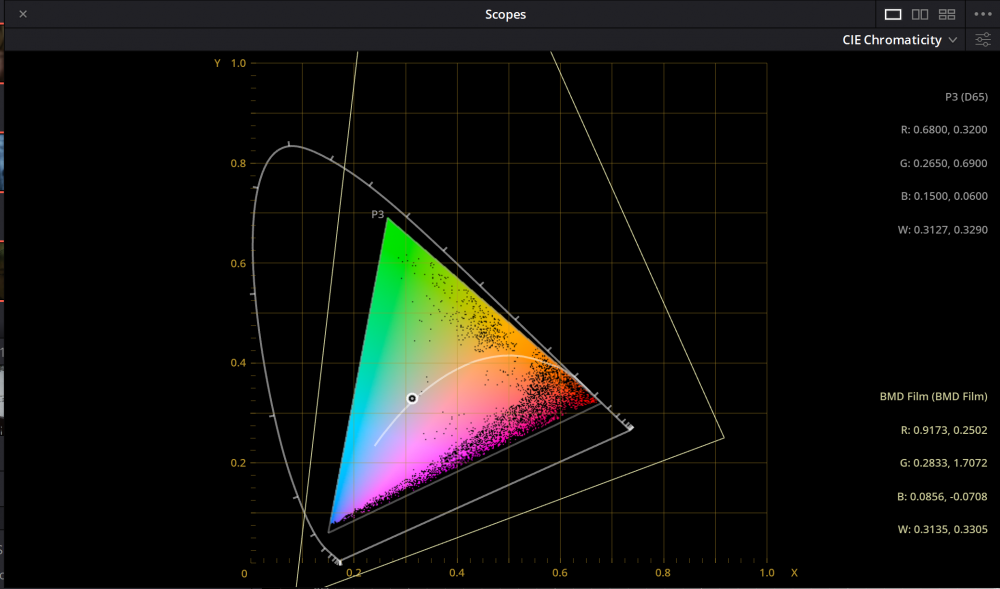

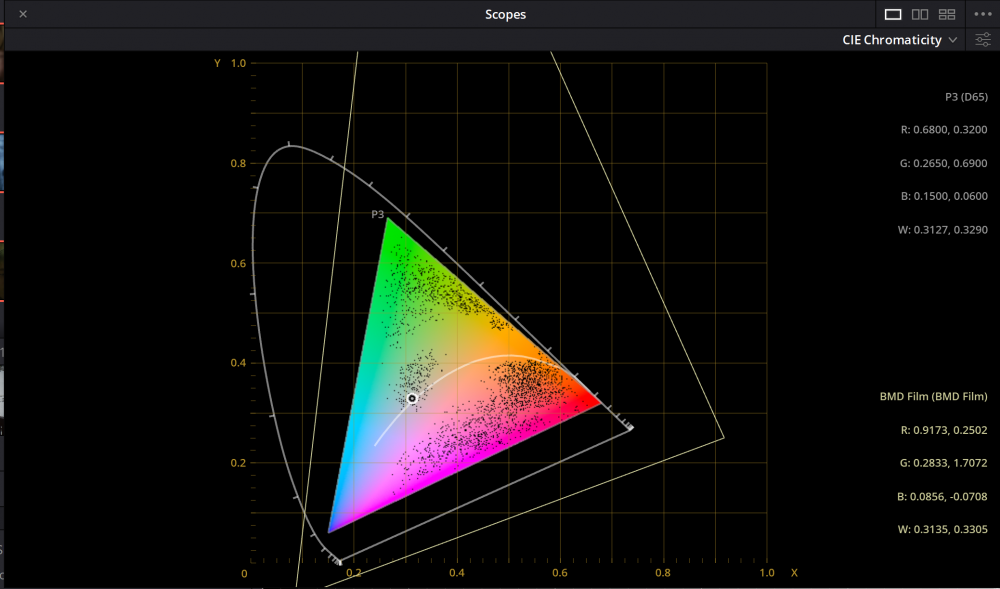

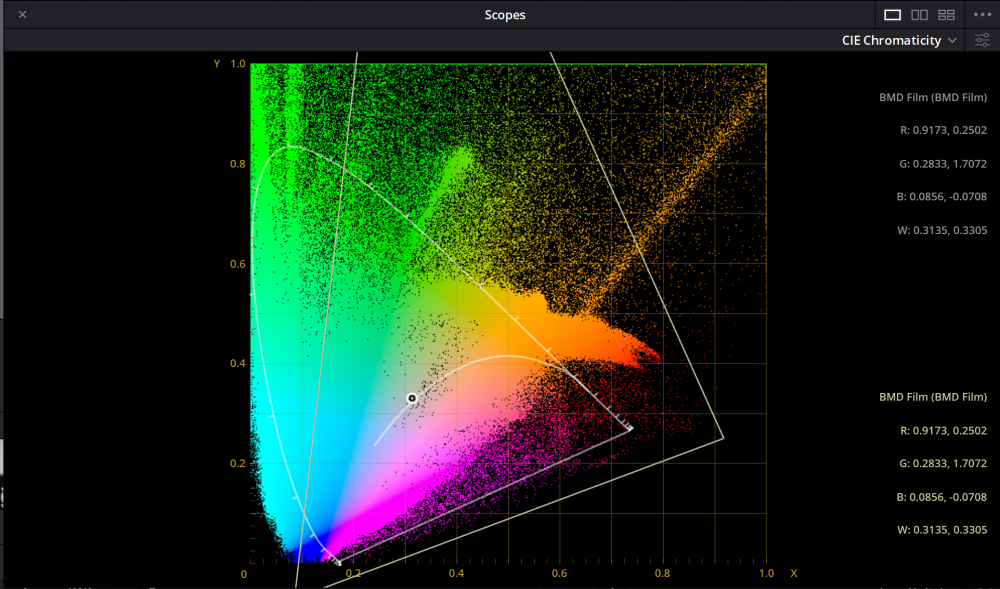

Thanks for clarifying officially! If you actually do this then the settings are Color Space: Blackmagic Design, Gamma: Blackmagic Design Film. The gamma curve brings all the range in and the colourspace is BMD. What does that actually mean though, you say that it won't touch gamut?

To try and understand i use the CIE diagram scope. Setting the DNG to P3 vs BMD Film 1 (in a P3 timeline) i see the first two diagrams. First is BMD Film into a P3 timeline and the second is debayering P3 into a P3 timeline. Now this is basically a point of confusion in Resolve for me - to understand what resolve is actually doing in this case. A saturated Red in sensor space in the DNG is transformed into 709/P3/BMD Film - but is that saturated Red actually mapped into the target space or is it placed at the right point? I cannot work it out.

So for example if that sensor Red is outside of 709 space then IMHO Resolve could either clip the values or remap them. But if you switched to P3 and that Red lay within it then no mapping has to happen. But on the CIE diagram i do not see this. I would expect to switch between 709 and P3 and the colours inside the triangles not move. In other words in a sufficiently large colourspace on that CIE diagram i ought to be able to see what the camera space is (ish). So when i do this and choose BMD Film and set the timeline to that as well i get the 3rd diagram - which is beyond visible colours, so i have to assume i just don't understand what Resolve is doing here or that CIE diagram is not working?

Any light shed (pun intended) would be super nice! cheers Paul
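The expectation in the post (a colour has one position on the CIE xy diagram, and only the triangle drawn around it changes between 709 and P3) can be written down as a point-in-triangle test. This is a minimal sketch using the published Rec.709 and P3 primaries; the sample chromaticity is a made-up saturated red, and none of this describes what Resolve does internally.

```python
# CIE 1931 xy chromaticities of the primaries, in R, G, B order.
REC709 = [(0.640, 0.330), (0.300, 0.600), (0.150, 0.060)]
P3 = [(0.680, 0.320), (0.265, 0.690), (0.150, 0.060)]


def _cross(o, a, b):
    """Z component of the cross product of vectors o->a and o->b."""
    return (a[0] - o[0]) * (b[1] - o[1]) - (a[1] - o[1]) * (b[0] - o[0])


def inside_gamut(xy, primaries):
    """True if chromaticity xy lies inside (or on) the primaries' triangle."""
    r, g, b = primaries
    d1, d2, d3 = _cross(r, g, xy), _cross(g, b, xy), _cross(b, r, xy)
    has_negative = d1 < 0 or d2 < 0 or d3 < 0
    has_positive = d1 > 0 or d2 > 0 or d3 > 0
    return not (has_negative and has_positive)


saturated_red = (0.660, 0.325)   # hypothetical sample, not measured from an fp
d65_white = (0.3127, 0.3290)

for name, xy in (("saturated red", saturated_red), ("D65 white", d65_white)):
    print(name, "in 709:", inside_gamut(xy, REC709), "in P3:", inside_gamut(xy, P3))
```

The sample red falls outside the 709 triangle but inside P3 without its xy position changing, which is the behaviour the post expects to see on the scope. If the plotted points move when the timeline space changes, some remapping or reinterpretation is happening before the scope.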

Sigma Fp review and interview / Cinema DNG RAW

paulinventome replied to Andrew - EOSHD's topic in Cameras

@rawshooter Excellent work, thank you. I don't know very much about BRAW and workflows. One thing i am aware of is that quite a few cameras (usually not doing RAW) will do their own highlight processing in camera before spitting out an image. It's nigh impossible to get an R3D processed via IPP2 without it, because it's also a valid method of reconstructing an image (just as filling in missing pixel values is). So the check would be: are those BRAW files actually just raw data from the camera or do they have any camera processing in them?

Then an important part of range is the colour quality, so it's quite usual for people to shoot colourful scenes or charts and over and under expose them, bring them back to a standard exposure and see how the colours are - in day to day shooting this can be important. To do this i personally tend to use shutter speed, not aperture, as lenses aren't that accurate (not sure shutter speed is either but think it's more likely).

I do have a step wedge here somewhere that i would like to use to shoot some range charts but have mislaid it! I reckon the fp is doing around 12 stops and it's fairly clean in the shadows so it's quite a usable range. As i said before there is a sensor mode that does 14 bit out but i don't think that's being used anywhere. One thing with the fp is that it's easy to compare stills to cine. cheers Paul
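The over and under exposure test described above comes down to simple arithmetic: each stop doubles or halves the shutter time, and bringing the shot back to standard exposure means applying the inverse gain to the linear data. A minimal sketch, assuming a base shutter of 1/50 s and a bracket of plus or minus three stops (both made-up values):

```python
def exposure_bracket(base_shutter_s, stop_offsets):
    """Return (stops, shutter time, normalising gain) for each bracket step."""
    steps = []
    for stops in stop_offsets:
        shutter = base_shutter_s * 2.0 ** stops   # +1 stop doubles the light
        gain = 2.0 ** -stops                      # linear gain that undoes it
        steps.append((stops, shutter, gain))
    return steps


bracket = exposure_bracket(1 / 50, range(-3, 4))

for stops, shutter, gain in bracket:
    print(f"{stops:+d} stops: 1/{1 / shutter:.0f} s, normalise with x{gain:g}")
```

Applying the gain to the linear raw values (before any display curve) is what makes the normalised frames comparable; how the colours hold up after that is the part the test is meant to reveal.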

Sigma Fp review and interview / Cinema DNG RAW

paulinventome replied to Andrew - EOSHD's topic in Cameras

Personally i don't tend to use gimbals. A lot of what i do can be monopod. I have a carbon fibre one with what's like a gel cushion on top that allows me to level the camera easily. Of course moving is a different case and i have some edelkrone slider/heads which work fairly well. I have some bigger ones too but for the fp they're overkill. However one day i'll get one, if for no other reason than they'd make a nice motorised head. If the software works well. cheers Paul insta: paul.inventome

Sigma Fp review and interview / Cinema DNG RAW

paulinventome replied to Andrew - EOSHD's topic in Cameras

IMHO you generally shouldn't run a gimbal anywhere near its payload capacity. I'd have thought the S would be perfect? Would you be loading up the fp? wireless follow focus? cage? power? cheers Paul insta: paul.inventome

Sigma Fp review and interview / Cinema DNG RAW

paulinventome replied to Andrew - EOSHD's topic in Cameras

That's very kind of you, thank you. Post is minimal at the moment, however i think one 'trick' is that i have this as a Resolve project which is YRGB Color Managed: my timeline is 709, output colourspace is 709 but my Timeline to Output Gamut Mapping is RED IPP2, with medium and medium. (Input colourspace is RedWideGamut but AFAIK when dealing with RAW and DNGs this is ignored.) This is because most of the project is Red based. BUT the sigma fp footage is debayering into 709 (so in Camera RAW for DNG it is Colourspace 709 and Gamma 709 with highlight recovery by default).

What happens is that the DNGs are debayered correctly, with full data. But that IPP2 mapping is handling the contrast and highlight rolloff for my project as a whole, including the DNGs. IMHO i do this all the time with various footage, not least because it's easier to match different cameras but mostly because that IPP2 mapping is really nice. Whilst i'm sure you can massage your highlights to roll off softly, it makes more sense for me to push footage through the same pipeline.

Take some footage and try. When you push the exposure underneath the IPP2 mapping the results look natural and the colours and saturation expose 'properly'. Turn it off and then you're in the land of saturated highlights and all sorts of oddness that you have to deal with manually. This is not a fault of the footage but the workflow. Running any baked codec makes this more difficult - the success of this approach is based on the source being linear and natural.

As i say the fp is like an old cine camera, the bells and whistles are minimal but if you're happy with manual everything i think it can produce lovely images and it's so quick to pull out of a bag. If the above doesn't make sense let me know and i'll try to put together a sample. Cheers Paul insta: paul.inventome

Sigma Fp review and interview / Cinema DNG RAW

paulinventome replied to Andrew - EOSHD's topic in Cameras

So i said i'd post some stills, these are basically ungraded.

This frame is in a sequence with car lights, i like the tonality of this very subdued moment. Shot 12bit to manage shadow tonality.

From a different point above. All shot on a 50mm M Summicron, probably wide open.

I think i hit the saturation slider here in Resolve. But this had a car rolling over the camera. It's a 21mm CV lens and i see some CA from the lens that i would deal with in post. But i'd never let a car run over a Red!

Shot on an 85mm APO off a monopod. Nice tonality again and it's daylight from windows with some small panel lights bouncing and filling in.

A reverse of the above.

Some fun shots.

I think the true benefit of something like the fp is the speed at which you can see something and grab it. Using it just with an SSD plugged in and manual M lenses gives a more spontaneous feel. Now most of the film will be shot on Red, in controlled conditions with a crew, and that's the right approach for multiple dialogue scenes and careful blocking. But the fp has its place and i may hand it to someone and just say grab stuff. cheers Paul

Sigma Fp review and interview / Cinema DNG RAW

paulinventome replied to Andrew - EOSHD's topic in Cameras

The BM Film setting is specifically designed for BMD sensor response. I believe someone from BMD also confirmed this. The fp sensor is not the same and making assumptions about that response will lead to colour errors - albeit perhaps minor. Depending on project (709 vs P3) i have been debayering into the target colourspace directly, which AFAIK uses the matrices inside the DNG files to handle the colour according to sigma's own settings. So if i am on a P3 project i would debayer directly into P3. There is also an argument to say that you should *always* debayer into P3 because it is a larger space and then you have the flexibility to massage the image into 709 the way you want to - this is really about bright saturated lights and out of 709 gamut colours, which the sensor can see and record, especially reds.

In Resolve my timeline is set to 709/P3 as the grading controls are really designed for 709. In the camera RAW setting for each clip i would massage the exposure/shadows/highlights to taste. I see no purpose in going to a log image when the RAW is linear. If you need to apply a LUT that is expecting a log image then you can actually change a single node to do that. IMHO of course, your own needs could be different! Grading RAW is dead easy. Cheers Paul

There is something going on here for sure - i sometimes see the screen vary in front of me without doing anything. There have been stills that are more underexposed than i expected, and i reported a bug to them about the stills preview being brighter than cine for the same settings but i couldn't reproduce it. So yeah, there is a bug somewhere! cheers Paul