paulinventome

Members · 162 posts


Everything posted by paulinventome


Sigma fp and EVF-11 for other cameras... Could be possible

paulinventome replied to paulinventome's topic in Cameras

Good thoughts! I spoke to Sigma, who said it wasn't possible. Whether that's true or not I don't know. I must assume there are handshakes going on, because there is a switch on the EVF for flipping between LCD and EVF and that has to pass back into the camera somehow. I could rig something for power (you are right, that has to be the power source). I have extension cables for USB and HDMI, so if I power it then it should work as is, and then I can swap the HDMI signal and see if that works... Despite what Sigma says it is still tempting, as officially I doubt they could say it would work (it was worth an ask). There's a new Leica M11 EVF as well... Hmmmm. Cheers, Paul

Sigma fp and EVF-11 for other cameras... Could be possible

paulinventome replied to paulinventome's topic in Cameras

I did, it's still quite big. I have a Gratical Eye and the OEYE is quite a bit longer. At the moment I'm using Hasselblad OVF prisms on the Komodo screen itself, which is 1440px across anyway. Very easy but limited angles. I may speak to Portkeys actually; they just released an SDI version of their mini screen, which in some ways is more compact. Thanks, Paul

So I just got an EVF-11 for the Sigma fp and it's a really nice, small, lightweight EVF. It uses the HDMI out, USB-C, and there are two prongs that I believe connect to the flash output on the side of the Sigma fp. I want to see if I can use this for my Komodo, which is crying out for a small EVF (the Zacuto stuff is too large; I have an Eye already and would happily get rid of it). So the first experiment was to get some cables to plug the Komodo into the HDMI (via an SDI converter) and then plug the USB-C from the camera to the EVF, thinking that maybe that would be enough to start with. But no go. Then I saw the two flash prongs and wondered whether they were power and the USB-C connection was control. So has anyone got any details on the pins for the flash on the side, or has anyone experimented? I'm just trying a proof of concept to see if it's possible; the EVF might be relying on control from the camera over that USB-C, in which case all bets are off... Cheers, Paul


Sigma Fp review and interview / Cinema DNG RAW

paulinventome replied to Andrew - EOSHD's topic in Cameras

I think that's pretty unfair without knowing what we do. Most of my work is P3-based. Ironically, the vast majority of Apple devices have P3 screens, and there's lots of work targeting those screens. Rec.2020 is taking hold in TVs and is becoming more relevant, and of course HDR is not sRGB. VFX work isn't done in sRGB, and in my experience no professional workflow is sRGB. All we're doing is taking quarantine time and being geeks about our cameras. Just like to know, right? My main camera is a RED, not a Sigma fp, but the Sigma is really nice for what it is. It's just some fun, and along the way we all learn some new things (I hope!). Cheers, Paul

Sigma Fp review and interview / Cinema DNG RAW

paulinventome replied to Andrew - EOSHD's topic in Cameras

I'm with you on all this, but I don't know what you need Sigma to supply... The two matrices inside each DNG handle the sensor-to-XYZ conversion for two illuminants. The resulting XYZ colours are not really bound by a colourspace as such, but clearly only those colours the sensor sees will be recorded. So the edges will not be straight; there's no colour gamut as such, just a spectral response. But the issue (if there is one) is Resolve taking the XYZ and *then* turning it into a bound colourspace, 709. And really I still can't quite work out whether Resolve will only turn DNGs into 709 or not. So as you say, for film people P3 is way more important than 709, and I am not 100% convinced the Resolve workflow opens that up. But I might be wrong, as it's proving quite difficult to test (those CIE scopes do appear to be a bit all over the place, and I don't think they're reliable). As I mentioned before, I would normally Nuke it, but at the moment the Sigma DNGs for some reason don't want to open. Now, I could be missing something here, so I am wondering what Sigma should supply? Cheers, Paul
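For anyone curious how "two matrices for two illuminants" becomes one matrix for a given shot, here is a minimal sketch of the blend the DNG specification describes: interpolate linearly in *inverse* colour temperature between ColorMatrix1 and ColorMatrix2. Strictly, the spec defines those matrices as XYZ to camera, so a converter inverts the result to go camera to XYZ. The function names and the 2856 K / 6504 K defaults (Standard Illuminant A and D65, a common pairing) are assumptions for illustration; in the spec the temperature itself is estimated from the as-shot white balance, which this sketch just takes as an input.

```python
# Hypothetical helper names; matrices are plain 3x3 nested lists already read from a DNG.

def illuminant_weight(cct, cct1, cct2):
    """Weight for matrix 1, linear in 1/CCT: 1.0 at cct1, 0.0 at cct2, clamped outside."""
    w = (1.0 / cct - 1.0 / cct2) / (1.0 / cct1 - 1.0 / cct2)
    return min(max(w, 0.0), 1.0)


def interpolate_matrix(m1, m2, cct, cct1=2856.0, cct2=6504.0):
    """Blend ColorMatrix1 (calibrated at cct1) and ColorMatrix2 (calibrated at cct2)."""
    w = illuminant_weight(cct, cct1, cct2)
    return [[w * a + (1.0 - w) * b for a, b in zip(row1, row2)]
            for row1, row2 in zip(m1, m2)]


# Toy matrices just to show the blend; real DNG matrices are camera specific.
m_tungsten = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
m_daylight = [[2.0, 0.0, 0.0], [0.0, 2.0, 0.0], [0.0, 0.0, 2.0]]
print(interpolate_matrix(m_tungsten, m_daylight, 4000.0))  # somewhere between the two
```

Note the colours that come out are still only what the sensor responded to; the matrix just expresses that response in XYZ.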

Sigma Fp review and interview / Cinema DNG RAW

paulinventome replied to Andrew - EOSHD's topic in Cameras

Hi Devon, I missed this, sorry. Unbound RGB is a floating-point version of the colours where they have no upper and lower limits (well, infinity or max(float) really). If a colour is described in a finite set of numbers, 0...255, then it is bound between those values, but you also need to be able to say which red is 255. That's where a colourspace comes in: each colourspace defines a different 'redness', so P3 has a more saturated red than 709. There are many mathematical operations that need bounds, otherwise the math fails (divide, for example), whereas addition can work on unbound values. There's a more in-depth explanation here with pictures too! https://ninedegreesbelow.com/photography/unbounded-srgb-divide-blend-mode.html Hope that helps? Cheers, Paul
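A tiny Python illustration of the divide point, in the spirit of the linked article but not taken from it: the blend functions below are simple hypothetical per-channel versions, and the "unbound" colour is an assumed example of what an out-of-gamut conversion can leave behind (red above 1, green below 0).

```python
def divide_blend(base, blend):
    """Per-channel 'divide' blend: base / blend."""
    return [b / d for b, d in zip(base, blend)]


def add_blend(base, blend):
    """Per-channel 'add' blend: base + blend."""
    return [b + d for b, d in zip(base, blend)]


base = [0.5, 0.5, 0.5]
in_range = [0.25, 0.5, 1.0]  # ordinary bound values in 0..1
unbound = [1.2, -0.1, 0.5]   # red above 1, negative green (out of gamut)

print(divide_blend(base, in_range))  # [2.0, 1.0, 0.5]
print(divide_blend(base, unbound))   # green comes out strongly negative
print(add_blend(base, unbound))      # addition still behaves predictably
```

With bound inputs every result keeps a sensible sign; once a channel is negative (or near zero) the division flips or explodes, which is exactly the kind of failure the article shows visually.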

Sigma Fp review and interview / Cinema DNG RAW

paulinventome replied to Andrew - EOSHD's topic in Cameras

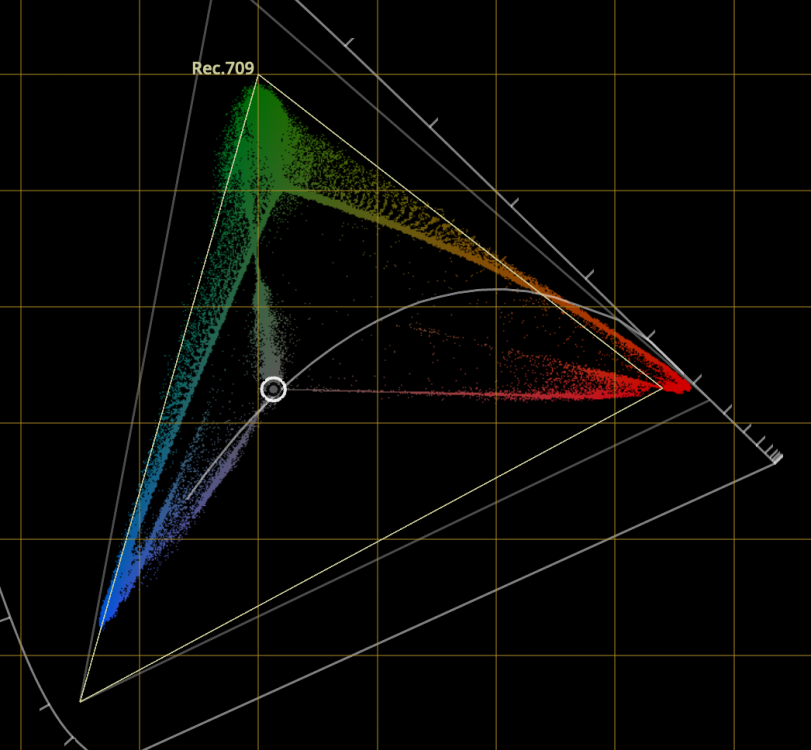

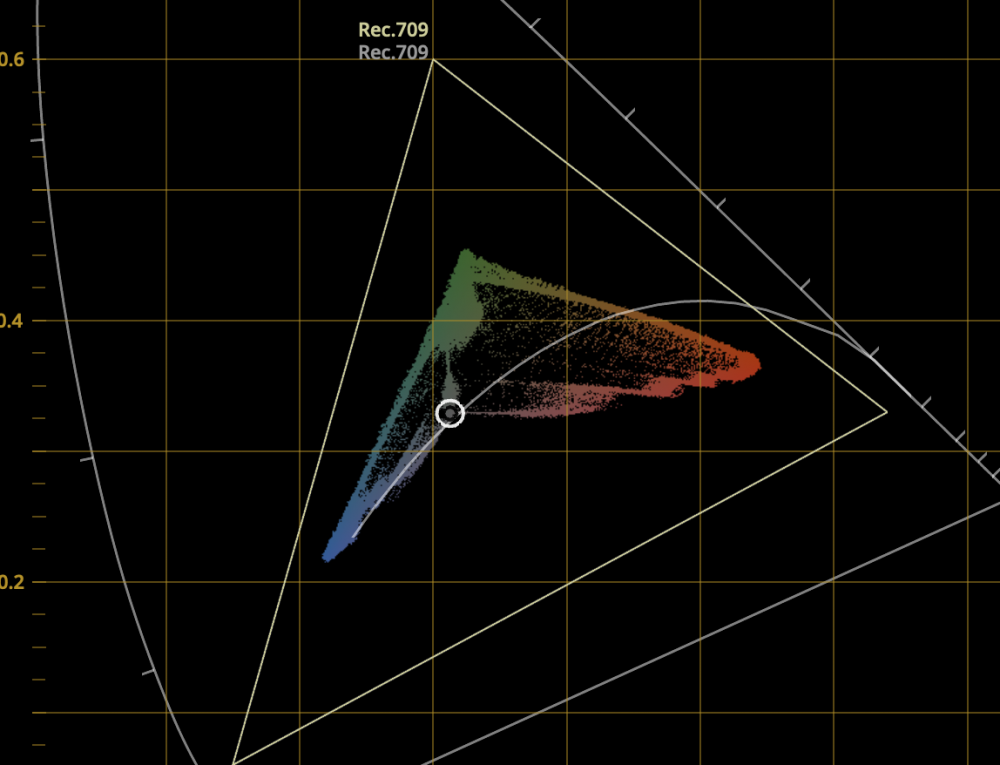

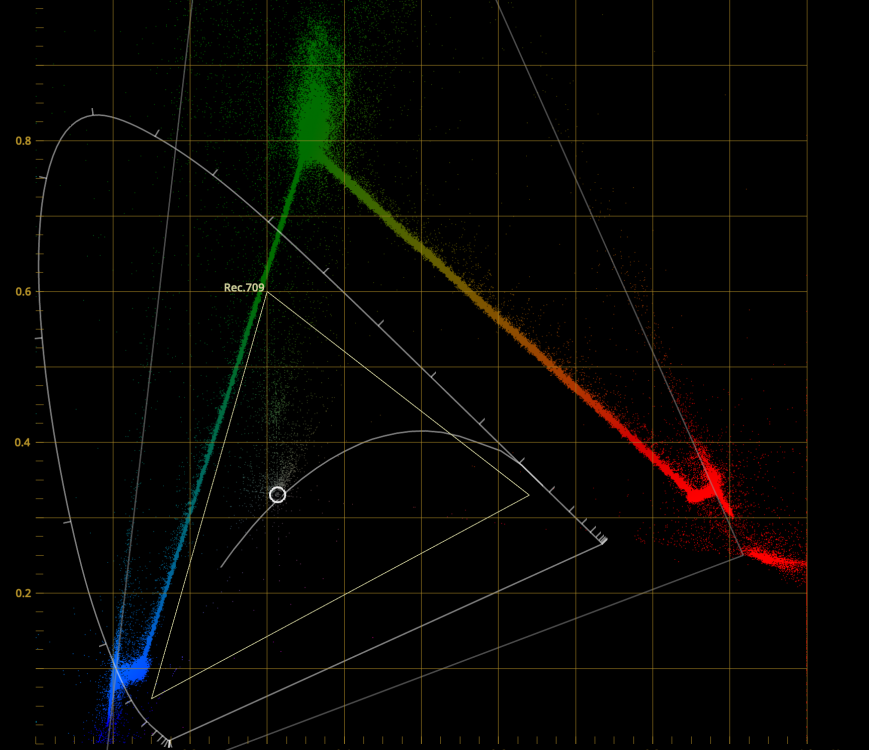

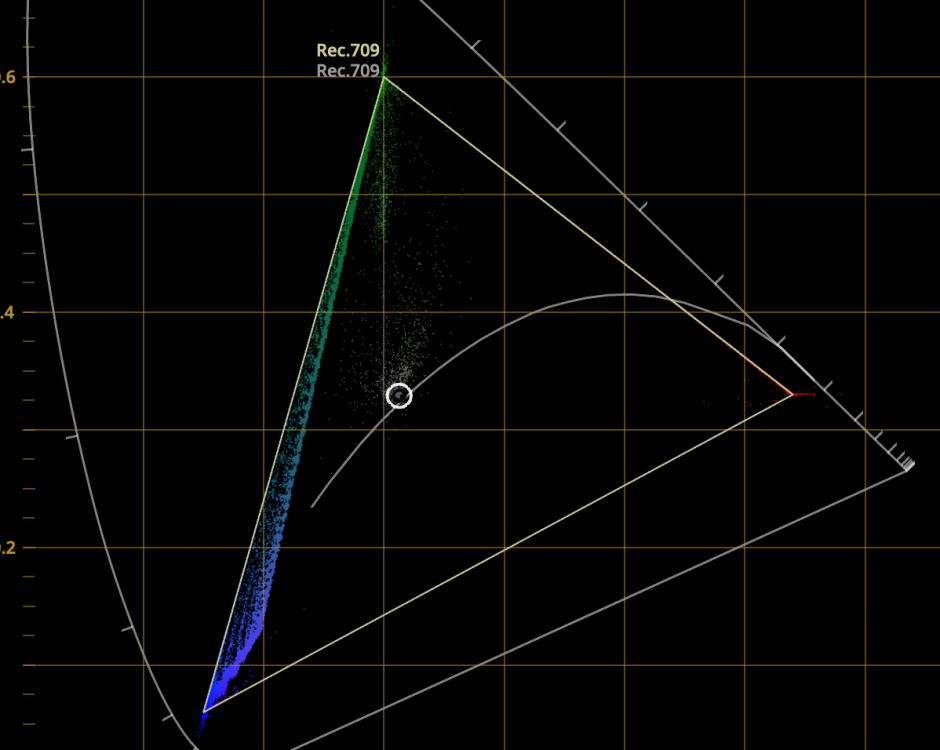

First, just to say how much I appreciate you taking the time to answer these esoteric questions! So I have a DNG which is a shot of a Macbeth chart with a reference P3 display behind it showing fully saturated R, G and B. So in this scene I hope I have pushed the sensor beyond 709 as a test. Resolve is set to DaVinci YRGB and not colour managed, so I am now able to change the Camera RAW settings. I set the color space to Blackmagic Design and the gamma to Blackmagic Design Film. To start with my timeline is set to P3 and I am using the CIE scopes; the first image I enclosed shows what I see. Firstly, I can see the response of the camera going beyond a primary triangle, so this is good. As you say, the spectral response is not a well-defined gamut, and I think this display shows that. But my choice of timeline is P3, and it looks like that is working, with the colours hovering around 709, but this could be luck. Changing the timeline to 709 gives a reduced gamut, and likewise setting the timeline to BMD Film gives an exploded gamut view. So BMD Film is not clipping any colours. The 4th image is when I set everything to 709, so those original colours beyond green and red appear to be clamped or gamut mapped into 709. So I *think* I am seeing a native gamut beyond 709 in the DNG, but applying the normal DNG route seems to clamp the colours. But I could just be reading these diagrams wrong. Also, with BMD Film in Camera RAW, what should the timeline be set to, and should I be converting BMD Film manually into a space? I hope this makes sense; I've a feeling I might have lost the plot on the way... Cheers, Paul
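What the CIE scope overlay is doing visually can be checked numerically: project a colour to CIE 1931 xy and test whether it sits inside the Rec.709 or P3 primaries triangle. This is a generic point-in-triangle sketch using the published xy primaries, not how Resolve's scope is implemented, and the "deep red" chromaticity is a hypothetical example value.

```python
# xy chromaticities of the primaries (both spaces use a D65 white point).
REC709 = [(0.640, 0.330), (0.300, 0.600), (0.150, 0.060)]
P3_D65 = [(0.680, 0.320), (0.265, 0.690), (0.150, 0.060)]


def xyz_to_xy(X, Y, Z):
    """Project CIE XYZ onto the 1931 xy chromaticity diagram."""
    s = X + Y + Z
    return (X / s, Y / s)


def _cross(o, a, b):
    """z of (a - o) x (b - o); its sign says which side of edge o->a point b is on."""
    return (a[0] - o[0]) * (b[1] - o[1]) - (a[1] - o[1]) * (b[0] - o[0])


def inside_gamut(xy, primaries):
    """True if chromaticity xy lies inside (or on the edge of) the primaries triangle."""
    r, g, b = primaries
    d = (_cross(r, g, xy), _cross(g, b, xy), _cross(b, r, xy))
    has_neg = any(v < 0 for v in d)
    has_pos = any(v > 0 for v in d)
    return not (has_neg and has_pos)


white = xyz_to_xy(0.95047, 1.0, 1.08883)  # D65, roughly (0.3127, 0.3290)
deep_red = (0.66, 0.32)                    # hypothetical saturated red
print(inside_gamut(white, REC709), inside_gamut(white, P3_D65))        # True True
print(inside_gamut(deep_red, REC709), inside_gamut(deep_red, P3_D65))  # False True
```

The caveat is the one in the post: a scope can only show colours outside a triangle if nothing earlier in the pipeline has already clamped or gamut mapped them.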

Sigma Fp review and interview / Cinema DNG RAW

paulinventome replied to Andrew - EOSHD's topic in Cameras

I don't know what the native space of the fp is, but we do get RAW from it, so hopefully there's no colourspace transform, and I want to know I am getting the best out of the camera. And I'm a geek and like to know. I *believe* that Resolve by default appears to be debayering and delivering 709 space, and I *think* the sensor native space is greater than that; certainly with Sony sensors I've had in the past it is. There was a whole thing with RAW on the FS700 where 99% of people weren't doing it right, and I worked with a colour scientist on a plug-in for SpeedGrade that managed to do it right and got stunning images from that combo (IMHO FS700+RAW is still one of the nicest 4K RAW cameras if you do the right thing). The problem with most workflows was that reds were out of gamut and being contaminated with negative green values in Resolve (this was v11/v12 maybe?). I have screenshots of the scopes with negative values showing, and so many comparisons! I understand that the matrices transform from sensor to XYZ, that there's no guarantee the sensor can see any particular colour, and that the sensor has a spectral response, not a defined gamut. I also know that if I was able to shine full-spectrum light on the sensor I could probably record the sensor response; I know someone in Australia who does this for multiple cameras. But within the Resolve ecosystem, how do I get it to give me all the colour in a suitably large space so I can see? If I turn off colour management and manually use the gamut in the Camera RAW tab, Resolve appears to be *scaling* the colour to fit P3 or 709. What I want to see is the scene looking the same in 709 and P3 except where there is a super-saturated red (for example), and in P3 I want to see more tonality there.
I'm battling Resolve a little because I don't know 100% what it is doing behind the scenes. If I could get the DNGs into Nuke then I am familiar enough with that side to work this out, but for some reason the Sigma DNGs don't work! Thanks! Paul
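To see where "negative green" comes from, here is a sketch that derives RGB-to-XYZ matrices from xy primaries and a white point (the standard textbook construction), then converts a fully saturated P3 red into Rec.709. P3 red stands in here for a sensor red that lies beyond 709; this is an illustration of the maths, not the SpeedGrade plug-in mentioned above or anything Resolve does internally. Values are linear light, and both spaces use D65, so no chromatic adaptation is needed.

```python
D65 = (0.3127, 0.3290)
REC709 = [(0.640, 0.330), (0.300, 0.600), (0.150, 0.060)]
P3_D65 = [(0.680, 0.320), (0.265, 0.690), (0.150, 0.060)]


def xy_to_XYZ(x, y):
    return [x / y, 1.0, (1.0 - x - y) / y]


def matvec(m, v):
    return [sum(m[i][k] * v[k] for k in range(3)) for i in range(3)]


def matmul(a, b):
    return [[sum(a[i][k] * b[k][j] for k in range(3)) for j in range(3)] for i in range(3)]


def inv3(m):
    """Inverse of a 3x3 matrix via the adjugate."""
    (a, b, c), (d, e, f), (g, h, i) = m
    det = a * (e * i - f * h) - b * (d * i - f * g) + c * (d * h - e * g)
    adj = [[e * i - f * h, c * h - b * i, b * f - c * e],
           [f * g - d * i, a * i - c * g, c * d - a * f],
           [d * h - e * g, b * g - a * h, a * e - b * d]]
    return [[v / det for v in row] for row in adj]


def rgb_to_xyz_matrix(primaries, white):
    cols = [xy_to_XYZ(*p) for p in primaries]
    m = [[cols[j][i] for j in range(3)] for i in range(3)]  # primaries as columns
    s = matvec(inv3(m), xy_to_XYZ(*white))                  # scale so RGB(1,1,1) -> white
    return [[m[i][j] * s[j] for j in range(3)] for i in range(3)]


P3_TO_709 = matmul(inv3(rgb_to_xyz_matrix(REC709, D65)),
                   rgb_to_xyz_matrix(P3_D65, D65))

p3_red_in_709 = matvec(P3_TO_709, [1.0, 0.0, 0.0])
print(p3_red_in_709)  # roughly [1.22, -0.04, -0.02]
```

The red channel goes above 1 and green/blue go negative: 709 can only "reach" that red by subtracting green, which is the contamination that shows up on the scopes if nothing gamut maps it.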

Sigma Fp review and interview / Cinema DNG RAW

paulinventome replied to Andrew - EOSHD's topic in Cameras

I got a reply from them last week; has it shut down recently? We need to double-check we're talking about the same thing. Halfway through I see an exposure 'pulse': not a single-frame flash, more a pulse. I believe it's an exposure thing in linear space, but when viewed like this it affects the shadows much more. Can you post a direct link to the video and maybe enable download, so we can step through it frame by frame? A mild pulsing is also very common in compression, so we want to make sure we're not looking at that. Cheers, Paul

Sigma Fp review and interview / Cinema DNG RAW

paulinventome replied to Andrew - EOSHD's topic in Cameras

Actually, you don't really want to work in a huge colourspace, because colour math goes wrong and some things are more difficult to do. Extreme examples here: https://ninedegreesbelow.com/photography/unbounded-srgb-as-universal-working-space.html There were even issues with CGI rendering in P3 space. You want to work in a space large enough to contain your colour gamut. These days it's probably prudent to work in Rec.2020 or one of the ACES spaces designed specifically as working spaces. What you say is essentially true. If you are mastering, then you'd work in a large space and then do a trim pass for each deliverable: film, digital cinema, TV, YouTube, etc. You can transform from a large space into each deliverable, but if your source uses colours and values beyond your destination then a manual trim is the best approach. You see this more often now with HDR: a wide-colour-space HDR master is done and then additional passes are done for SDR, etc. However, this is at the high end. Even in indie cinema the vast majority of grading and delivery is still done in 709 space, and we are still a little way off that changing. Bear in mind that the vast majority of cameras are really seeing in 709. P3 is plenty big enough; Rec.2020 IMHO is a bit over the top. Cheers, Paul
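To put rough numbers on "P3 is plenty, 2020 is over the top", here is a quick sketch comparing the area of each primaries triangle on the CIE 1931 xy diagram, using the published primaries. Treat it as indicative only: xy is not perceptually uniform, so area ratios overstate or understate how different the spaces look.

```python
GAMUTS = {
    "Rec.709": [(0.640, 0.330), (0.300, 0.600), (0.150, 0.060)],
    "P3": [(0.680, 0.320), (0.265, 0.690), (0.150, 0.060)],
    "Rec.2020": [(0.708, 0.292), (0.170, 0.797), (0.131, 0.046)],
}


def triangle_area(pts):
    """Shoelace formula for the area of a triangle given three (x, y) points."""
    (x1, y1), (x2, y2), (x3, y3) = pts
    return abs(x1 * (y2 - y3) + x2 * (y3 - y1) + x3 * (y1 - y2)) / 2.0


base = triangle_area(GAMUTS["Rec.709"])
for name, prims in GAMUTS.items():
    area = triangle_area(prims)
    print(f"{name:9s} area {area:.4f}  ({area / base:.2f}x Rec.709)")
```

On this crude measure P3 is about 1.36x the size of Rec.709 and Rec.2020 about 1.89x, which is why a working space far bigger than your material spreads grading controls so thin.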

Sigma Fp review and interview / Cinema DNG RAW

paulinventome replied to Andrew - EOSHD's topic in Cameras

If you're talking about the shadow flash halfway through, then yes. At ISO 400 only. And yes, Sigma are aware of it, and I'm awaiting some data as to whether we can guarantee it only happens at 400. This is the same for me: it's only 400 for me, whereas others have seen it at other ISOs... Cheers, Paul

Sigma Fp review and interview / Cinema DNG RAW

paulinventome replied to Andrew - EOSHD's topic in Cameras

That would be awesome! Yes, thank you. Sorry, I missed this as I was scanning the forum earlier. Much appreciated! Paul

Sigma Fp review and interview / Cinema DNG RAW

paulinventome replied to Andrew - EOSHD's topic in Cameras

And is that sensor space defined by the matrices in the DNG, or is it built into Resolve specifically for BMD cameras? I did try to shoot a P3 image off my reference monitor, actually an RGB chart in both P3 and 709 space. The idea is to try various methods of displaying it to see if the sensor space is larger than 709. Results so far are inconclusive! Too many variables that could mess the results up. If you turn off Resolve colour management then you can choose the space to debayer into. But if you choose P3 then the image is scaled into P3, correct? If you choose 709 it is scaled into that. So it seems that all of the options scale to fit the selected space. Can you suggest a workflow that might reveal the native gamut? For some reason I cannot get the Sigma DNGs into Nuke, otherwise I'd be able to confirm there. In my experience with Sony sensors, the reds usually go way beyond 709. So end to end there's a bunch of things to check. One example is whether choosing a colourspace on the camera itself alters the matrices in the DNG, i.e. how much pre-processing happens to the colour in the camera. Cheers, Paul
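One way to answer "is there colour beyond 709 in here?" without trusting a scope: take *unclamped* linear XYZ pixels and count how many land outside Rec.709 once converted. In linear 709 RGB, values above 1 are just brightness, so a pixel is out of gamut only if a component goes negative. Assumptions: the XYZ is D65-relative with white at Y = 1, the matrix is the widely published XYZ-to-linear-sRGB/709 one, and the pixel list stands in for whatever tool actually decodes the DNG (not shown).

```python
# Widely published XYZ (D65) -> linear Rec.709/sRGB matrix.
XYZ_TO_709 = [
    [3.2404542, -1.5371385, -0.4985314],
    [-0.9692660, 1.8760108, 0.0415560],
    [0.0556434, -0.2040259, 1.0572252],
]


def xyz_to_rec709(xyz):
    return [sum(row[k] * xyz[k] for k in range(3)) for row in XYZ_TO_709]


def fraction_outside_709(pixels, tol=1e-4):
    """Share of pixels with any negative linear 709 component (i.e. beyond 709)."""
    outside = sum(1 for p in pixels if min(xyz_to_rec709(p)) < -tol)
    return outside / len(pixels)


pixels = [
    (0.95047, 1.0, 1.08883),        # D65 white
    (0.4124564, 0.2126729, 0.0193339),  # Rec.709 red primary
    (0.4866, 0.2290, 0.0),          # approximately the P3 red primary
]
print(fraction_outside_709(pixels))  # one of three pixels lies beyond 709
```

If the same DNG debayered "into 709" reports zero here while the unclamped route does not, that points to the colour being squeezed somewhere upstream.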

Sigma Fp review and interview / Cinema DNG RAW

paulinventome replied to Andrew - EOSHD's topic in Cameras

Some small compensation for the lockdown. I've been taking mine out on our daily exercise. It's pretty quiet around here, as expected. The dogs are getting more walks than they've ever had in their lives and must be wondering what's going on... I am probably heading towards the 11672, the latest Summicron version. I like the bokeh of it. As you say, getting a used copy of a lens this new is difficult. Have fun though: avoid checking for flicker in the shadows and enjoy the cam first!! Cheers, Paul

Sigma Fp review and interview / Cinema DNG RAW

paulinventome replied to Andrew - EOSHD's topic in Cameras

Thanks for doing that! It confirms that the fp is probably similar to the a7S. The f/4 is clearly smeared, as expected. I'd hoped that with no OLPF it would be closer to an M camera. So it seems that it's the latest versions I will be looking for... The 28 Cron seems spectacular in most ways. The 35 is too close to the 50, and I find the bokeh of the 35 a little harsher than the others (of course there are so many versions that it's difficult to tell sometimes!). Thanks again, Paul

Sigma Fp review and interview / Cinema DNG RAW

paulinventome replied to Andrew - EOSHD's topic in Cameras

Thank you! Yes, I had most of the Voigtländers, from 12mm upwards, on various Sonys. They were pretty good, even the 12. The Sigma is a bit better with the 12 as well. My understanding was that the 28s in particular were problematic because of the exit pupil distance in the design. It was after reading Jono Slack's article that I obsessed a bit more about it all. I was never happy with the Voigtländer f/1.5 and finally decided to try the Summicron. I fell in love with the look and the render, and found it matched my APO cine lenses better. So I decided to sell most of the Voigtländers and just focus on 3 Leica lenses: 28, 50 and 90. There's nowhere I've found in the UK to rent them to test, hence asking people! Love the photos. The only issue is that most of my photography may be like that, but I do also need f/8 landscapes/architecture and as flat a field as possible, so I'm looking for samples like that too! Cheers, Paul

Sigma Fp review and interview / Cinema DNG RAW

paulinventome replied to Andrew - EOSHD's topic in Cameras

Even Calman isn't perfect. The guys at Light Illusion really, really know their stuff, and some of the articles on their site can be really useful. I tend to profile a monitor with a 17-point cube and then use that as a basis to generate a LUT for different colourspaces. So even on a factory-calibrated 709 display (a Postium reference monitor) I found that I still needed a LUT to get the calibration as good as I could. I even used two different probes, and sanity-checked by calibrating an iPad Pro (a remarkably good display), matching that, and then taking it to the cinema and projecting the test footage so I could eyeball it against the iPad. Once I know I have devices I can be pretty calm about, that's a good place to start. I work from home and I'm not a dedicated facility, so I do the best with what I have. One issue with the iDevice displays, though, is that OLED is an odd creature: only OLED can create fully saturated colours in the shadows. So if you watch a profile of, say, 100% red, then as the brightness decreases the red stays at the same chroma coordinates, whereas on pretty much all other display technologies the chroma will desaturate. I mentioned the saturation as a way to eyeball the white balance: push it up and you see colour casts very easily. I found your DNG looked perfectly natural with default settings. As to the question of what Resolve is doing with the fp colours, I still don't 100% know. I think it does the same in ACES as with DNG. In your case I think you were using an AP1 space, and if you saturate in that then you can push the colours to the edge of AP1, which is expected? Especially via a node, because the Sigma colours start off as a small portion of AP1 but the Resolve node can push them anywhere within that colourspace. Working in too big a colourspace is also problematic. The original ACES space (AP0?) was too big as a working space, as the grading controls would be too heavy-handed with such a huge space to work within.
The original AP0 was considered an archiving space, not a working one. The math can also behave differently; there were some great examples of math failing in unbound RGB and even in P3. So going with the biggest is not always the best! I think seeing what the camera is doing would be a case of shooting some super-saturated colours, beyond Pointer's gamut (the gamut of real surface colours), and then taking that DNG into Resolve. Do it as 709, then as P3, and compare whether a) it looks the same save for some parts and b) the CIE diagrams clearly show colours beyond 709 without any tweaks. I wonder how it would fare if I shot an RGB chart off my P3 reference monitor? If this is a Sony sensor then I would pretty much guarantee the red is beyond 709; this was an issue I had with Sony sensors way back with the FS100, the FS700 and the a7 series. Cheers, Paul
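For anyone wondering what a "17 cube" calibration LUT actually does to each pixel: it is a lattice of 17 x 17 x 17 output colours, and anything between lattice points is interpolated. Below is a minimal trilinear lookup as a sketch. The lut[r][g][b] indexing and the identity LUT are assumptions for illustration; real tools such as LightSpace or Resolve may use other layouts and tetrahedral interpolation, and this is not their code.

```python
def identity_lut(n):
    """n x n x n LUT indexed lut[r][g][b] -> (R, G, B); identity mapping."""
    step = 1.0 / (n - 1)
    return [[[(r * step, g * step, b * step) for b in range(n)]
             for g in range(n)] for r in range(n)]


def apply_lut(lut, rgb):
    """Look up one 0..1 RGB triple with trilinear interpolation (input clamped)."""
    n = len(lut)
    coords = [min(max(c, 0.0), 1.0) * (n - 1) for c in rgb]
    base = [min(int(c), n - 2) for c in coords]   # lower lattice corner
    frac = [c - i for c, i in zip(coords, base)]  # position inside the cell
    out = [0.0, 0.0, 0.0]
    for dr in (0, 1):
        for dg in (0, 1):
            for db in (0, 1):
                # Weight of this corner = product of per-axis distances.
                w = ((frac[0] if dr else 1 - frac[0])
                     * (frac[1] if dg else 1 - frac[1])
                     * (frac[2] if db else 1 - frac[2]))
                node = lut[base[0] + dr][base[1] + dg][base[2] + db]
                for k in range(3):
                    out[k] += w * node[k]
    return tuple(out)


lut17 = identity_lut(17)                  # 17-point cube, as in the post
print(apply_lut(lut17, (0.3, 0.5, 0.9)))  # ~ (0.3, 0.5, 0.9)
```

A real calibration LUT holds measured corrections instead of the identity, which is why it needs somewhere in the chain to live: a monitor that accepts uploads, a LUT box, or the output stage of the grading app.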

Sigma Fp review and interview / Cinema DNG RAW

paulinventome replied to Andrew - EOSHD's topic in Cameras

As far as I understand, the first versions of both the Elmarit and the Summicron had huge issues on non-M cameras, because the filter stacks are too thick and the rear of the lens produced very shallow ray angles. Jono Slack did a comparison of the V1 and V2 Summicron on a Sony a7 and the results were very different; the performance of the V1 on the a7 was abysmal. Again, focused at infinity. But the fp has no OLPF and there's no data as to how thick that stack is. I've been after a 28 Cron for a while, but the V2 is expensive and elusive, whereas there are plenty of V1s around, and there are no tests of either on an fp. The 50 and 90 are incredible on the fp, but I need a wide to go with them. So I would be super interested to see how that fares when you do get your fp. This is less FC and more smearing: more an issue with the camera than the lens. Thanks, Paul

Sigma Fp review and interview / Cinema DNG RAW

paulinventome replied to Andrew - EOSHD's topic in Cameras

That's really nice of you to say so; I appreciate it. I think in these times of quarantine we can all obsess a bit. I looked at the DNG and ACES. My observations (as I don't generally use ACES):
- For DNGs the input transform does nothing and you have no choice over colourspace or gamma in the RAW settings, which makes sense. But the question is what Resolve is doing with the data: is it limiting it to a colourspace? Is it P3? Is it 709? Is it native camera?
- This ACES version uses a better timeline colourspace. But Resolve really works best (in terms of the secondaries and grading controls) when using something like 709. If you qualify something when you have the timeline set to a log-style colourspace you'll find it really difficult. I don't know enough about this setup to know whether Resolve works at its best here. I would hope that, being a basic setting, Resolve would know about it, but I once made the huge mistake of setting my timeline to REDWideGamutRGB/Log3G10 and couldn't work out why everything just didn't work very well!
- The outputs are plentiful, and you should pick the output that best matches your monitor. If I switch my P3 display between P3 and P3 (709 Limited), the image doesn't change; therefore in this scenario I believe that Resolve is debayering the Sigma footage into 709 space.
- The Sigma controls let you boost saturation; the white point of those images is around 9000 K, as you can push saturation and balance until it's mostly grey.
I thought that ACES included an RRT, which is a rendering intent (like a film emulation); maybe I just can't find this in Resolve at the moment. So what I would be interested in is comparing CIE diagrams for this vs other routes, to see if the DNGs hold colours beyond 709. Clearly we are nitpicking at a level that is frankly embarrassing, but I'm bored... Cheers, Paul

Sigma Fp review and interview / Cinema DNG RAW

paulinventome replied to Andrew - EOSHD's topic in Cameras

Good stuff on CoD; I don't play it, but it looks pretty. The issue under Windows (and Mac to a lesser degree) is that there is no OS support even if you can calibrate your monitor: there's nowhere in the chain to put a calibration LUT. So really you're looking at a monitor you can upload one to, or a separate LUT box you can run the signal through. When you scrape beneath the surface you will be amazed at how little support there is for what is a basic necessity. For gaming, your targets are most likely the monitors you use, so that makes sense. But for projection or broadcast you really have to do your best to standardise, because after you there's no way of knowing how screwed up the display chain will be. Especially with multiple vendors and pipelines, it's essential to standardise. Look at LightSpace; IMHO it's much better than DisplayCAL. That's what I use to stay on top of displays and devices. I will take a look at the DNG. This is really worth a watch: Cheers, Paul

Sigma Fp review and interview / Cinema DNG RAW

paulinventome replied to Andrew - EOSHD's topic in Cameras

In theory, if the camera sees a Macbeth chart under a light that it is white balanced for, at a decent exposure, and you have the pipeline set up correctly, you should see what you saw in real life. If you decide that the colours are wrong then that's a creative choice of yours, but the aim is really to get what you saw in real life as a starting point, then change things to how you like. Sounds like you may be on Windows? One of the problems with grading with a UI on a P3 monitor is that your UI and other colours are going to be super, super saturated, because the OS doesn't do colour management and doesn't know what monitor you have; so when it says red, your monitor will put up NutterSaturatedRed rather than what is recognised as a normal red. When you are dealing with colours like that your eye will adapt and you will end up oversaturating your work. Anyway, you probably know this, but it's one of the biggest bugbears with Adobe: with no colour management it's easy to grade on a consumer monitor whose white point is skewed green/magenta and then make weird decisions. But I digress... Have you got a single DNG with that chart in it? I'd like to take a look and see how it looks. Cheers, Paul
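A small sketch of the two ideas above: white balancing from a neutral chip on the chart, and why pushing saturation makes a leftover cast obvious. It assumes linear, black-subtracted camera RGB and normalises the gains to green, as raw converters commonly do. The patch values are hypothetical, and the mean-based saturation boost is a simple stand-in, not Resolve's saturation control.

```python
def wb_gains(neutral_rgb):
    """Gains that make a neutral patch equal in R, G and B (green left at 1.0)."""
    r, g, b = neutral_rgb
    return (g / r, 1.0, g / b)


def apply_gains(rgb, gains):
    return tuple(c * k for c, k in zip(rgb, gains))


def boost_saturation(rgb, amount):
    """Push each channel away from the pixel mean; any leftover cast grows with it."""
    m = sum(rgb) / 3.0
    return tuple(m + (c - m) * amount for c in rgb)


grey_patch = (0.20, 0.40, 0.25)  # hypothetical linear camera RGB of a grey chip
gains = wb_gains(grey_patch)     # about (2.0, 1.0, 1.6)
print(apply_gains(grey_patch, gains))             # ~ (0.4, 0.4, 0.4)
print(boost_saturation((0.42, 0.40, 0.38), 4.0))  # a slight warm cast becomes obvious
```

A correctly balanced grey stays grey however hard you push saturation; a slightly warm one spreads from a 0.04 red/blue difference to 0.16.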

Sigma Fp review and interview / Cinema DNG RAW

paulinventome replied to Andrew - EOSHD's topic in Cameras

Which version of the Elmarit, the newest one? Can you do me a favour and let me know what the corners are like at infinity on the fp? Cheers! Paul

Sigma Fp review and interview / Cinema DNG RAW

paulinventome replied to Andrew - EOSHD's topic in Cameras

What are you viewing on? If you're viewing on a modern Apple device (MacBooks, iMacs, iDevices), then P3 is the correct output colourspace for your monitor. If you view 709 on a P3 monitor without conversion it will look overly saturated; conversely, if you view P3 on a 709 display it will look desaturated (the colours will also be off: reds will be more orange and so on). Colour management is a complicated subject and it can get confusing fast. In theory you shouldn't have to saturate images to make them look right. I have a feeling that perhaps something isn't set up quite right in your workflow. Resolve can manage different displays, so it can separate the timeline colour space from the monitor space, which is really important. On my main workstation I have a 5K GUI monitor which is 709, but also a properly calibrated P3 display going through a DeckLink card. So I am managing two different monitor setups. First you choose which timeline space you are working in. 709 is best for most cases, but if you have footage or motion graphics in P3, or are targeting Apple's ecosystem, then a P3 timeline can be helpful. P3 gives you access to teals and saturated colours that 709 cannot, but *only* a P3 display can show you those. The way you monitor is independent of your timeline. You could have two monitors plugged in, one 709 and one P3. Each of those monitors needs a conversion out (in the Resolve settings). If your display is P3 and your timeline is 709, then a correct conversion to P3 is needed so you see the 709 colours properly saturated. If your timeline is P3 and you have a 709 broadcast monitor, then that conversion has to do some gamut mapping or clipping to show the best version of P3 it can in a limited colour space. This also applies to exporting images. The vast majority of JPEG stills are 709. The vast majority of video is 709.
But Apple will tag images and movies with Display P3 when it can; however, universal support for playing these just isn't there. So you kind of have to work backwards and ask: where are my images going to end up? Cheers, Paul
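Here is a sketch of the two monitoring cases above, in linear light (display encoding/gamma is left out for simplicity). A 709 timeline shown on a P3 monitor needs a 709-to-P3 conversion, and every 709 colour fits. A P3 timeline shown on a 709 monitor needs a P3-to-709 conversion plus some way of handling what does not fit; hard clipping is used here as the crudest option, and real tools may use softer gamut mapping. The matrices are derived from the published primaries with the standard construction; this is not Resolve's implementation.

```python
D65 = (0.3127, 0.3290)
REC709 = [(0.640, 0.330), (0.300, 0.600), (0.150, 0.060)]
P3_D65 = [(0.680, 0.320), (0.265, 0.690), (0.150, 0.060)]


def xy_to_XYZ(x, y):
    return [x / y, 1.0, (1.0 - x - y) / y]


def matvec(m, v):
    return [sum(m[i][k] * v[k] for k in range(3)) for i in range(3)]


def matmul(a, b):
    return [[sum(a[i][k] * b[k][j] for k in range(3)) for j in range(3)] for i in range(3)]


def inv3(m):
    """Inverse of a 3x3 matrix via the adjugate."""
    (a, b, c), (d, e, f), (g, h, i) = m
    det = a * (e * i - f * h) - b * (d * i - f * g) + c * (d * h - e * g)
    adj = [[e * i - f * h, c * h - b * i, b * f - c * e],
           [f * g - d * i, a * i - c * g, c * d - a * f],
           [d * h - e * g, b * g - a * h, a * e - b * d]]
    return [[v / det for v in row] for row in adj]


def rgb_to_xyz_matrix(primaries, white):
    cols = [xy_to_XYZ(*p) for p in primaries]
    m = [[cols[j][i] for j in range(3)] for i in range(3)]  # primaries as columns
    s = matvec(inv3(m), xy_to_XYZ(*white))                  # scale so RGB(1,1,1) -> white
    return [[m[i][j] * s[j] for j in range(3)] for i in range(3)]


M709 = rgb_to_xyz_matrix(REC709, D65)
MP3 = rgb_to_xyz_matrix(P3_D65, D65)
REC709_TO_P3 = matmul(inv3(MP3), M709)
P3_TO_709 = matmul(inv3(M709), MP3)


def for_p3_display(rgb709):
    """709 timeline -> P3 monitor: every 709 colour fits, so no clipping is needed."""
    return matvec(REC709_TO_P3, rgb709)


def for_709_display(rgb_p3):
    """P3 timeline -> 709 monitor: convert, then hard-clip whatever does not fit."""
    return [min(max(c, 0.0), 1.0) for c in matvec(P3_TO_709, rgb_p3)]


print(for_p3_display([1.0, 0.0, 0.0]))   # 709 red on a P3 panel: roughly [0.82, 0.03, 0.02]
print(for_709_display([1.0, 0.0, 0.0]))  # P3 red on a 709 panel: clipped to [1.0, 0.0, 0.0]
```

Sending 709 red to the P3 panel as plain (1, 0, 0) skips the first conversion, which is exactly the "looks oversaturated" case described above.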

Sigma Fp review and interview / Cinema DNG RAW

paulinventome replied to Andrew - EOSHD's topic in Cameras

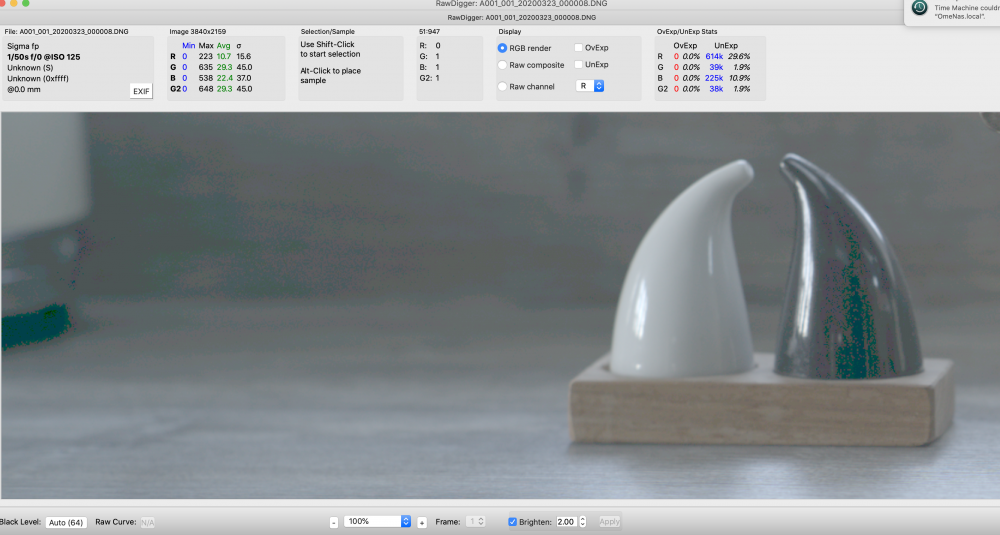

Just double-checking, but yeah, the green/magenta stuff is in the actual DNG itself. No question. What's happening is perhaps a truncation issue when converting between the 12-bit source and the 10-bit container. The green values are rgb(0,1,1), so no red value. This is the very lowest stop of recorded light, either 0 or 1. I think this is quite common, because, come to think of it, the a7S always suffered from green/magenta speckled noise, and that would make sense if the bottom two stops are being truncated strongly and then there's noise on top of it. What Sigma *ought* to do is balance the bottom two stops and make them an equal grey, because the colour cast from a single differing digit is quite strong. This could be what ACES and BMD Film are doing. I still say it's a bug, but if you go from having 16 values to describe a colour to 1 value, then any kind of tint will be made stronger when it's truncated. But I think I understand the why better now. Also, @Chris Whitten, if you look at the image stats you can see that the highest recorded value in that DNG is 648, and this is a 10-bit container, so there are roughly 375 more values that could be recorded here. I know this is an underexposure test, so that's why in this case!! (Not teaching you to suck eggs, I hope!!! I certainly don't mean to come across like that.) RawDigger is an awesome tool if you want to poke around inside DNGs!!! Highly recommended!! Cheers, Paul
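A sketch of the truncation theory, plus the headroom arithmetic. The near-black 12-bit pixel is a hypothetical value chosen to show how dropping the two low bits can turn a faint tint into rgb(0,1,1), while rounding keeps it neutral. This is not Sigma's actual processing, and for simplicity it ignores the DNG black level, so the headroom figure is approximate.

```python
import math


def to_10bit_truncate(v12):
    """Drop the two least significant bits (12-bit -> 10-bit by truncation)."""
    return v12 >> 2


def to_10bit_round(v12):
    """Round to the nearest 10-bit code instead, clamped to the 10-bit maximum."""
    return min((v12 + 2) >> 2, 1023)


def headroom_stops(peak, max_code=1023):
    """How far (in stops) the brightest recorded value sits below full scale."""
    return math.log2(max_code / peak)


near_black_12bit = (3, 5, 4)  # hypothetical near-black pixel with a faint tint
print([to_10bit_truncate(v) for v in near_black_12bit])  # [0, 1, 1]
print([to_10bit_round(v) for v in near_black_12bit])     # [1, 1, 1]
print(round(headroom_stops(648), 2))                     # 0.66
```

So a peak of 648 in a 10-bit container is only about two thirds of a stop below full scale, while at the very bottom a one-code difference between channels is enough to produce a strong green or magenta cast.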

Sigma Fp review and interview / Cinema DNG RAW

paulinventome replied to Andrew - EOSHD's topic in Cameras

Of course. Use info@sigma-photo.co.jp Kindest, Paul