Everything posted by paulinventome

-

Sigma Fp review and interview / Cinema DNG RAW

paulinventome replied to Andrew - EOSHD's topic in Cameras

IMHO most likely crappy debayering combined with some serious 'clarity' adjustments in that last shot. Whilst i'm all for destruction testing images, i also have to balance that against the fact that i don't shoot like that. I'm more interested in the things that show all the time: tonality and range. If you ever get to push Lightroom around on high contrast edges, check for some really odd debayering artefacts - and this is supposed to be pro-stills level. The beauty of debayering is the ability to choose different algorithms depending on scene - of course no one actually offers that... cheers Paul -

Sigma Fp review and interview / Cinema DNG RAW

paulinventome replied to Andrew - EOSHD's topic in Cameras

The charts and the sharpness tests are tough metrics and not real world either. AFAIK the downsampling is in some way to minimise moire because there is no OLPF. You can't really have an OLPF in a camera that switches modes or does stills. I've seen plenty of shots ruined because of that from some quite well known 'prosumer' cameras as well. And BMD is quite notorious for this. They score well on tests but not so much in real world. But the sigma image is quite soft. What the slashcam test shows though is a difference between crop and normal and that's interesting. So full frame is downsampled, but then it's also fair to say the downsampling isn't an even number and maybe that's why we're seeing these kind of results, whereas the S35 mode might actually be putting the sensor into a windowed mode and that's worth looking at more. The 12 bit footage - i strongly think that if you are recording 12 bit linear you should push the image as high as you can and bring it down in post. Because that really is linear, the brightest stop of light will show about 2000 steps of gradation (2048 of the 4096 values). So push as far as you can to take advantage of the tonality. Of course that's not always possible. The 8 bit footage less so because they are reshaping the data so that top stop takes much less space. Again i'd really hope for shaped 10 bit from 12 bit linear. I do believe if this is the IMX sensor then there is a 4K 14bit crop mode which would be awesome. But with no OLPF then as an overall format you'd see much more aliasing. Lightroom is quite a bad debayer - i see artefacts all over the place. Resolve is a bit better. SpeedGrade was poor. And Nuke used to have some really good options in there (but those got 'upgraded'). Motion RAW is quite a bit more difficult than stills RAW and i don't think there is a good DNG option for debayering out there. It would be possible to use temporal debayer but i am not aware of anything like that which exists... 
Also the reason why it seems better to push the shadows than bring the highlights down is something that just occurred to me on a long drive back. The 12 bit is just truncating the top stops. The whole camera exposure is the same for stills vs cine. So same settings like for like, but the cine has the 2 bits chopped off. So the expectation is for there to be highlight headroom relative to mid grey, and it's not quite there. That 14 bit 4K mode would be perfect! cheers Paul -
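To put numbers on the linear tonality point, here is a minimal sketch that counts how many integer code values land in each stop of a plain linear encoding. The function name is made up for illustration and it assumes an ideal linear file with no black offset, which a real DNG will not quite be.

```python
def codes_per_stop(bit_depth: int) -> list[int]:
    """Count the integer code values in each stop of a linear encoding.

    The list runs from the brightest stop down to the darkest one.
    Assumes an ideal linear file with no black level offset.
    """
    top = 2 ** bit_depth              # one past the largest code value
    counts = []
    while top > 1:
        bottom = top // 2             # one stop down halves the value
        counts.append(top - bottom)   # codes in the range [bottom, top)
        top = bottom
    return counts


twelve_bit = codes_per_stop(12)
print("brightest stop:", twelve_bit[0])
print("three darkest stops together:", sum(twelve_bit[-3:]))
```

The brightest stop takes half of all the values and the three darkest stops share only a handful, which is why exposing as high as the highlights allow pays off with a linear file.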

Sigma Fp review and interview / Cinema DNG RAW

paulinventome replied to Andrew - EOSHD's topic in Cameras

Scene dependent really, and it depends on what you're looking to achieve.
- Outside with clouds: you can set zebra to 100, and when the clouds clip you can recover some of that because the green and red channels won't have clipped (getting a RAW clipping indicator for each RGB channel would be really useful)
- If it's dark and you have latitude, better to expose up and bump up the ISO, then take that down in post to get cleaner shadows
- But if you're going to clip the highlights you really need, then bumping up the shadows will be the best bet - as you say.

In reality it's probably safer in a full dynamic range scene to expose 'properly' and have a bit of latitude for moving stuff around. Just be mindful that the shadows, at the sensor side, have progressively less tonality the lower you go and all sensors are like that! cheers Paul -

Sigma Fp review and interview / Cinema DNG RAW

paulinventome replied to Andrew - EOSHD's topic in Cameras

I missed the question about scaling. I know from sigma themselves that the whole 6K image is read and is scaled down on the camera. This is also done to help reduce any aliasing as there is no OLPF in front of the sensor (which would make it a terrible stills camera). Trade-offs. Is it worth it? Well i got one as a B Cam and i can rig and do things i wouldn't be able to previously. It's an amazing image. Basically a sensor in a box. I don't know anything else in that price range. Really awesome little camera. Downsides?
- You do have to understand RAW and post workflows
- The firmware can be improved and hopefully sigma will improve the tonality (there's nothing technical stopping them)
- The screen isn't articulated
- It's not very ergonomic to hold
- The VF makes it very easy to focus (i have it now) but adds bulk, and of course it's in a fixed position so you can't look down at the camera, it's always in front of your face
- No mechanical shutter for stills (but not been an issue for me yet)

Lots of SSDs needed and high capacity cards! cheers Paul -

Sigma Fp review and interview / Cinema DNG RAW

paulinventome replied to Andrew - EOSHD's topic in Cameras

AFAIK it's a mode on the sensor itself so the data coming off the sensor is binned in that mode. This is why the rolling shutter is so much better in that specific mode. It is also 12 bit even to SD Card. However the FHD can show aliasing on smooth lines. IMHO you are better shooting UHD (even in 8 bit) and downsampling to HD in post. Much, much better result assuming you can achieve the frame rate you want. The sensor (if it is the one we all think it is) does have a 4K DCI binned mode in 14 bit - this would be really nice to have. Otherwise all the cDNG formats are 12 bit max, so you will only see 12 stops max (real stops) from the image. I have compared stills DNG with cine DNG and can quite clearly see the missing stops at the top end. I would say it's closer to 1 to 1.5 stops difference. But.... If you look at the S1H for example (same sensor perhaps?) in 6K mode, C5D is claiming 12.7 stops of range. Well the difference is that the S1H is *probably* doing highlight reconstruction as part of white balancing and baking out a movie. This would yield a few more stops on a wedge chart (it's ideal for wedge charts!). It would be interesting to see a RAW test with the S1H. But the fp will get you the same range with reconstruction (Lightroom does it anyway and in Resolve it's a checkbox), perhaps more. Colourwise it's good. I can match Red footage but the Red has more range, at least 2 or 3 stops more on a practical basis. I've shot side by side through ranges. The ISO matches fairly well as well but i would tend to expose the fp slightly higher when possible to protect the shadows. As for those videos - RAW is not simple to handle properly - simple as that. The second video shows the guy really has *no idea* what he's talking about and doesn't understand what RAW is or sensors in general, it's almost comically bad. The bad blacks - it's difficult to say without seeing the RAW he shot - i suspect it is massively underexposed and he's pulled the shadows up. 
The thing about RAW is that with no compression you will see each pixel the sensor saw. As there is no noise there i guess the ISO was very low therefore probably just failed to expose correctly. Because it's linear - as i keep saying - the first 3 stops are represented in 7 code values. Usually compression just screws this totally and they're expanded out and noise from compression hides this but this is what the sensor (any sensor!) sees cheers Paul -

Sigma Fp review and interview / Cinema DNG RAW

paulinventome replied to Andrew - EOSHD's topic in Cameras

Yup. Recording RAW is a pro choice, there's no way around that, and for holiday videos and snaps, as you've found, it isn't ideal. Maybe once they include some kind of log recording through firmware it would be better suited to that. But i see this as a sensor in a box and you build everything around that. The cinematic DNG test that Lars posted back there is perhaps some of the best footage that's been publicly shown from the camera. These are very challenging scenes for any camera and the fp does a fantastic job. However their post workflow isn't ideal - i see exposure wavering between frames which is either a factor of the firmware version they are using or the fact that it appears to be batch processed through Lightroom. The native gamut of the camera is certainly beyond 709, so as i guessed that workflow is going to be the nut to crack. I've shot primaries off a reference monitor in P3 and 709 and can clearly see the difference. So any workflow built around 709 (Adobe) will somehow be messing with the native gamut to squeeze it into the 709 bucket, meaning colour clipping or scaling - all warning signs for me that the final image will be compromised. Another reason why the camera isn't for the masses. I personally would not archive DNGs in any log or compressed format but slimRAW is a good choice. I don't see the point of having a camera that can dump out RAW then transcoding to a lesser format. Just get a camera that shoots in the format you need. This will be sigma's marketing issue. I understand what they're aiming for but it's a small market. Steve Huff certainly has fallen for the stills side and i get my VF today i think, in which case then i can spend some time using it like that. cheers Paul -
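A rough illustration of the "squeezing into the 709 bucket" problem. This sketch takes a pure P3 red as a stand-in for an out-of-709 colour (it is not the camera's native gamut, which i don't have numbers for) and expresses it in linear Rec.709. The matrices are derived from the published chromaticities with a D65 white point; the helper names are made up for the example.

```python
# Chromaticities (x, y) for the red, green and blue primaries, plus D65 white.
REC709 = [(0.640, 0.330), (0.300, 0.600), (0.150, 0.060)]
P3 = [(0.680, 0.320), (0.265, 0.690), (0.150, 0.060)]
D65 = (0.3127, 0.3290)


def xyz(x, y):
    """XYZ of a chromaticity, normalised to Y = 1."""
    return [x / y, 1.0, (1.0 - x - y) / y]


def mat_vec(m, v):
    return [sum(m[i][j] * v[j] for j in range(3)) for i in range(3)]


def inverse(m):
    """Inverse of a 3x3 matrix via the adjugate."""
    (a, b, c), (d, e, f), (g, h, i) = m
    det = a * (e * i - f * h) - b * (d * i - f * g) + c * (d * h - e * g)
    return [
        [(e * i - f * h) / det, (c * h - b * i) / det, (b * f - c * e) / det],
        [(f * g - d * i) / det, (a * i - c * g) / det, (c * d - a * f) / det],
        [(d * h - e * g) / det, (b * g - a * h) / det, (a * e - b * d) / det],
    ]


def rgb_to_xyz_matrix(primaries, white):
    """Build the linear RGB to XYZ matrix for a set of primaries."""
    cols = [xyz(*p) for p in primaries]
    m = [[cols[j][i] for j in range(3)] for i in range(3)]  # primaries as columns
    scale = mat_vec(inverse(m), xyz(*white))  # make RGB (1, 1, 1) hit white
    return [[m[i][j] * scale[j] for j in range(3)] for i in range(3)]


M709 = rgb_to_xyz_matrix(REC709, D65)
MP3 = rgb_to_xyz_matrix(P3, D65)

# Pure P3 red expressed in linear Rec.709, then naively clipped to 0..1.
p3_red_in_709 = mat_vec(inverse(M709), mat_vec(MP3, [1.0, 0.0, 0.0]))
clipped = [min(1.0, max(0.0, c)) for c in p3_red_in_709]
print(p3_red_in_709, clipped)
```

Red comes out above 1 with green and blue below 0, so a 709 pipeline has to either clip those values (changing the colour) or scale the whole gamut in, which is the compromise described above.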

Sigma Fp review and interview / Cinema DNG RAW

paulinventome replied to Andrew - EOSHD's topic in Cameras

But there's no quick way to set that focus point is there? It's a multiple key press to get to a point to move the reticule around and then that spot stays. Very frustrating when you can't really focus off the screen very well. I hope we can get the way this works changed a bit! cheers Paul -

Sigma Fp review and interview / Cinema DNG RAW

paulinventome replied to Andrew - EOSHD's topic in Cameras

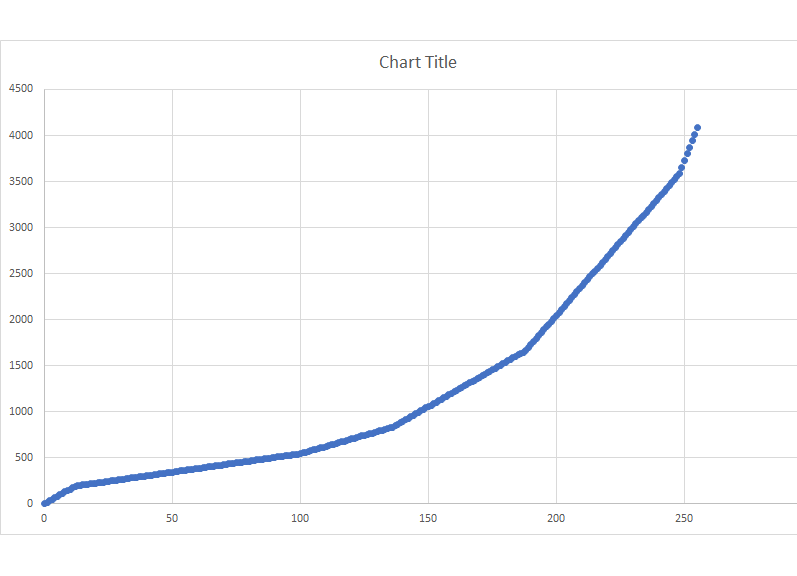

A bit more info for those interested. Firstly, as expected, setting white balance in camera does not affect the RAW file. There have been cameras that have baked white balance before, the Sony FS700 for one used to do that (or the FS7, one of them did). But that does not happen here. Secondly i'm enclosing the image of the linearisation curve in 8 bit. It's a good curve, it's not log but redistributes the values in a way that makes sense. Thirdly. Anyone worked out WTF is going on with focus magnification? So in cine mode, i can assign the AEL button to zoom. But how do i move around the screen to focus on different areas. I would have thought the control ring would (like on any other camera). It is possible - you can do it through elevntybillion key presses. Also pressing the touch screen would make sense but that just puts the auto focus points up... I must be missing something here! cheers Paul -

Sigma Fp review and interview / Cinema DNG RAW

paulinventome replied to Andrew - EOSHD's topic in Cameras

Red uses traffic light warnings that come on when that channel is clipped. That's generally all i want. I usually don't want to monitor the entire signal i would rather a contrasty image to focus and compose with. But a user LUT would give us both. The problem with monitoring a log signal is that it has been white balanced so therefore no RAW clipping info. If it wasn't white balanced then it would be mostly green! That's the issue - i would like RAW indicators, not after they've been matrixed. I don't know enough about ProRes RAW, i didn't think it was part debayered, just BRAW that was. Just using the HDMI as a digital transport to a recorder that can not only record the DNG data, but debayer for remote display makes sense. I believe the HDMI bandwidth is plenty high enough to take any data off the camera. cheers Paul -
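For what it's worth, the kind of RAW traffic light check described here is simple to sketch on the un-matrixed mosaic. This assumes an RGGB Bayer layout and a known white level, both of which are assumptions for the example rather than anything confirmed about the fp; the function name and threshold are made up too.

```python
def clipped_channels(mosaic, white_level, threshold=0.001):
    """Report which RAW channels have clipped, before any white balance.

    mosaic: rows of raw integer samples in an assumed RGGB layout
            (even rows R G R G..., odd rows G B G B...).
    white_level: raw value at which a photosite is considered clipped.
    threshold: fraction of a channel's photosites that must be clipped
               before the light for that channel comes on.
    """
    totals = {"R": 0, "G": 0, "B": 0}
    clipped = {"R": 0, "G": 0, "B": 0}
    for y, row in enumerate(mosaic):
        for x, value in enumerate(row):
            if y % 2 == 0:
                channel = "R" if x % 2 == 0 else "G"
            else:
                channel = "G" if x % 2 == 0 else "B"
            totals[channel] += 1
            if value >= white_level:
                clipped[channel] += 1
    return {ch: clipped[ch] / totals[ch] > threshold for ch in totals}


# Tiny 2x2 example: both green photosites are at the white level.
lights = clipped_channels([[1000, 4095], [4095, 500]], white_level=4095)
print(lights)
```

Because it looks at the raw samples, the result is independent of white balance and of whatever LUT is used for the monitoring image.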

Sigma Fp review and interview / Cinema DNG RAW

paulinventome replied to Andrew - EOSHD's topic in Cameras

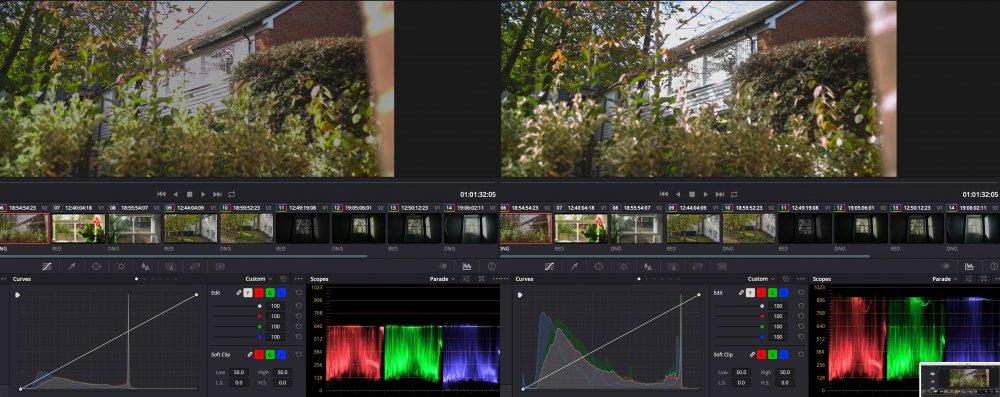

As i understand it, if Atomos will be recording RAW over HDMI then the HDMI signal will be data, not image. The Atomos recorder would then debayer and display with whatever tools they have onboard for that. So monitoring whilst recording would be a function of the display, not camera? (Maybe the camera LCD would display anyway). It's basically just dumping data down the HDMI connection rather than USB. This would be super useful. I would never monitor in log, although i don't think you're saying that? The idea of monitoring through a LUT is great, in the past i've generated LUTs including false colouring for specific exposure regions. But monitoring RAW is more difficult - what i really want is, no matter what the image is showing, to see when each of the RAW channels has clipped, simple as that. Then i can make a judgement based off that. With limited bit depth and container sizes the whole ETTR becomes an issue again. If it was 16 bit RAW then sure, do the right thing and expose for mid grey and give the editor a break, but i feel with this camera that highlight exposure becomes important again. The enclosed screenshot shows the difference with highlight reconstruction on and off. I knew that not all the raw channels had clipped so was pretty confident about this. If this was a log format then you'd be dealing with baked in white balance and you would not get these highlights. So for monitoring, happy to have an image to focus by, but with the knowledge of what the actual sensor is seeing. The image is stress testing, i'm side by siding with a Red Epic-W so i can find the best mid ground settings between them. The fp is a fantastic little camera, no doubt. I'm having issues with colourspaces though (workflow i think) and the range is not anywhere close to the Red. But for its size and cost it's amazing! The ability to do this reconstruction is scene dependent. You basically need to have a spread of channels where one or two may have clipped but one hasn't. 
Skies are great for this as red usually doesn't clip so much. Some cameras employ a trick where one of the G pixels is less sensitive too. cheers Paul -
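To show why reconstruction needs at least one unclipped channel, here is a deliberately naive sketch. It assumes the highlight is roughly neutral after white balance and lifts any clipped channel to the level of the surviving ones. This is not what Lightroom or Resolve actually do (i don't know their algorithms); the function name and values are illustrative only.

```python
def reconstruct_highlight(rgb, clip):
    """Naive highlight reconstruction for one white-balanced linear pixel.

    rgb:  (r, g, b) after white balance gains have been applied.
    clip: per-channel clip level after the same gains (they differ per
          channel because each channel gets a different gain).
    Assumes the highlight is close to neutral, so a clipped channel is
    raised to the mean of the unclipped ones, never below its clip level.
    """
    is_clipped = [v >= c for v, c in zip(rgb, clip)]
    if all(is_clipped) or not any(is_clipped):
        return tuple(rgb)  # nothing to recover from, or nothing to fix
    survivors = [v for v, flag in zip(rgb, is_clipped) if not flag]
    estimate = sum(survivors) / len(survivors)
    return tuple(max(v, estimate) if flag else v
                 for v, flag in zip(rgb, is_clipped))


# Sky example: green and blue have clipped, red has not.
clip_levels = (2.0, 1.0, 1.5)   # hypothetical gains of 2.0, 1.0, 1.5
print(reconstruct_highlight((1.4, 1.0, 1.5), clip_levels))
```

When every channel has clipped the function returns the pixel untouched, which is the scene dependence described above: no surviving channel, nothing to rebuild from.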

Sigma Fp review and interview / Cinema DNG RAW

paulinventome replied to Andrew - EOSHD's topic in Cameras

Yes, drop them a line and ask. That's what i mean. Doesn't have to be log, the values they choose can match the data better - it could be a log with an s curve to distribute mid tones better. We will never see that curve, we will only ever see the results after the curve. Whilst the ADC is 14 bit i am pretty sure that the mode they are running the sensor in is 12 bit output for motion. And a 10 bit DNG with a linearisation table would hold everything a 12 bit linear file could - i see no reason for a 12 bit option at all. I think 4096 across should be an option though, even if it's scope (4096 x 1716) or 2:1 (netflix style 4096 x 2048) - the overall data rate would be roughly the same as UHD. A couple of wish list items, i think. Yes, a user LUT to output baked formats would be useful in some cases. But tools to expose for RAW better - i'm still trying to wrap my head around the zebra at 100% and what that actually means. cheers Paul -
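A quick back-of-envelope check on the "roughly the same data rate as UHD" claim. This sketch assumes uncompressed Bayer data with one sample per photosite and ignores file headers and audio; the 10 bit and 25 fps figures are just picked for the comparison, and the function name is made up.

```python
def data_rate_mb_per_s(width, height, bits_per_sample, fps):
    """Uncompressed Bayer data rate in megabytes per second.

    One sample per photosite; headers, audio and packing overhead ignored.
    """
    return width * height * bits_per_sample * fps / 8 / 1_000_000


formats = {
    "UHD": (3840, 2160),
    "4096 scope": (4096, 1716),
    "4096 2:1": (4096, 2048),
}
rates = {name: data_rate_mb_per_s(w, h, 10, 25) for name, (w, h) in formats.items()}
for name, rate in rates.items():
    print(f"{name}: {rate:.1f} MB/s")
```

The 2:1 frame comes out about 1% above UHD and the scope frame well below it, so neither wider format should need more bandwidth than the camera already manages.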

Sigma Fp review and interview / Cinema DNG RAW

paulinventome replied to Andrew - EOSHD's topic in Cameras

Nice find Lars. Log profile is utterly irrelevant on this camera and it won't expand the dynamic range. You have the RAW output - you will never get better than that. This is the whole point of this camera... RAW via HDMI is a nice idea. cheers Paul -

Sigma Fp review and interview / Cinema DNG RAW

paulinventome replied to Andrew - EOSHD's topic in Cameras

I've been pushing and pulling the 8 bit DNGs in Lightroom, comparing to 12 bit FHD and also Sony A7sII stills. Whilst i can see the limits in the shadows, as there are no compression artefacts, what you get is remarkably usable. Pushing something 5 stops over to compare shows the 8 bit DNGs really not that far from the Sony RAW and 12 bit versions. So i'm running to a 128GB SD Card (ProGrades) and have yet to really break the image. This is less to do with the sensor and more to do with no compression. That's the key, compression kills everything. So i don't know what you're planning on shooting, but at the moment i'm skewing towards 8 bit. 10 bit linear is no use whatsoever. 12 bit 24p would make a difference but i can't use 24p. As i mentioned above, if we users can drop sigma a line and show how much we want 10 bit DNG with a linearisation table then that would be the sweet spot and i would externally record. If we're lucky they could experiment with writing 10 bit to SD card as well... And maybe do some other frame sizes... (4096 DCI widescreen!) cheers Paul -

Sigma Fp review and interview / Cinema DNG RAW

paulinventome replied to Andrew - EOSHD's topic in Cameras

Thanks Lars & mechanicalEYE Yes, for some reason they're not in stock in the UK, so waiting on a backorder. Even so, having a whacking great VF at the back kind of negates the beauty of such a small camera. More so for stills - i was hoping maybe it would replace my A7sII but not so sure now. For cine use this would mostly be rigged onto things, especially as it is so light, so it's not so much of an issue. Might even be rigged for wireless video and FF. cheers Paul -

Sigma Fp review and interview / Cinema DNG RAW

paulinventome replied to Andrew - EOSHD's topic in Cameras

I succumbed and bought one. I will test more fully over the next week. Ergonomically from a stills view it's very much an urgh. No EVF makes life very difficult for me but i do have the VF on order so maybe that will change things. Cine mode. DNG. It's heaven. This is all you need from a camera. I've side by sided UHD 8bit onto SDXC vs A7sII UHD Slog2/Sgamut and it's no joke. It's almost comical. The low end and the highlights are literally playing in different ballparks. What crushes the A7sII footage is the compression. Simple as that. So that 8 bit UHD is remarkably good. I'm pushing and pulling it and comparing to the same thing shot FHD in 12 bit and it's incredibly robust - so much more than it should be. Over the next week i'll push and pull properly. I got it as I needed a lightweight B cam to my Red for a shoot - so i need to intercut and i'll be finding its comfort zones side by side with everything. So far the best Resolve post is in ACES. My fear with going into any 709 space is that the camera native spectral response will be outside of 709. All Sony sensors push red way out and it's easy to see with bright red subjects. If you don't debayer into a larger colourspace then those reds get clipped or mushed into gamut. I don't know enough about DNG support in Resolve but visually into ACES appears much better. The only other valid option i think is Linear in, certainly none of the BMD Presets as they're for very different sensors. Cheers Paul -

Sigma Fp review and interview / Cinema DNG RAW

paulinventome replied to Andrew - EOSHD's topic in Cameras

This all largely depends how you have colour management set up. What colourspace is your timeline set to? For example most of the grading controls, especially secondaries, are really designed to work in gamma space (like 709 or similar). If your timeline is set to a log space then pulling keys is very difficult. What you can do though is right click on each node and choose a custom space and gamma on a per node basis, this is a godsend if you're mixing approaches in a single tree. Gaining in log space is different to gaining in Linear. Printer lights as a concept AFAIK are really a linear gain operation. In log space an Add is the same as a gain in Linear, but log curves usually have a toe, in which case all bets are off. Whilst linear space (32 bit float) can bring its own issues, if you are doing real world photographic operations, exposure, blurs and so on then linear is the only space you should be in. cheers Paul -
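The "Add in log equals gain in Linear" point is just the log identity log2(x * g) = log2(x) + log2(g). A minimal sketch, assuming a pure log2 curve with no toe or offset (which no real camera log curve is, so treat it as the ideal case only); the function names are made up:

```python
import math


def to_log(linear: float) -> float:
    """Idealised log encoding: pure log2, no toe, no offset."""
    return math.log2(linear)


def to_linear(log_value: float) -> float:
    return 2.0 ** log_value


def gain_in_linear(linear: float, stops: float) -> float:
    """Exposure change as a multiply in linear light."""
    return linear * 2.0 ** stops


def offset_in_log(log_value: float, stops: float) -> float:
    """The same exposure change as a plain add in log space."""
    return log_value + stops


mid_grey = 0.18
via_linear = gain_in_linear(mid_grey, 1.0)
via_log = to_linear(offset_in_log(to_log(mid_grey), 1.0))
print(via_linear, via_log)
```

Both routes land on the same value here. Once the curve has a toe the add no longer maps to a clean multiply near black, which is the "all bets are off" case.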

Sigma Fp review and interview / Cinema DNG RAW

paulinventome replied to Andrew - EOSHD's topic in Cameras

I can imagine. I find it very difficult and unnatural to shoot via a screen these days. I agree and find it an odd move to not even make a nod to an EVF connection at a future point. I think that will be a negative for stills photographers and it's a bit expensive for the point and shoot crowd. But once i hear back from sigma about the nitty gritty of what they may alter in firmware with the recording formats i will decide whether to grab one as a B/C Cam. How bright is the screen? @Lars Steenhoff That's a nice looking video for once, sigma should really push that one. Although i see flicker in some of the longer shots and the grade is a bit all over IMHO but certainly one of the best ones so far. cheers Paul -

Sigma Fp review and interview / Cinema DNG RAW

paulinventome replied to Andrew - EOSHD's topic in Cameras

I'm sure that optical one works well. Just that it's not really in line with having a small FF camera. I can imagine for video work it makes more sense because you have a point of contact to help but for day to day stills not so much. The 3rd party ones are all too big, i'm looking for something like the Sony EVF, a tiny attachment that wouldn't be out of place on that body. But i cannot see a HDMI based one, maybe the HDMI hardware is too big. I think once you're used to an EVF it is difficult otherwise, both for stills and cine shooting - especially in daylight. I fired off a bunch of tech questions to sigma and they've apologised for taking so long to get back to me, so hoping to hear soon. Then i will decide whether to grab one and see for myself thanks Paul

What have you heard and from where? It could be the case but the jury is out on that. I am assuming the mode they're running the sensor in is 6K FF at 12 bit (from the sensor spec sheet). If they were binning/skipping i'd expect better frame rates. So i hope that they are reading the whole sensor, then downsampling, then bayering and storing - hence the lack of OLPF. I think their bottleneck is data transfer speeds - hence the 8 and 10 bit DNGs. Guessing this is the beast https://www.sony-semicon.co.jp/products/common/pdf/IMX410CQK_Flyer.pdf You can see the HD and 4K modes but i have no idea what they could actually be doing inside. Also from that spec sheet it isn't clear if Active Pixels means a crop of the sensor - i sort of assumed it did. cheers Paul -

Sigma Fp review and interview / Cinema DNG RAW

paulinventome replied to Andrew - EOSHD's topic in Cameras

Reading between the lines of how they're downsampling 6K to 4K i suspect it will help with moire situations as well. Certainly in the DNGs i've seen, with water and highlights it seems remarkably smooth. C5D had a frame with moire though. One thing i find about the image so far (from samples) is that it's naturally quite soft, in a nice way. Not digitally sharpened or anything. The sort of thing i like about Reds for example. I'm close to just biting the bullet. On a practical basis though the lack of an EVF is my biggest issue. I don't know how that loupe thing will work and whether it's practical or not. I like the size of the camera but going back to focusing off a screen in daylight... Rather not. Are there any small HDMI based 3rd party EVFs? cheers Paul -

Sigma Fp review and interview / Cinema DNG RAW

paulinventome replied to Andrew - EOSHD's topic in Cameras

Thanks so much, others have dived into this earlier and i'm still looking. @rawdigger The 8 bit file is not linear, each DNG has a linearisation table which is the correct thing to do. I wanted to find out whether the 10 bit did the same and it seems that it might not. This means ironically that the 8 bit files in day to day use are probably going to be better quality (make better use of a limited format) than the 10 bit. As we know in linear the very last stop of light uses half the available values and the first 3 stops are represented by just 7 different values... If there is one thing we should do - it is to petition sigma to encode the 10 bit files with a table like the 8. That way we can achieve the same sort of quality as the 12 bit files. Of course this is assuming they are not reading the sensor at this point in 10 bit mode which is one of the sensor modes possible. IMHO this is a show stopper for me getting one as a B cam. 12 bit is only 24p and i need 25 at least. I am waiting for clarification from sigma themselves but if others can chime in then maybe we can get them to consider doing this, i can't imagine it is *that* much work. As for downsampling i am guessing they are scaling the RGGB layers before bayering it. I think by doing this they are making up for a lack of OLPF which is why the detail is lower. I am so close to getting one as it's really what i am looking for, a sensor in a box that gives me pretty much what it sees. But there are a few caveats to sort through! cheers Paul -
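To illustrate why a shaped 8 bit file can beat a plain linear one, here is a sketch that squeezes 12 bit linear values into 8 bits two ways: straight truncation, and a curve with a matching decode table of the kind a DNG LinearizationTable would hold. The square root curve is purely an assumption for illustration (the actual curve sigma uses is not something i have), and the function names are made up.

```python
import math

MAX_12 = 4095
MAX_8 = 255


def encode_linear(linear_12: int) -> int:
    """Plain truncation of 12 bit linear to 8 bit linear."""
    return linear_12 >> 4


def encode_shaped(linear_12: int) -> int:
    """Hypothetical square root shaping curve, 12 bit in, 8 bit out."""
    return round(MAX_8 * math.sqrt(linear_12 / MAX_12))


# Decode side: one 12 bit linear value per 8 bit code, i.e. the kind of
# lookup a linearisation table stores alongside the shaped data.
linearisation_table = [round(MAX_12 * (code / MAX_8) ** 2) for code in range(MAX_8 + 1)]


def codes_used(encode, low: int, high: int) -> int:
    """How many distinct 8 bit codes cover the 12 bit range low..high."""
    return len({encode(v) for v in range(low, high + 1)})


top_stop_linear = codes_used(encode_linear, 2048, 4095)
top_stop_shaped = codes_used(encode_shaped, 2048, 4095)
shadows_linear = codes_used(encode_linear, 1, 127)   # the seven darkest stops
shadows_shaped = codes_used(encode_shaped, 1, 127)
print(top_stop_linear, top_stop_shaped, shadows_linear, shadows_shaped)
```

The shaped version spends fewer codes on the brightest stop and several times more on the shadows, which is the same trade a table-encoded 10 bit file would make against 12 bit linear.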

Sigma Fp review and interview / Cinema DNG RAW

paulinventome replied to Andrew - EOSHD's topic in Cameras

Thanks for the DNGs, it's really useful to compare the same frame in different modes. The two you did show that it is scaling the full width of the sensor and that the shadows in the 8 bit version are green. But overall a really interesting comparison. It would be super useful to do that with something that has dark to clipping in the scene and 8, 10 and 12 bit with a 14 bit stills control... But yeah, a bunch of work that you may have better things to do with your time! Cheers, Paul -

Sigma Fp review and interview / Cinema DNG RAW

paulinventome replied to Andrew - EOSHD's topic in Cameras

There's a 3.5mm jack for mic input. Normally you'd stick a Tentacle or similar in there for timecode sync. There's no headphone jack though, but if you can get timecode into it then that makes it very easy cheers Paul -

Sigma Fp review and interview / Cinema DNG RAW

paulinventome replied to Andrew - EOSHD's topic in Cameras

I think the HD ones look very soft. I know you've some super shallow DOF going on but compared to the 4K it's quite noticeable. I guess this is a factor of either the binning or scaling in camera. Not wanting to sound like a broken record - but have you tried 10 bit? After all at 4K the only way you'd get 25 or 30p is via 10 bit at UHD...? Also as a stills camera if you shoot fast action how bad is the rolling/electronic shutter? cheers Paul -

Sigma Fp review and interview / Cinema DNG RAW

paulinventome replied to Andrew - EOSHD's topic in Cameras

To be expected, but have you had a chance to compare 10 bit? With a decent curve that could be as good as 12, and it has more frame rate options. Those new videos above show some horrific highlight clipping - i wonder what the workflow was with them... cheers Paul -

Sigma Fp review and interview / Cinema DNG RAW

paulinventome replied to Andrew - EOSHD's topic in Cameras

Actually having the RAW files in vfx is very useful, i can develop the RAW in different ways to maximise the keying possibilities. Producing images that look awful to the eye, have soft edges, but have better colour separation in. So actually whilst a lot of pipelines do work the way you say - they shouldn't ideally.... +1 on working with frames, especially EXR. One benefit is if a render crashes halfway through, you still have the frames from before and can start where you left off. That's a real world thing! cheers Paul
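The "start where you left off" benefit is easy to automate. A minimal sketch, assuming frames are named like `shot.0001.exr` (the naming pattern and function name are made up for the example): work out which frame numbers are still missing from the output folder listing.

```python
import re

# Hypothetical naming convention: <name>.<frame number>.exr
FRAME_PATTERN = re.compile(r"\.(\d+)\.exr$")


def frames_to_render(existing_files, first, last):
    """Return the frame numbers in first..last that have no file yet."""
    done = set()
    for name in existing_files:
        match = FRAME_PATTERN.search(name)
        if match:
            done.add(int(match.group(1)))
    return [frame for frame in range(first, last + 1) if frame not in done]


already_on_disk = ["shot.0001.exr", "shot.0002.exr", "notes.txt"]
print(frames_to_render(already_on_disk, 1, 5))
```

One caveat: a crash can leave the last frame half written, so in practice it is worth re-rendering the final existing frame or checking file sizes before trusting it.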