Everything posted by Sage

-

There were some things that looked more right to me, like the boxing scene. I think that's a nod in the right direction toward LogC+709. The yellows, greens, and clipping, for example, didn't quite do it for me, but I am on a terrible blue TFT laptop screen, which is all I have access to at the moment.

-

Sure; it depends on what you'd like. Fuji and BMD have very good, usable color. I personally will use the P4K with the GH5; I hope to have EC ready to do a production this summer. 8-bit is tricky; I'd prefer to only support 10-bit cameras, though I will try to find a way that will give viable production-level results with 8-bit. I think Log is not really in the cards there.

Bit of really cool news: last weekend, the director of a film starring Josh Hartnett wrote to me to say that they were using the GH5 with EC as a pick-up cam alongside Alexa Minis and XTs. He said that a senior Star Wars colorist was doing the grade, and that 'everybody was very impressed' with it. I was pretty thrilled with that.

-

I want to make true XT3 support a reality this year. I would recommend hanging in there. Even now, I am still working on the 5.

-

Sure; from what I've seen, the G7 had a notably higher gamma curve than the GH5 in Cine-D, all things being the same. I corrected this with a hue-locked downward gamma shift in advance of the conversion. I don't know if this is the same for the G9, but your description of working with it seems to suggest this may be the case.

Your results are quite good. There were just a few points of rougher rolloff than I would expect from a reference GH5 pipeline. I'd recommend neutralizing the WB ahead of everything (even the Pre now), and then restoring the cast after everything. This will allow 'correct', clean Arri color underneath, and is the optimal route. I took the DPX and did a slight initial WB from the white window frame in advance of the Pre, and then followed the conversion with a slight luminance drop to conform to day skintone. It was well shot. By way of a silly example, here is a 'blue restoration':

-

100% certain; I would call it the #1 camera. Expect something soon; that said, can you live without IBIS? I think I will keep both.

Stunning! I wouldn't have thought a G9 would look so good; it looks like no G9 I've seen.

-

The part that I'm stuck on is how one would navigate a multidimensional array from the pointer. For example, is there any way to do something like array * [3][14][7][12]?

Someday, I want to learn Python. I've got to master C++ first though. I just bought Stroustrup's A Tour of C++.
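One common way to approach this, sketched here under the assumption that the array is a single contiguous row-major block of floats, is to compute the flat offset yourself and index the base pointer with it. The helper name `flatIndex4` and its parameter order are made up for illustration.

```cpp
#include <cassert>
#include <cstddef>

// Hypothetical helper: row-major offset of element [i][j][k][l] in a block
// whose trailing extents are d1 x d2 x d3 (the leading extent is not needed
// to compute the offset).
inline std::size_t flatIndex4(std::size_t i, std::size_t j, std::size_t k, std::size_t l,
                              std::size_t d1, std::size_t d2, std::size_t d3) {
    assert(j < d1 && k < d2 && l < d3);      // catch out-of-range inner indices
    return ((i * d1 + j) * d2 + k) * d3 + l; // same layout a built-in 4D array uses
}

// Read or write through a plain pointer to the first element.
inline float& at4(float* base,
                  std::size_t i, std::size_t j, std::size_t k, std::size_t l,
                  std::size_t d1, std::size_t d2, std::size_t d3) {
    return base[flatIndex4(i, j, k, l, d1, d2, d3)];
}
```

For a 4 x 16 x 8 x 16 block, `at4(base, 3, 14, 7, 12, 16, 8, 16)` touches flat element 8060, which is the same element `array[3][14][7][12]` would name.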

-

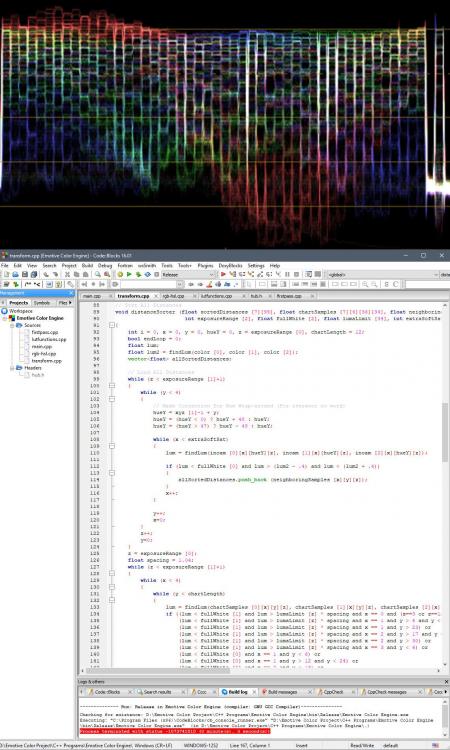

For the C++, there is a problem I'm trying to solve. Giant 4D arrays aren't supposed to be declared on the stack like this, but I haven't figured out how to conveniently pass them to functions any other way yet (vector pointer 'arrays' can only be 2D, etc.).
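A possible way around the stack problem, offered as a sketch and not as how the EC program actually does it, is to keep the whole 4D block in one heap-allocated `std::vector` and wrap the index math in a small class. The class name `Grid4` and the function `total` are hypothetical.

```cpp
#include <cassert>
#include <cstddef>
#include <numeric>
#include <vector>

// Hypothetical wrapper: one contiguous heap allocation viewed as a 4D array.
class Grid4 {
public:
    Grid4(std::size_t d0, std::size_t d1, std::size_t d2, std::size_t d3)
        : d0_(d0), d1_(d1), d2_(d2), d3_(d3), data_(d0 * d1 * d2 * d3, 0.0f) {}

    // g(i, j, k, l) plays the role of array[i][j][k][l].
    float& operator()(std::size_t i, std::size_t j, std::size_t k, std::size_t l) {
        assert(i < d0_ && j < d1_ && k < d2_ && l < d3_);
        return data_[((i * d1_ + j) * d2_ + k) * d3_ + l];
    }

    std::size_t size() const { return data_.size(); }
    const std::vector<float>& raw() const { return data_; }

private:
    std::size_t d0_, d1_, d2_, d3_;
    std::vector<float> data_; // lives on the heap, so large sizes are fine
};

// Passing by reference hands the function the whole grid without copying it.
inline float total(const Grid4& g) {
    return std::accumulate(g.raw().begin(), g.raw().end(), 0.0f);
}
```

With this, `Grid4 g(4, 16, 8, 16); g(3, 14, 7, 12) = 2.5f;` allocates 8192 floats on the heap, and any function taking `Grid4&` or `const Grid4&` can work on them directly.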

-

EC was made by filming a large number of color samples with both cameras, then extracting coordinate data from those samples and supplying that data to my own C++ program. As channel clipping occurs, the program attempts to straighten hue lines to follow those of the higher DR camera. There is a point at which those hue lines merge during clipping, such that clipped red is identical to yellow, for example. When these regions are detected, they are reduced to near grayscale data.
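To make the last step concrete, here is a rough illustration of fading a pixel toward gray as it approaches clipping. This is not the EC algorithm: the detection here is a simple stand-in (brightest channel crossing an assumed `knee` value), the Rec. 709 luma weights are an assumption, and all names are invented for the example.

```cpp
#include <algorithm>
#include <cassert>

struct Pixel { float r, g, b; };

// Assumption: Rec. 709 luma weights define the gray target.
inline float luma709(const Pixel& p) {
    return 0.2126f * p.r + 0.7152f * p.g + 0.0722f * p.b;
}

// Hypothetical stand-in for clipped-region handling: once the brightest
// channel passes `knee`, blend the pixel toward its own luma, reaching
// full gray at `clip`.
inline Pixel desaturateNearClip(const Pixel& p, float knee = 0.9f, float clip = 1.0f) {
    assert(clip > knee);
    const float peak = std::max({p.r, p.g, p.b});
    float t = (peak - knee) / (clip - knee);
    t = std::min(1.0f, std::max(0.0f, t)); // 0 below the knee, 1 at or above clip
    const float y = luma709(p);
    return { p.r + (y - p.r) * t,
             p.g + (y - p.g) * t,
             p.b + (y - p.b) * t };
}
```

A pixel well below the knee passes through unchanged, while a fully clipped red such as `{1.0f, 0.2f, 0.0f}` comes out with all three channels at essentially the same value.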

-

They look great. Could you share the original HLG still of this one?

-

Hey guys, just wrapped up the shoot I was on. For HLG + Resolve, keep the settings at default. The Pre is the CST, and is preferable as it is measured. One would use either CST/Color Management or the Pre, not both.

-

Thank you for the kind words! It may be a minute, so be sure to hang on to your 5 for the time being. When a new camera is supported, it must be supported absolutely, sans compromise (on par with the 5).

-

There are several variables that combine in skintone: color separation, saturation model, skin color, light color spectrum, white balance, and lens coloration.

Color Separation: Good cameras have color separation. Less ideal color science will have 'accordioned' hues, compressing and expanding arbitrarily throughout the hue circle. For skintone under quality light, the Alexa will have great separation between the pink aspects of the face (lips, blush) and skintone, which rides the line between too green and too magenta under flat-spectrum light.

Saturation Model: Photometric saturation models (like Alexa LogC+R709 or EC LogC+R709) have less saturation (the video model). Saturation amplifies hue difference, so photometric models can make skin tones seem more alike. The EC variations emphasize saturation (film backend), which foregrounds skin hue.

Skin Color: The skin of the talent may be slightly more olive (dark and yellow in hue) or pale with pink as the primary hue accent (all vary towards magenta with emotion). Here is a recent still from the film I've been shooting, with two people in the same frame that had divergent skin hues:

Light Color Spectrum: On the Alexa (and cameras generally), filming under light that has a spiky color spectrum (LEDs, fluorescents, sodium vapor, etc.) can cast skintone distinctly to green or magenta, even with correct white balance.

White Balance: A fully neutral WB is vital for EC, not only in skintone, but for the whole of color space accuracy. Skintone is one of the reliable indicators that WB might be off on the green/magenta axis when under reference light without a white card reference (it should anchor on the skintone line of the vectorscope under sunlight and halogen). A common occurrence is that WB will be set for direct sun on the GH5 (possibly with the WB sun preset), and then the shoot will move into the shade, which will shift the WB towards blue/magenta (this needs a new WB, or correction in post ahead of the conversion).

Lens Coloration: Interrelated with WB is lens coloration, which is like a WB that variably shifts throughout the grayscale [For EC: it is optimal to WB around middle gray, or a little brighter for the most salient part of the image]. This can vary from minimal coloration (Sigma 18-35) to heavy coloration (for example, Xeens).

NDs: The reference EC ND is the Firecrest FSND, which evenly cuts the full spectrum (visible/invisible). Non-FSNDs (even IRNDs) will have a varying ratio between IR/UV and visible, which can unpredictably shift sensor response to a given light (interacting with the camera sensor's native cut). Variable IR ratio particularly acts on the green/magenta axis.

Notably, EC Tungsten does have a slight bias towards magenta in the skintone region compared to the Alexa. I've figured out what in the data was causing this problem (IR leak every third exposure slice), and this will be solved for the next release.

-

Ah very cool. Looking forward to it!

-

Very cool, they will be very pleased. Do you have any work online? Jamy Hang just shared these, one still from his film, and the others from fashion:

-

Stunning piece from Keith Hammond:

-

Sure, glad I could help!

That's unfortunate. @CaptainHook, it really needs fixing. I have an idea for an in-cam ProRes fix once it's in hand, but it depends on how things are implemented therein.

-

Hey Grimor, can you send me DPX (with empty Lumetri applied) stills of your raw footage, and JPGs of the final image? My email is emotivecolor (at) gmail (dot) com.

No worries; no matter which non-Panasonic camera I support next, the S will be of equivalent priority and released simultaneously, or shortly thereafter. From what I've seen, the S is a touch warmer.

-

Another factor is that everyone will have the reference NLE; that really appeals to me. It's hard to beat that luma curve + hue lock out workflow.

-

Not a bad way to go! The most appealing quality of Unreal was the photorealism; granted, I was one to get a GTX 1080 at release to do a 4K downsample to the CV1. Have you tried Dreadhalls? I remember that as being an algorithmically rendered dungeon (unique every time), and it was pretty scary.

-

That's the way it's done. I dabbled in that arena with the DK2 a few years back; my feeling was that the new Unreal Engine 4 at the time was the way to go. It's a free platform, with uncompromising graphic fidelity, integrated VR handling, and exposed C++ source if you want. I will be getting back in with the CV2.

-

You have a point there.. at the very least, they seem responsive haha. I've been having an email conversation about supporting it. The core differentiator is price; the P4K would (theoretically at this point) be a lot more accessible. I like the idea of being able to opt for premium codec/low-light with the P4K or IBIS with the 5 at that price range (perhaps one day, both)

-

That's the plan - they just refuse to sell me one.

I wonder if they have addressed the red clipping issue with the update? That's my central concern.

-

Methinks it would largely work, given what I've seen from other VLog cameras (much more so than, say, NLog). That said, I'd like to do a version for the S1, as that would be fully accurate and tailored to its image.

-

With this style, I felt that the montage with the falling piano motif would be ideally paired with slow motion shots interspersed - the same rotating shots of the characters, but in slow motion, the directional motion of each shot feeding into and informing the next.

Second, for the ADR/dialog at the end, it would be optimal to record in an environment similar to the one shown, for the correct reverb (space). Key is the placement of the mic relative to the mouth - not too close, but not too far. That is, to have a sense of the reverb tail without overpowering the voice with reverb, or the 'deadness' of right in front of the mouth. For the existing dialog, one could maximize realism by EQing out a bit of bass (~120Hz region & below) and adding a slight reverb tail to add 'space' to the sound (testing reverb settings until it feels fitting for the space on screen).

-

One could potentially 'reverse engineer' the ideal settings by finding the settings to match 4K to 6K's smoothness, and then 'subtracting' that from the settings required to match sharpened 4K to the Alexa. The 6K mode is one of the things potentially on my list to look at; I would also need to dial in a Pre for Premiere, as I believe that there will be unique distortion for H.265 formats. Also, while I haven't used it, @deezid mentioned to me that there were some compression issues at 6K.

@katlis @Wild Ranger Those stills look amazing. I should mention that with digital diffusion, my impression was that it didn't rise to the level of optical diffusion quality. Granted, the last time I seriously looked at the question was a solid decade ago. I believe that when there is already some degree of optical diffusion, digital diffusion can supplement that nicely. The Takumar primes have a nice diffusion, and having lenses with inherent diffusion sidesteps the 5's inverse sensor reflections problem.