
Everything posted by Axel

-

On Creative Cow, someone found that the Color Wheels do work properly (and really well) in a rec_2020 project, whereas in rec_709 they are a mess. Best advice: stay away from the wheels if you are grading for rec_709! Nobody knows what will happen when this gets fixed in the next update. You will very probably lose all your rec_709 color corrections in existing projects ...

-

Yes, Don, you're right. It's just funny that everybody raves about the long-awaited wheels without noticing that they offer less than the iMovie-ish Color Board. Or without admitting it. I asked Mark Spencer in the comments on his Ripple Training tutorial, and he answered: "All color adjustments are iterative." Maybe over time we will all realize the ingenuity of those wheels, because getting it right in many time-consuming increments may be the most precise way to do it. This reminds me of a children's book of mine, in which two guys want to share a sausage. The smarter guy says: it's not exactly half, let me bite off the difference. In the end, of course, he has eaten the whole sausage.

-

A serious warning concerning the new Color Wheels. You might already have sensed that they behave somewhat strangely. Now Simon Ubsdell has published a video explaining that they don't use the traditional lift/gamma/gain controls (like the color wheels in Resolve, in Apple's Color, in Lumetri and in every other CC program). This renders the tool completely useless for efficiently adjusting contrast in your image; you still need the Color Board for that (or you have to set the necessary range limits manually in Color Curves). Comments were deleted overnight, not sure why. I posted this on fcp.co yesterday, and the post was almost completely ignored. I think everybody should be aware of this.
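
For reference, this is roughly what "traditional" lift/gamma/gain means. The sketch below is one common textbook formulation, not the actual math inside FCP, Resolve or Lumetri (each app uses its own variant), and the function name is made up for illustration. The point is the weighting: lift acts mostly on the shadows, gamma on the midtones, gain on the highlights, which is what lets you shape contrast range by range.

```python
def lift_gamma_gain(x: float, lift: float = 0.0, gamma: float = 1.0, gain: float = 1.0) -> float:
    """One common textbook lift/gamma/gain formula for a value normalized to 0..1.

    Hypothetical helper for illustration; not any specific application's implementation.
    """
    if gamma <= 0:
        raise ValueError("gamma must be positive")
    # Lift: moves black by `lift`, with less and less effect towards white (none at 1.0).
    lifted = x + lift * (1.0 - x)
    # Gamma: bends the midtones while 0.0 and 1.0 stay put.
    # Negative intermediate values are clamped so the power stays real-valued.
    bent = max(lifted, 0.0) ** (1.0 / gamma)
    # Gain: scales white by `gain`, with less and less effect towards black (none at 0.0).
    return gain * bent


if __name__ == "__main__":
    for x in (0.0, 0.25, 0.5, 1.0):
        print(x, lift_gamma_gain(x, lift=0.1), lift_gamma_gain(x, gain=1.2))
```

A control without this kind of weighting moves the whole tonal range at once, which is why contrast work with it becomes a matter of many small iterations.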

-

You didn't mention whether you edit with FCP, which uses AVFoundation. Since Yosemite, allegedly, the whole OS (and iOS) uses it as its framework. And indeed, Finder / Preview / QuickTime Player now play back a greater variety of video files (they used to reject naked, unwrapped MTS clips, for instance), but specifically not some of the 10-bit GH5 files (and others). FCP accepts them nonetheless. The very same version of the third-party app Kyno used to repeat the Finder warning ("unsupported file type, unable to play back" or similar), but now miraculously (just after a restart of the app) shows them, reluctantly, you could say. HLG clips are still not supported in Kyno 1.4. Don't know about EditReady.

As far as transcoding to ProRes with third-party apps is concerned, I have been aware of possible downsides ever since the once-popular 5D2RGB transcoder, which often didn't interpret the range of non-Canon footage correctly, resulting in degraded clips. Many players (= decoders), VLC for example, accept the underlying H.264 but ignore proprietary metadata for, say, XAVC with its own color science of x.v. (or whatever is implemented). Many wondered why Premiere first played back GH5 10-bit clips, then stopped doing so after the following update, and then officially supported them with the next one. Official support means (or is supposed to mean) that the data are interpreted correctly. It may very well be that if you don't optimize using FCP but EditReady instead, you end up with a cropped set of data: Furthermore, 10.4 on High Sierra also supports (or so it seems) 5K HEVC from the GH5. Of course, unless you have a 10-core iMac Pro, you'd be wise to optimize:

I too love Finder tags. Those and macOS smart collections (more powerful "folders") really make my life easier. But I think FCP is the best app for previewing video. It has a skimmer, it has tags, just everything is better than in Preview. How does that help me find my clips in Finder later on? This is where FindrCat comes in. I think it's some 25 bucks or so, can't remember. It's a riddle and a shame, though, that FCP tags don't become Finder tags automatically!
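
To illustrate what "not interpreting the range correctly" means in the 5D2RGB case above: most camera codecs store luma in video ("legal") range, 16 to 235 at 8 bit and 64 to 940 at 10 bit, while some store full range, 0 to 255. If a transcoder guesses wrong, blacks turn milky or shadows and highlights get clipped. A minimal sketch of the two interpretations, for luma only (chroma uses 16 to 240 in video range and is left out); the function names are hypothetical, and this is a generic illustration, not what 5D2RGB or any other app does internally.

```python
def video_range_to_normalized(code: int, bit_depth: int = 8) -> float:
    """Interpret a luma code value as video range: black=16, white=235 at 8 bit (64/940 at 10 bit)."""
    scale = 1 << (bit_depth - 8)          # 1 at 8 bit, 4 at 10 bit
    black, white = 16 * scale, 235 * scale
    return (code - black) / (white - black)


def full_range_to_normalized(code: int, bit_depth: int = 8) -> float:
    """Interpret a luma code value as full range: black=0, white=2**bit_depth - 1."""
    return code / ((1 << bit_depth) - 1)


if __name__ == "__main__":
    # Video-range black (code 16) misread as full range becomes a raised, milky grey (~0.063).
    print(full_range_to_normalized(16))
    # Full-range shadow detail (codes 0..15) misread as video range falls below black and is crushed.
    print(video_range_to_normalized(8))
```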

-

In between holiday duties I just watched all the FCP X VUG recordings on YouTube. In the most recent one (jump to 56:53), the question is whether you can use a Blackmagic 4K interface to send HDR to an external monitor. Mark Spencer says no, it has to be the AJA Io, in order to set the flags for PQ vs. HLG and the peak brightness correctly, or whatever. That's telling me: sober up! Imagine you buy such a device and some so-so HDR monitoring display right now: that's a lot of money. And soon afterwards (according to a site that recommends the right time to buy new Apple hardware based on average product cycles, that means in the first half of 2018), a new line of iMacs is released. They might actually have HDR-capable displays. These may not be top-notch then, but they have the advantage of an all-in-one system, and they'd cost little more (maxed out) than the external gear available now. IMO it's best to be patient, keep an ungraded version of any LOG project that's likely to still be of interest next fall/winter, and in the meantime follow the efforts of the early adopters. I don't even own an HDR TV yet ...
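
Some background on why those flags matter: PQ (SMPTE ST 2084, the curve behind HDR10) encodes absolute luminance up to 10,000 nits, whereas HLG is relative to the display's peak. The same code values therefore mean different brightness depending on which curve the display assumes. A minimal sketch of the PQ curve using the constants published in ST 2084; the function names are mine, and it covers the signal math only, not how an I/O box actually sets the metadata.

```python
# SMPTE ST 2084 (PQ) constants, as published in the standard.
M1 = 2610 / 16384
M2 = 2523 / 4096 * 128
C1 = 3424 / 4096
C2 = 2413 / 4096 * 32
C3 = 2392 / 4096 * 32


def pq_eotf(signal: float) -> float:
    """Normalized PQ signal (0..1) -> absolute display luminance in nits (cd/m2)."""
    p = signal ** (1 / M2)
    y = (max(p - C1, 0.0) / (C2 - C3 * p)) ** (1 / M1)
    return 10000.0 * y


def pq_inverse_eotf(nits: float) -> float:
    """Absolute luminance in nits -> normalized PQ signal (0..1)."""
    y = (nits / 10000.0) ** M1
    return ((C1 + C2 * y) / (1 + C3 * y)) ** M2


if __name__ == "__main__":
    for nits in (100, 1000, 4000, 10000):
        print(nits, round(pq_inverse_eotf(nits), 3))
```

With these constants, 100 nits (the traditional SDR reference white) already lands at about half of the PQ signal range; the upper half is reserved for everything brighter.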

-

Are these solutions good enough? And isn't "good enough" merely a euphemism for "actually pretty bad"? Time will tell. As we get accustomed to seeing HDR images, we will later, probably years later, be prepared to compare and judge them. That makes early adopters brave pioneers. The more I learn from articles like these, the more I realize that my hopes of getting started with the bare minimum are a naive fallacy. The point where I land with a smack is usually when someone brings monitor calibration into the discussion: a long and winding rabbit hole, with the conclusion that I can only come incrementally closer. Some colorist (van Hurkman? Hullfish?) said that being aware of the problem was more valuable than having access to a (sort of) perfectly calibrated monitor without an inkling of what that meant. In the FCP X thread you showed that keeping track of all the variables needed for correct color space conversions (another horrifying term) in the 'pipeline' is far from as easy as it should be. I keep reading and commenting, but right now I feel confused and frustrated. I hope, not entirely unselfishly, that your efforts pay off and that you can then explain how you got there!

-

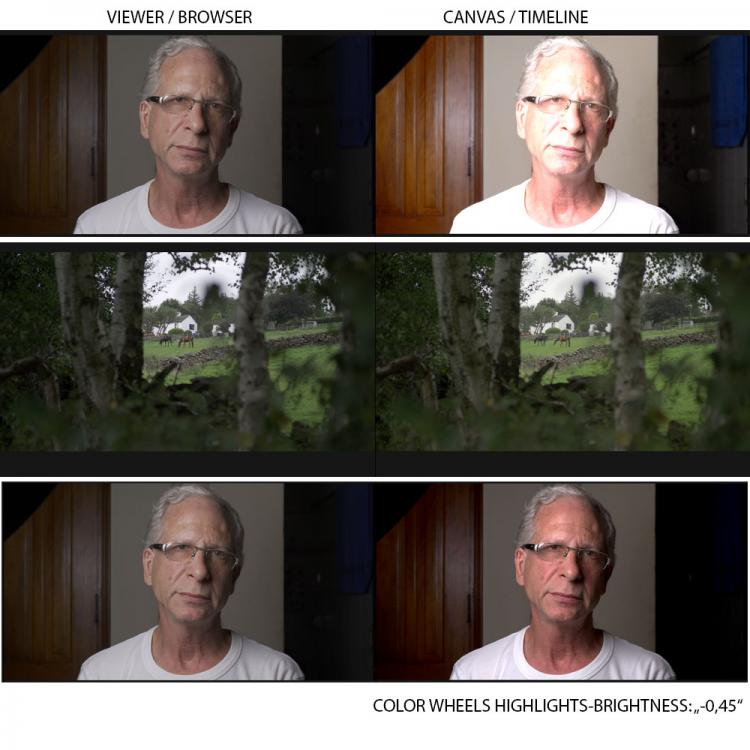

@jonpais I couldn't do the download right away because I'm the family's driver to the Christmas places; now everybody is taking a short nap before the next ordeal. You are right: everything looks completely blown out in the timeline, while it looks quite normal in the browser. So this is HLG? Because I wonder: with Andrew Reid's two HLG clips (horses on a meadow), there is indeed a *very subtle* brightness/gamma change between browser and timeline, and the clips look much less flat than, e.g., the V-Log sample. The values are spread (almost) perfectly between 0 and 100. In your clip, values that obviously belong between 60 and 100 IRE are *all* flatlined at 110! However, with one single correction (see attachment) I could normalize these highlights, with the exception of the reflection in your glasses. Why the difference between your shot and Andrew's? It's a riddle to me. Do you think it's a bug? Shouldn't the values be compressed below 100 automatically in a rec_709 project? Like I said, I remember having seen the same extreme exposure in one of your clips in 10.3.
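
One possible way to read the "flatlined at 110" observation, as a sketch under assumptions rather than a diagnosis: on a conventional video-range scale, 0 % sits at code 64 and 100 % at code 940 (10 bit), and the superwhite codes above 940 only reach to about 109 % before the signal runs out. Highlights clipped against that ceiling would show up as a flat line just under 110. The function name is hypothetical, and I'm assuming FCP's waveform uses this conventional mapping, which I haven't verified.

```python
def waveform_percent(code: int, bit_depth: int = 10) -> float:
    """Position of a video-range luma code value on a 0-100 % (IRE-style) waveform scale.

    Assumes 0 % = reference black (64 at 10 bit) and 100 % = reference white (940 at 10 bit).
    """
    scale = 1 << (bit_depth - 8)
    black, white = 16 * scale, 235 * scale
    return (code - black) / (white - black) * 100.0


if __name__ == "__main__":
    # In 10-bit SDI video, codes 1020..1023 are reserved, so 1019 is the highest
    # usable value: superwhites can only rise to roughly 109 % on this scale.
    print(round(waveform_percent(1019), 1))
```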

-

@jonpais Wish I could help. I must admit that I'm still confused about the relationship between the settings (preferences: raw values, library color space, project color space, HDR tools). I have a lot of LOG footage (my own S-Log2, my own BM Pocket LOG, FS7 S-Log3 from my buddy, downloaded V-Log and HLG clips from the GH5; some C200 clips had been RAW but were sadly converted to ProRes 4444). Some were radically ETTR'd and surely look overexposed in the browser. However, I can fit the values between 0 and 100 in the (rec_709) waveform, and nothing looks blown out, certainly not at 90-something IRE (BTW: you accidentally found the 'custom reference line': when you mouse over an IRE value in the waveform and then click, it sticks). You just wrote that you're giving up already, but let me/us try to replicate the issue. I'm quite sure I recently downloaded the clip in question (or a very similar one), called "Sigma 30mm f3.2". I can't remember where I found this clip, or whether it was V-Log or HLG (browser history deleted by the clean install of HS the day 10.4 came out), but it is the most extreme clip on my whole system in that it looked so very overexposed from the start. That was in 10.3! However, if I use the whole rec_709 pipeline, I am able to make it look normal in 10.4 with highlights hitting 100 (adjust highlights and shadows). alt+cmd+b totally fucks up everything, unusable. The FCP-internal LUT "Panasonic V-Log" automatically makes it look pretty normal, if somewhat oversaturated for my taste, but with 'legal' values. You should let us download the clip in your screenshot and guide us through the process. New territory. Who can appreciate WCG or rec_2020 in 2017/18? Honestly, I don't know. I can't tell for sure whether there's a noteworthy visual difference on my own P3 display ... And I'm sure you can do that in 10.4. Let's get to the bottom of this together. My buddy just ordered an HDR interface and the Dell HDR monitor.
He is on Windows and doesn't have FCP, but I'm curious to see how far he will get with Resolve. If his images blow my mind, I know I will never ever want to be limited to rec_709 again (although my wedding clientele isn't asking for HDR).
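
For anyone wondering why V-Log looks so flat before the LUT: the curve puts black at about 12.5 % of the signal and 18 % grey at roughly 42 %. A minimal sketch of the encode/decode pair, using the constants from Panasonic's published V-Log/V-Gamut reference (double-check them against the current document before relying on this). The function names are mine; a camera LUT like FCP's "Panasonic V-Log" presumably does something along these lines plus a gamut conversion and a display transform, none of which is shown here.

```python
import math

# Constants from Panasonic's published V-Log/V-Gamut reference document.
VLOG_CUT1 = 0.01      # linear reflectance below which the curve is a straight line
VLOG_CUT2 = 0.181     # corresponding V-Log signal value
VLOG_B, VLOG_C, VLOG_D = 0.00873, 0.241514, 0.598206


def vlog_encode(linear: float) -> float:
    """Scene-linear reflectance (0.18 = 18 % grey) -> V-Log signal (0..1)."""
    if linear < VLOG_CUT1:
        return 5.6 * linear + 0.125
    return VLOG_C * math.log10(linear + VLOG_B) + VLOG_D


def vlog_decode(signal: float) -> float:
    """V-Log signal (0..1) -> scene-linear reflectance."""
    if signal < VLOG_CUT2:
        return (signal - 0.125) / 5.6
    return 10 ** ((signal - VLOG_D) / VLOG_C) - VLOG_B


if __name__ == "__main__":
    print(round(vlog_encode(0.18), 3))   # mid grey lands around 0.42 of the signal range
    print(round(vlog_encode(0.0), 3))    # black sits at 0.125, not at 0
```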

-

If those are "custom camera LUTs", don't use the *effect* "Custom LUT"; instead go to >inspector >general, load them there permanently, and make sure they're designed for the color space you're using. LUTs for rec_709 look weird in WCG rec_2020.
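
Why a rec_709 LUT looks wrong in a rec_2020 project: the same RGB numbers mean different colors in the two spaces, because the 2020 primaries are much more saturated. A LUT built for 709 outputs 709 numbers; if these are interpreted as 2020 without conversion, colors come out oversaturated. A minimal sketch of the proper primaries conversion, using the linear-light matrix given (rounded to four decimals) in ITU-R BT.2087; the function name is mine, and a real pipeline also has to undo and redo the transfer function around this step, which is omitted here.

```python
# Linear-light RGB conversion from BT.709 to BT.2020 primaries,
# coefficients as given (rounded to 4 decimals) in ITU-R BT.2087.
BT709_TO_BT2020 = (
    (0.6274, 0.3293, 0.0433),
    (0.0691, 0.9195, 0.0114),
    (0.0164, 0.0880, 0.8956),
)


def bt709_to_bt2020(rgb: tuple[float, float, float]) -> tuple[float, ...]:
    """Convert linear (not gamma-encoded) BT.709 RGB to linear BT.2020 RGB."""
    return tuple(sum(m * c for m, c in zip(row, rgb)) for row in BT709_TO_BT2020)


if __name__ == "__main__":
    # Pure BT.709 red expressed in BT.2020 primaries is far less "extreme",
    # roughly (0.627, 0.069, 0.016). Skipping the conversion would instead
    # display it as (1, 0, 0) in 2020, i.e. much more saturated.
    print(bt709_to_bt2020((1.0, 0.0, 0.0)))
```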

-

This is very exciting! The new CC tools are so well thought out (they have so many "hidden" features, like Easter eggs; see the Ripple trainings on YouTube) that playing with them is great fun. They are precise and powerful at the same time. You can grade in no time, and you can just as quickly make your pathetic 8-bit stuff fall apart completely. Made me wonder whether FCP actually processes in 32-bit floating point. But I did the same operations in Resolve, and the results are the same. It's just so tempting now to give every single object in your image exactly the hue, saturation and brightness you have in mind! Does anybody know where to find C200 RAW footage? Would like to play with that ...

-

Are you sure? Please share with us how you connect your Inferno to your Mac. Someone recommended putting a BM DeckLink Mini 4K into an Akitio Node Lite housing, which would be ~$650 instead of $2500 for an AJA box or a BM UltraStudio 4K Extreme.

-

Apple says so in its "FCPX - what's new?" But what about: (specs for 2017 iMac 5k) ??? Could it be that easy? I don't think so. There is an in-depth tutorial on the new HDR tools in French. To be honest, with my school French I understand only about 40%: Doesn't this look just gorgeous?

-

Now downloadable from the App Store: 10.4. Upgraded to High Sierra with APFS today, clean install. No glitches detected so far. The new version feels more responsive. Only plugin: Neat (seems to work, but slow as usual). Existing libraries need to be updated, so beware: it's a one-way street. But not a dead end. After an hour of playing around, the CC tools make me believe that I won't use Resolve anymore. Apple stole the HSL curves, which I use the most anyway. To monitor HDR, you need very expensive third-party hardware, so I'll skip this for now (see the long thread I started a year ago). But I was right about one thing: Apple promises an easy SHARE option for various distribution targets. If PQ (HDR10) needs two versions (one in SDR), Compressor exports the film as a bundle, allegedly fully compatible. We'll see.

-

In a museum, living "paintings": I am very interested in this. I have been working on an elaborate storyboard for a short for months now: a sequence of scenes, each told within one frame, taking "every frame a painting" almost literally. Like a slideshow of cinemagraphs, some stills with sound, some extreme slomos, some smooth timelapses, morphs. You can combine various techniques: use burst mode to capture raw images, make comps, CGI. Very exciting indeed.

-

Is a6500 1080p footage Better When Recorded Externally?

Axel replied to Mark Romero 2's topic in Cameras

I have the SmallHD Focus. It also helps with exposure through false colors. I don't worry about the car tire shadows you mentioned, but you always want to expose skin correctly.

-

There are certain circumstances in which UHD at 100 Mbps is a problem: S-Log. Even if you bravely try to ETTR all the time, in the real world and in situations with a wide dynamic range you'll nonetheless sometimes capture the noise floor. Not necessarily shadows beneath car tires, but for instance parts of faces that happened to be heavily backlit. This noise then clots together into macroblocks with banding. You could live with a little noise, but those areas ruin the recording, and you usually see them when it's too late. The GH2 once had all-intra hacks with absurdly high data rates (like 172 instead of 24 Mbps), and the fans raved about the added detail. But there wasn't more detail. The hack just preserved all of the noise. The shadows looked more natural, and if there was too much noise, you could clean it up perfectly with Neat. A ProRes capture means fewer compression artifacts, so I wouldn't say it's completely useless. Would I want to schlep a recorder on top of my compact little camera? Definitely not. I try to expose better, and I accept around 5 % rejects where I failed.

-

My Mac does, but it uses Quick Sync, and so does Resolve, obviously. It's a matter of configuration or optimization, I guess. I wouldn't worry. If you haven't already, use the one-month trial period of Kyno. The idea is that you browse your card, preview the clips, set in and out points, subclip them with "s", rename them, whatever, and afterwards batch-convert them to ProRes, which happens way faster than with Resolve. Kyno doesn't "import" anything. Yes, this does eat more disk space (depending on your shooting ratio, of course), but you have your footage organized. I don't know for sure, but I think this will also be the perfect solution for the 10-bit clips which the free Resolve doesn't accept, for whatever reason ...

-

That doesn't mean a lack of appeal or interest, or at least I don't think so. I discussed the matter with my buddy, who does a lot of corporate video stuff. I showed him the Alistair Chapman clip, and he said, well, it seems I'll have to invest some 20,000 bucks. Why, I said, you have the FS7, that's 10-bit S-Log3 (probably getting the FS7 Mark II's rec_2020 profiles soon via firmware update), you want to sell the Shogun anyway to get the Inferno, what's the problem? He said, if you are working professionally, you can't have your client stare at a field monitor. And don't be so naive as to think it stops with a monitor! What else? I asked. But I knew the answer already: you can't know in advance. Things you never thought of in the beginning are suddenly missing. It's like a law. For myself, an occasional wedding videographer but actually a hobbyist? It could very well mean I'd have to sell the whole A6500 ecosystem I've barely used in earnest and buy the GH5 instead, with all the necessary accessories. Inferno? Wouldn't carry that around, no way! I borrowed the Shogun once and found it too bulky. Little to gain from ProRes over the GH5's internal codecs, too. And then of course a TV set. A few thousand! Apart from these worries, I think I'm a born HDR guy. The above Ursa shot as well as Neumann's GH5 sunset I graded "with HDR in mind", paradoxically by accentuating the shadows and carelessly letting the upper highlights clip. At first glance, this seems to contradict the common understanding of what HDR means. But *just* looking for detail in every part of the image, preserving highlights at any cost for rec_709 and so forth, doesn't result in a convincing image, imo. I play a lot with Blender, and I like the CGIs most where light is the star, not millions of dispensable fabric texture details that often distract more than they add to the overall impression. I'm pinning my hopes on FCP 10.4.
Not sure if the (few) "HDR tools" will enable me to preview some sort of basic HDR on my 500-nit display; it's probably wishful thinking. As I have learned by listening to FCP experts, the new CC tool integration will be a dream: a special inspector tab for color where they all live, with a set of very comprehensive tools that allegedly surpass the third-party plugins available so far (minus, alas, a tracker) ...

-

It cannot be THE same, however. But it must be sufficiently similar that offering an additional profile for the A7Rii and all the other vintage SDR crap cameras shouldn't be an insurmountable challenge. Unclear specs everywhere. My display (iMac 27) has 500 nits brightness, is allegedly closest to P3 as well (Larry Jordan explains the P3-related points here), and it has a well-hidden preference: >System Preferences >Displays >Display, then alt-click on >Resolution >Scaled, and a new checkbox appears below "Automatically adjust brightness": "Allow extended dynamic range". Found it accidentally (alt-click often reveals hidden things), but I don't see how this relates to anything.

-

If I recall correctly, it's a broad enough color space for 1080p, whereas 601 was fine for SD (480i/576i), with 2020 being appropriate for 2160p and above (afaik it also specifies 10- and 12-bit and frame rates up to 120p; HDR came later with BT.2100, which builds on the 2020 primaries). How do you say "709"? Seven hundred and nine? Or seven-oh-nine? Then to my foreign ears it almost sounds like someone from the very distant future: Because English is not my native language, I couldn't tell. I do hear a difference between, say, Alex Jones and John Oliver. This thread turned out to be an HDR seminar. I think that those who followed it have a deeper understanding and a better overview by now. jonpais, will you report on your Atomos experiences? You could write, edit and sell your own e-book about HDR. And hurry, before Wolfcrow changes his mind about the topic and does just that! This kind of answers my repeated question of how 709 video would look on an HDR TV: more or less the same as on an SDR TV, probably with better blacks. With the resolution race, new standards had to be sold by proving how poor the lower resolution looked in comparison. For early adopters (consumers or producers), HDR stands out without such a direct comparison. But the day may come when some couch potato says: why do they still broadcast all this dull SDR stuff?!!?? You can't, of course, make your own stuff future-proof through technology, only through content. According to Stephen Hawking, he isn't. He will either freeze to death, drown or be eaten by CGI wolves on a boat in the streets of Manhattan. Raising the brightness (and power consumption) of TVs won't prevent that.

-

Monitoring is one nightmare, recording the other. Van Hurkman does say that HDR is no excuse for buying a new camera as long as yours can record LOG, but this is probably only true if you have an Alexa, an Epic or at least an Ursa sitting on your shelf. It seems that stretching 8-bit LOG (at least 8-bit LOG without an HLG mode) to rec_2020 values results in banding and posterization (by which I mean that colors in the upper midtones are noticeably thin). Pocket ProRes (which is 10-bit 4:2:2 with up to 13 stops of DR) looked better than RAW (too many artifacts). As of yesterday, there is another option for 4K 10-bit with good DR: the Micro Studio 4K got a firmware update and now allegedly holds 13 stops as well. My buddy has three of those for live events, and he has the Shogun. Have to give it a try. The upcoming Sony A7Riii features HLG in 8-bit. How well this is going to work remains to be seen. As a matter of fact, those Sony hybrids have very good DR, the current models as well. Wouldn't it be technically possible to add an HLG profile via FW update? ;-)
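
Why stretching 8-bit LOG tends to band: grading can only redistribute the levels that were recorded, it cannot create new ones (this also holds for 32-bit float processing). A minimal sketch that counts how many distinct levels survive when one tonal band is stretched over a full 10-bit output. The band (60 to 80 % of the code range) is an assumed, hypothetical figure, the function name is mine, and real LOG curves and codecs add noise and compression on top, which this ignores.

```python
def distinct_output_levels(bit_depth: int, lo_frac: float, hi_frac: float, out_bits: int = 10) -> int:
    """Stretch the input code values in [lo_frac, hi_frac] of the code range across the
    full output range and count how many distinct output levels result."""
    in_max = (1 << bit_depth) - 1
    out_max = (1 << out_bits) - 1
    lo, hi = round(lo_frac * in_max), round(hi_frac * in_max)
    levels = {round((code - lo) / (hi - lo) * out_max) for code in range(lo, hi + 1)}
    return len(levels)


if __name__ == "__main__":
    # Hypothetical band: 60..80 % of the recorded code range, stretched across 10-bit output.
    print(distinct_output_levels(8, 0.6, 0.8))    # 52 steps to fill 1024 output values
    print(distinct_output_levels(10, 0.6, 0.8))   # 205 steps for the same band
```

Four times as many recorded steps in the same band is the whole difference between gentle gradients and visible posterization once the grade pulls the band apart.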

-

Well said. I'll follow this wise advice then, thanks for joining the discussion.

-

Thank you. Right now, only three apps on my Mac are capable of playing back your clips: FCP X, AAE and VLC. QTX, Kyno and Resolve 14 (free version) report "unsupported file type". I will try to import optimized versions from FCP X into Resolve. Must get my head around this first, following van Hurkman's tips. Then I will also import:
1. Neumann's 10-bit LOG footage (sunset)
2. Ordinary 8-bit S-Log2
3. Pocket RAW
... and finally export a project with a few frames of each in ProRes LT to share them.

I am the first to agree. I was critical of the benefits of higher resolutions (called them myths), I hated HFR in The Hobbit, I never liked 3D. But we are rapidly moving away from old viewing habits. I used to say resolution is not about quality, it's only about size. And what happened within a few years? I sit so extremely close to a retina screen that I actually need to wear glasses. HFR says "live" or present tense, I used to say, while LFR subconsciously says "once upon a time". But HFR swallows LFR: you can put 24p in 120p, but not the other way around. And 120p can look stunning, hyperrealistic, NOW. I imagine an extreme slomo with 120 fps as the real-time basis. It will be sooo smooth. For an action sequence, you can add as much motion blur in post as is suitable to enhance momentum. HDR has nothing to do with expanding film language's vocabulary. It's just aesthetically more pleasing. Enough reason for me.
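
The "24p fits in 120p" point is simple arithmetic: 120 is a whole multiple of 24 (and of 30 and 60), so every source frame can be shown a whole number of times without judder. Going the other way would mean inventing the missing frames. A small sketch with a made-up helper name; note that 25p does not divide evenly into 120p, which matters for PAL-region shooters.

```python
from typing import Optional


def repeats_per_frame(container_fps: int, source_fps: int) -> Optional[int]:
    """How many times each source frame is shown at the container frame rate,
    or None if the rates don't divide evenly (judder or frame blending needed)."""
    if container_fps % source_fps:
        return None
    return container_fps // source_fps


if __name__ == "__main__":
    for fps in (24, 25, 30, 60):
        print(fps, "in 120p:", repeats_per_frame(120, fps))
```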

-

Would like to have a snippet in 10-bit HLG from the GH5. The theory above ... Can it be that you have to expose HLG as you would rec_709, that you more or less cram everything of normal brightness below 100, and the extremely bright parts (some rare reflections, outlines from backlight, lights shining directly into the lens) get captured at higher values? And that in grading you are supposed to leave those extreme values in the stratosphere and, more importantly, leave the brighter midtones below 100 instead of pushing them over 100 to make better use of the additional headroom?

I wrote that I can't monitor HDR, but I do my best to come close. Just learned that my 5K iMac has 480 nits, which according to van Hurkman is not bad at all. Just went for WCG/rec_2020 in FCP and dialed up my LOG footage to +110, where it clipped. Looked really way-y-y better than Standard (rec_709), but how do I export it? I am really curious about the long-awaited FCP upgrade, for which Apple casually mentioned "HDR". If anything, Apple will adopt something if they can make it easy and straightforward. With an iMac, how would I connect an Inferno, so that the output isn't flagged as standard video? There is a very long thread in a German forum on the Dell UltraSharp UP2718Q (1000 nits) and how to make Resolve feed it the correct signal through a DeckLink card. 137 posts, but no satisfying, reassuring answer yet.
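
On the exposure question: the shape of the HLG curve itself (ARIB STD-B67, also in ITU-R BT.2100) is consistent with that reading. The lower half of the signal is a square-root curve, close in spirit to ordinary SDR gamma, and only the upper half switches to a logarithmic curve that compresses the highlights. A minimal sketch of the HLG OETF with the published constants; the function name is mine, and it shows the encoding curve only, not how the GH5 or FCP apply it.

```python
import math

# HLG constants from ARIB STD-B67 / ITU-R BT.2100.
HLG_A = 0.17883277
HLG_B = 1 - 4 * HLG_A                        # 0.28466892
HLG_C = 0.5 - HLG_A * math.log(4 * HLG_A)    # about 0.55991073


def hlg_oetf(scene: float) -> float:
    """Normalized scene light (0..1) -> HLG signal (0..1)."""
    if scene <= 1 / 12:
        # Lower part: square-root curve, similar in spirit to conventional SDR gamma.
        return math.sqrt(3 * scene)
    # Upper part: logarithmic curve that compresses the highlights.
    return HLG_A * math.log(12 * scene - HLG_B) + HLG_C


if __name__ == "__main__":
    for scene in (0.01, 1 / 12, 0.25, 0.5, 1.0):
        print(round(scene, 3), round(hlg_oetf(scene), 3))
```

Scene light of 1/12 maps to exactly half the signal, so the bottom half of the HLG range behaves much like the SDR world, and the top half is the headroom for those "extreme values in the stratosphere".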

-

There is an old trick for getting subjectively deeper blacks and therefore a subjectively clearer and sharper preview: backlight. If your monitor is set to 6500K, you put a 6500K light right behind it. The improvement is dramatic. Unfortunately, the viewer might have his TV hanging on a cinnamon-colored wall, with some 3200K lamps somewhere to provide ambient light. Because it looked better with our modern furniture, we recently painted the wall behind our (4-year-old Samsung) TV from white to matte black. Night scenes can't be watched anymore. All of a sudden it became apparent that the damn thing couldn't show black, just because the background is now several "stops" darker.

Should one buy a GH5 (because of HLG in 10-bit) or an Ursa Mini (RAW)? If very good and bright LG or Samsung HDR TVs are the new bottles, how will the old wine, the terribly crippled 709 stuff we are now proud of, taste from them?