Vesku

-

Posts

613 -

Posts posted by Vesku

-


For me, 4k 60P vs 30P is a bigger improvement than HDR vs SDR, because I already have such good, nuanced, bright and vivid colors with my 8bit system that it is hard to imagine it being even better. Of course true HDR has a better image, but smooth, sharp and well-defined motion is more important to me. I wonder when the industry is going to move to HFR HDR. Who will be the first to shoot a movie in 4k 60P HDR?

-

@jonpais Good that we are trying to understand each other and this HDR phenomenon. I don't have an HDR TV or monitor. I have a 65 inch Panasonic AX800 4k TV which covers 98% of the DCI-P3 color space and has about 500 nits brightness. It is not HDR ready, so it has no HDR profile or YT HDR feature. It can play 10bit HEVC files from an SD card but it doesn't understand HDR mode. My computer monitor is a vivid and bright 27" 2.5k IPS, so it has gray blacks but otherwise a very good image.

I know the difference between true HDR and SDR. I have seen many demos in stores. I am still wondering why many SDR videos today must have a 30-year-old look with very limited dynamic range even though our displays are so much better today. I think that not many people have calibrated their bright TVs to the standard 100 nits. It is too dim in normal room lighting. Of course the industry has standards and they can't grade videos wildly for the best TVs, but still many videos have an unnecessarily contrasty and clipped look. Our eyes/vision are very flexible. In my mind it would be better to keep some highlights in sunsets or bright objects. Our brain can understand the scene without blinding "true" HDR brightness.

HDR is coming and I am going to use it when I get an HDR TV. Meanwhile I am getting very good results with my 8bit SDR system using the GH5's 12 stops of dynamic range in 8bit, 0-255 video range and my TV's bright and vivid colors. 4k 60P also helps to make my videos more life-like. 4k 60P 10bit HDR would be nice but my camera is not capable of it (internally).

Sharing these "extreme" videos is difficult. It is fun for our own pleasure but for a big audience it is still just coming. There is not even HDR for movie theaters. No video projector can show HDR brightness or HDR colors. The big screen is dim and faint. What is the point of DCI-P3 or rec2020 if the max brightness in a movie theater is 50-80 nits?

-

SDR vs HDR. Is it necessary to always adjust SDR videos for 6-7 stops of dynamic range? If our camera can save 10-12 stops, why must we grade SDR video to clip highlights and crush shadows? Not many people are using such bad displays. Most TVs and displays can show quite bright whites and good contrast with 8 bit SDR material. In most cases clipping looks worse than keeping the highlights.

I have shot for many years using "HDR"-like profiles and viewing settings. I shoot with a flat profile (GH3/4/5 Natural, contrast -5 + i.Dynamic low). When I watch my videos I set my TV brightness and contrast both to 100 and colors to the TV's native largest gamut. I use a darker gamma in the TV/computer player to compensate for the flat profile. The result is an HDR-like video experience with 8 bit SDR material. My videos look a little flat on weak, low-contrast screens but just OK on good screens.

We watch very high quality photos with good dynamic range and colors on our 8bit screens. Why must 8bit videos look crushed and clipped on the same screens? Why must there be holes in the head and plain white clouds? Why do national and commercial TV programs have such low dynamic range? Are the professional cameras bad or are there some rec709 rules to adjust images to look like crap?

I think it is possible to have HDR video with 8bit. 10bit is of course better but 8bit is not always so bad. Modern TVs have sophisticated filters to show 8bit video on a 10bit panel so that we can't see banding like on a "dumb" computer screen.
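To put rough numbers on the 8bit vs 10bit banding question, here is a minimal sketch. It assumes an idealized pure-log encoding that spreads all code values evenly over 12 stops, which is a simplification (real camera profiles and display gammas are not pure log), and the function name is made up for illustration.

```python
def code_values_per_stop(bit_depth: int, stops: float) -> float:
    """Average number of code values per stop of dynamic range.

    ASSUMPTION: an idealized pure-log encoding, full range (no legal-range
    headroom), with every stop receiving the same share of code values.
    """
    levels = 2 ** bit_depth
    return levels / stops


for bits in (8, 10):
    per_stop = code_values_per_stop(bits, 12)
    print(f"{bits}bit over 12 stops: about {per_stop:.1f} code values per stop")
```

Under that assumption 8bit leaves roughly 21 steps per stop and 10bit roughly 85, which is why smooth gradients such as skies are where 8bit banding tends to show first.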

-

4 hours ago, jonpais said:

Chapman cautioned against using televisions as reference monitors because they each have different curves (not sure if I remember correctly!) and auto-enhancement features to make their picture stand out; and while I've heard several colorists talk about picking up an OLED TV, I've yet to read about anyone's experiences grading on one - if I'm not mistaken, I think they're mostly used to demo work to clients..

A good television shows reference quality colors. Enhancements can be turned off.

A VA-panel TV is not good for close monitoring because the viewing angle makes the edges fade. An IPS panel monitor/TV cannot show HDR-like contrast (gray blacks). OLED should be the best choice.

-

7 minutes ago, jonpais said:

Dell proudly boasts that the UP2718Q.....an IPS panel......

Not good for HDR. Gray blacks and clouding.

-

Can someone output 10bit HDR video from a computer player to a 10bit monitor or 10bit HDR TV? How can I use an HLG profile with a video player (VLC, PotPlayer etc.)?

-


1 hour ago, zurih said:

Question: is there anyway to change my GX85 from NTSC to PAL? I'd like to record at 25p/50p instead of 30p/60p. Thanks

Try this tutorial:

-

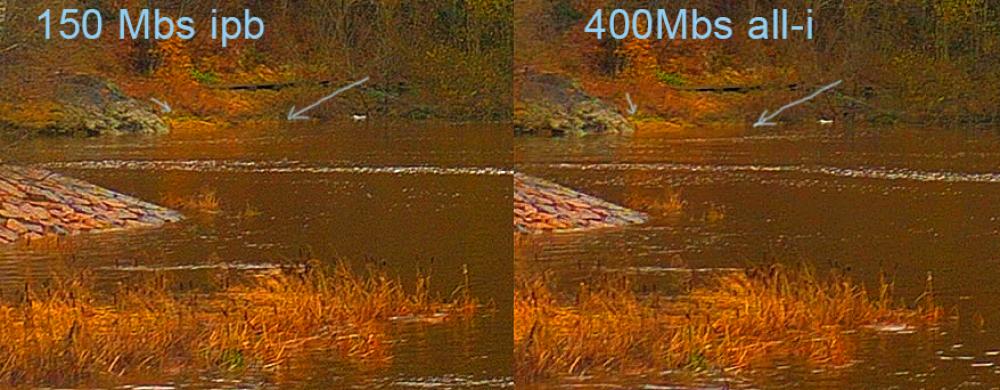

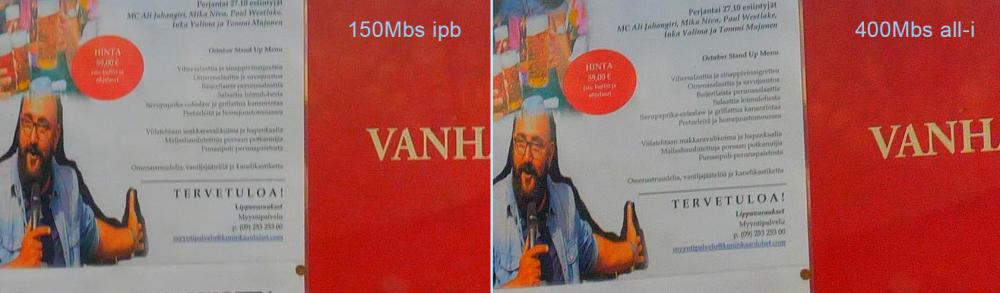

I have shot tests using 30P 150Mbps ipb vs 400Mbps all-i. In quite static videos the ipb has slightly fewer compression artifacts than all-i. When looking at enlargements of backgrounds, ipb vs all-i is like comparing a high quality JPG to a medium quality JPG. On the Adobe Photoshop JPG quality scale (1-12) it is about quality 5 vs quality 7 for a stopped frame. IPB may cause some small oddities but that is very rare.

In motion the quality difference is reversed. All-i has less macro blocking but ipb is still very good. When making the final delivery version (ipb) the motion macro blocking is always worse than in the original. In a static scene we may see the small compression difference in the final render if we use a high enough bitrate.

-

From Alexis Van Hurkman tutorial:

"The basic idea is that the HLG EOTF (electronic-optical transfer function) functions very similarly to BT.1886 from 0 to 0.6 of the signal (with a typical 0 – 1 numeric range), while 0.6 to 1.0 segues into logarithmic encoding for the highlights. This means that, if you just send an HDR Hybrid Log-Gamma signal to an SDR display, you’d be able to see much of the image identically to the way it would appear on an HDR display, and the highlights would be compressed to present what ought to be an acceptable amount of detail for SDR broadcast."

GH5 HLG looks very different from normal SDR profiles on an SDR display. Contrary to the theory above, it is unusable without grading.
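For reference, the camera-side HLG curve (the OETF in ITU-R BT.2100) can be sketched in a few lines. This is only an illustration of the "gamma-like below, logarithmic above" idea from the quote; the function name is my own. In this formulation the switch to the log segment happens at a signal value of 0.5 (scene light at 1/12 of peak). The sketch says nothing about how the GH5 or any particular display actually processes the signal.

```python
import math

# Constants as given in ITU-R BT.2100 for the HLG OETF.
A = 0.17883277
B = 1 - 4 * A                   # about 0.28466892
C = 0.5 - A * math.log(4 * A)   # about 0.55991073


def hlg_oetf(scene_light: float) -> float:
    """Map normalized linear scene light (0..1) to an HLG signal value (0..1)."""
    if not 0.0 <= scene_light <= 1.0:
        raise ValueError("scene_light must be within 0..1")
    if scene_light <= 1 / 12:
        # Square-root segment: behaves like a conventional camera gamma.
        return math.sqrt(3 * scene_light)
    # Logarithmic segment: compresses the highlights.
    return A * math.log(12 * scene_light - B) + C


for light in (0.0, 1 / 12, 0.25, 0.5, 1.0):
    print(f"scene light {light:.4f} -> signal {hlg_oetf(light):.4f}")
```

Half of the signal range is spent on the lowest 1/12 of the scene light, and the upper half covers everything brighter, which is the part an SDR display would show as compressed highlights.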

-

The new IS-lock is great. Surprisingly it improves gimbal-like walking videos. Combined with E-Stabilization it makes walking with the camera quite smooth.

-

44 minutes ago, jonpais said:

Alexis Van Hurkman writes eloquently about the advantages of HDR, ...... His article entitled HDR, Resolve, and Creative Grading is definitely worth a look.

Thanks, very interesting and deep. One little fact jumped out at me:

" ...the standard for peak luminance in the movie theater has long been only 48 nits..."

Even SDR videos/movies look more vivid and colorful at home on a TV with at least 100 nits, or even brighter. HDR on a big TV is many, many times more life-like than a dim film in a theater. I was in an IMAX theater last weekend and the image was very dim and low contrast. I think it was about 25 nits, but the screen was big. There was no true color and no whites. Earlier I also watched The Revenant in a big 4k theater and the image was very dim on the big screen.

-

26 minutes ago, jonpais said:

I'll settle for being able to see a mere nine stops of DR. I can wait until 2025 to see seventeen.

Your LG OLED can in theory show an infinite amount of dynamic range in a black room. For example from 0.001 nits to 700 nits = contrast ratio 700 000:1. That is about 19 to 20 stops of light intensity difference (log2 of 700 000 is roughly 19.4). Another thing is how much captured dynamic range the files contain and how coarse the reproduction of that brightness scale is. 10bit has less banding and smoother gradations than 8bit but the extreme whites and blacks are the same.

So there are at least 3 different factors of dynamic range:

1. captured (scene) dynamic range (GH5 about 12-13 stops, Cine camera 15 stops)

2. Display contrast ratio, the difference from black to white (LCD about 2000:1, OLED effectively infinite:1).

3. Display/file ability to show smooth gradations between black/white and ability to show wide range of colors in all brightness levels (LCD better in whites, OLED better in dark tones).

To show extreme dynamic range we don't need a super bright display. We can also get more dynamic range by darkening the blackest black levels. It is the ratio (contrast ratio) that matters.
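The stop figures in this post come from a base-2 logarithm of the contrast ratio. A minimal sketch (function names are mine; the nit values are the examples from the post, not measurements):

```python
import math


def stops_from_contrast(contrast_ratio: float) -> float:
    """Stops of range for a contrast ratio given as N:1 (one stop = doubling)."""
    return math.log2(contrast_ratio)


def stops_from_luminance(black_nits: float, white_nits: float) -> float:
    """Stops between a display's black level and peak white, both in nits."""
    return stops_from_contrast(white_nits / black_nits)


print(f"OLED 0.001 to 700 nits: {stops_from_luminance(0.001, 700):.1f} stops")
print(f"LCD 2000:1:             {stops_from_contrast(2000):.1f} stops")
# Lowering the black level adds range just like raising peak white does:
print(f"0.0005 to 700 nits:     {stops_from_luminance(0.0005, 700):.1f} stops")
```

Halving the black level adds exactly one stop, the same gain as doubling peak brightness, which is the point about the ratio being what matters.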

-

It has the same IBIS sensor as the GH5 (5184×3888 resolution), no multi aspect. No multi-region 50/60P switching, as in all Panasonic "consumer" models. No 10bit video modes or 400Mbps.

-


3 minutes ago, jonpais said:

I think nits are a linear measurement, and my current set emits some 690 of the buggers - if it gave off 10,000 nits, that'd be around 15 times the present amount of light - maybe it would fry my eyeballs!

From Dolby test laboratory: https://blog.dolby.com/2013/12/tv-bright-enough/

"...At Dolby, we wanted to find out what the right amount of light was for a display like a television. So we built a super-expensive, super-powerful, liquid-cooled TV that could display incredibly bright images. We brought people in to see our super TV and asked them how bright they liked it. Here’s what we found: 90 percent of the viewers in our study preferred a TV that went as bright as 20,000 nits. (A nit is a measure of brightness. For reference, a 100-watt incandescent lightbulb puts out about 18,000 nits.)..."

On an average sunny day, the illumination of ambient daylight is approximately 30,000 nits. http://www.generaldigital.com/brightness-enhancements-for-displays

-

7 hours ago, Jn- said:

Wolfcrow's latest video, GH5 v2.1 review, see about 13 minutes in where he addresses some of these issues.

He praises 400Mbps but he doesn't see that 150Mbps ipb is actually better in a static scene. It is like a high quality JPG vs a medium quality JPG. In motion it is the other way around.

-

8 minutes ago, Don Kotlos said:

I just noticed that EVA1 is in VBR and I am trying to figure out whether the ALL-I is in VBR. @Vesku can you check the actual bitrate on the static vs moving scenes in both IPB & All-I?

GH5 all-i is a pretty constant 400Mbps, static or moving. 150Mbps ipb is also quite constant, although it varies between about 145 and 155 Mbps.

I remember GH3 all-i was very variable and seldom reached its maximum.

-

I made many tests today. My final conclusion:

All-i is better with complex motion. A surprise is that ipb is a little bit better in a static scene. It compresses the important i-frame less. The difference is very small. The motion difference is bigger but still quite marginal.

A static comparison first and a moving one second (red). I hope this site doesn't re-compress my images.
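A rough bit-budget sketch shows why ipb can spend more on its i-frame than all-i spends on any single frame. The bitrates and 30P frame rate are from these tests. The GOP layout (15 frames: 1 I, 4 P, 10 B) and the relative frame-size weights are purely hypothetical values chosen for illustration, not Panasonic's actual encoder settings.

```python
def all_intra_mbit_per_frame(bitrate_mbps: float, fps: float) -> float:
    """All-Intra: every frame is an I-frame, so the budget is split evenly."""
    return bitrate_mbps / fps


def ipb_mbit_per_i_frame(bitrate_mbps: float, fps: float,
                         n_p: int, n_b: int,
                         w_i: float, w_p: float, w_b: float) -> float:
    """IPB: one I-frame, n_p P-frames and n_b B-frames share the GOP budget
    in proportion to the relative frame-size weights w_i, w_p and w_b."""
    gop_frames = 1 + n_p + n_b
    gop_mbit = bitrate_mbps * gop_frames / fps
    total_weight = w_i + n_p * w_p + n_b * w_b
    return gop_mbit * w_i / total_weight


# HYPOTHETICAL GOP: 1 I + 4 P + 10 B frames.
# HYPOTHETICAL static-scene weights: I=10, P=2, B=1 (little changes between frames).
all_i = all_intra_mbit_per_frame(400, 30)
ipb_i = ipb_mbit_per_i_frame(150, 30, n_p=4, n_b=10, w_i=10, w_p=2, w_b=1)
print(f"All-I, every frame: {all_i:.1f} Mbit")
print(f"IPB, I-frame only:  {ipb_i:.1f} Mbit")
```

With these assumed numbers the ipb i-frame gets about 26.8 Mbit against 13.3 Mbit per all-i frame. When there is a lot of motion the P and B frames need a larger share, so the advantage shrinks or reverses, which would fit the test results.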

-

Good news for GH5 users!

The new IS lock stabilizer is incredible. It "glues" the lens in static clips but it helps in other ways too.

Surprisingly the IS lock makes walking with the camera very stable and smooth. It gives a "floating" stabilization and keeps the frame smoothly balanced. Combined with a DUAL IS2 lens and e-stabilization, walking with the camera is very smooth and stable. I used my 14-140 II lens.

-

9 hours ago, maxotics said:

When I look at the HDR videos at BestBuy, on all the fancy TVS, they look a joke to me (all super high contrast).

Yes, I have found the same thing. HDR demos in stores often show normal contrast scenes adjusted to be very contrasty and colorful. The result is oversaturated, eye-watering, unnatural images. When I go outside I don't see an oversaturated world. The normal world is actually quite dull and boring color-wise. A good HDR system should show high contrast and high color only if the subject has it. Just like a good audio system does not make too much bass or too loud a sound all the time (action movies do).

It is a difficult question. If the HDR system works right, the image should look normal the majority of the time. Only the high contrast/high color scenes will look different. But it is difficult to sell a new expensive system which looks the same as the old one the majority of the time. Film makers must find shocking colors and sunsets to make HDR shine, but in normal life there are not so many shining moments.

-

57 minutes ago, jonpais said:

@Vesku I haven't watched the video yet, but the only way to judge ALL- I is by downloading the original files, right? When videos are uploaded to YT, they are no longer intraframe compression...

This video has 400% enlarged crops of ipb vs all-i. We should see the differences, if there are any, even with YT ipb quality.

It is still silly when many users upload examples of their 400Mbps tests using a normal YT stream without enlarged or "tortured" examples.

-

Here is a GH5 1080P 100Mbps ipb vs 200Mbps all-i test (not mine). Can you see any difference in the 400% enlarged examples? Panasonic ipb is very good.

HDR on Youtube - next big thing? Requirements?


I just watched some 4k 60P 10bit HDR demos on my non-HDR TV via SD card and they looked stunning. Even though my TV is not HDR ready, it shows such a large color gamut and brightness that the HDR videos looked almost as they should. I used max brightness and contrast with the darkest gamma possible, so the image was quite OK, just a little too bright. 10bit is very good, no banding at all with max settings. I think my TV shows HDR demos better than mid-range LCD "HDR ready" TVs because my old top model has a larger color gamut and better contrast.

I wonder when photography is going to adopt a 10bit standard. HDR photos would look nice. It is funny that we must render a 10bit video of photos to watch HDR photos.