Leaderboard

Popular Content

Showing content with the highest reputation on 04/25/2024 in all areas

-

I think that if you can possibly manage it, it's best to provide the simplification yourself rather than through external means. This gives you flexibility in the odd case where you need it, and doesn't lock you in over time.

The basic principle I recommend is to separate R&D activities from production. Specifically, I would recommend testing the various ways you can do something or tackle some problem, and the options for your workflow, evaluating the experience and results, then picking one and treating it as your limitation. I'm about to do one of those cycles again, where I've had a bunch of new information and now need to consolidate it into a workflow that I can just use and get on with it.

Similarly, I also recommend doing that with the shooting modes, as has happened here:

I find that simple answers come when you understand a topic fully. If your answers to simple questions aren't simple answers then you don't understand things well enough. I call it "the simplicity on the other side of complexity" because you have to work through the complexity to get to the simplicity.

In terms of my shooting modes, I shoot 8-bit 4K IPB 709 because that's the best mode the GX85 has, and camera size is more important to me than the codec or colour space. If I could choose any mode I wanted I'd be shooting 10-bit (or 12-bit!) 3K ALL-I HLG 200Mbps h264. This is because:

- 10-bit or 12-bit gives lots of room in post for stretching things around etc, and it just "feels nice"
- 3K because I only edit on a 1080p timeline, but having 3K would downscale some of the compression artefacts in post rather than have all the downscaling happening in-camera (and if I zoom in post it gives a bit more extension; mind you, you can zoom to about 150% invisibly if you add appropriate levels of sharpening)
- ALL-I because I want the editing experience to be like butter
- HLG because I want a LOG profile that is (mostly) supported by colour management, so I can easily change exposure and WB in post photometrically without strange tints appearing, and not just a straight LOG profile because I want the shadows and saturation to be stronger in the SOOC files so there is a stronger signal to compression noise ratio
- 200Mbps h264 because ALL-I files need about double the bitrate compared to IPB, and also I'd prefer h264 because it's easier on the hardware at the moment, but h265 would be fine too (remembering that 3K has about half the total pixels of 4K)

The philosophy here is basically that capturing the best content comes first, the best editing experience comes next, followed by the easiest colour grading experience, then the best image quality after that. This is because the quality of the final edit is impacted by these factors in that order of importance.

2 points
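As a rough check on the "about half the total pixels" remark in the post above, here is a minimal sketch of the arithmetic. The post does not define "3K", so the 2880x1620 frame size is an assumption, as is the 25 fps frame rate; the helper names are hypothetical.

```python
# Frame sizes as (width, height). UHD 4K is standard; the "3K" size is an
# assumed 16:9 frame, since the post does not say what 3K means exactly.
UHD_4K = (3840, 2160)
ASSUMED_3K = (2880, 1620)


def pixel_count(size):
    """Total pixels in one frame."""
    width, height = size
    return width * height


def bits_per_pixel(bitrate_mbps, size, fps):
    """Average encoded bits available per pixel per frame."""
    return bitrate_mbps * 1_000_000 / (pixel_count(size) * fps)


ratio = pixel_count(ASSUMED_3K) / pixel_count(UHD_4K)
print(f"Assumed 3K / 4K pixel ratio: {ratio:.2f}")

# 200 Mbps is the bitrate named in the post; 25 fps is an assumption.
bpp = bits_per_pixel(200, ASSUMED_3K, 25)
print(f"200 Mbps at assumed 3K, 25 fps: {bpp:.2f} bits per pixel per frame")
```

With these assumed sizes the ratio comes out a little over half, which is in line with the "about half" in the post. A different definition of 3K would shift the number.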

-

Color - SOOC vs. LUTs/Grading

SRV1981 reacted to eatstoomuchjam for a topic

That makes total sense. One would expect a professional colorist to groan a bit if handed 8-bit log footage vs 10-bit log (or 12-bit raw). I'd imagine that most want the most flexible image to work with when possible - there's a reason that Hollywood tends to shoot most stuff on Arri and it's not ease of use or portability.

1 point

-

I had the X2 and the 1 inch 360, and still have the One R 360. I did play a bit more with the X4.

The good:

- 8k is a very visible bump in quality compared to the X3 and 1 inch. Once reframed it is still not at single lens action cam level, but it is getting closer. Of course, it really depends on how much you zoom in while reframing. It is more detailed, with fewer compression artifacts. Also, in 360 VR the quality bump is very evident.
- operations and UI are snappier than previous models (other than stopping the recording, which takes a long time, not sure why)
- 5.7k at 60 allows some slow-motion with X3 quality; in previous models the slowmo was just unusable.
- one button operation is customizable so you can set the video mode, frame rate, etc...
- battery seems to last a long time, even in 8k 30
- App and Studio are great and work as expected. Connecting the camera to the phone is super easy and always works. Why can't Canon copy them?

The ok:

- lens guards work ok, with a great way of mounting them, but they are not a free lunch: they need to be super clean, and they still create weird flares. I will probably use mine only in really risky situations. But it is good that they are included.
- audio seems a tad better
- I don't see a big difference in lowlight compared to the 360 1 inch. They are both quite bad, but the X4 is not worse IMO, which is surprising.
- single lens mode allows 4k 60fps, but to be honest the quality gain compared to 8k reframed is not big enough; useful if you need 60fps or you don't want to post process. For example, for chest mount I prefer the reframed angle to single lens.
- seems to tend to overexpose, but you can set the EV so not a big issue.

The bad:

- no 10bit log
- as with all previous models, this type of camera screams for sunny bright days. I mostly use them while moving (ski, mtb, horses, cars....) and as soon as the light is not great, stabilization and video quality suffer a lot. So it is a brilliant camera for sunny days only.
- is heavier and bigger than the X3 or the One R + 360 module.
- the form factor is perfect for a selfie stick but not great for a helmet mount.
- has only a tripod hole, no gopro mount; it should have both. For action, mounting through the tripod hole will make the camera break in case of any impact, where normally the gopro mount would give a bit, saving the camera. You can use an adapter, but it makes it even taller.
- apparently, every time you turn on the camera you need to re-pair the bluetooth mic. Btw why why and why is DJI disabling internal Mic 2 transmitter recording while connected via bluetooth?!? It would be such a clean solution to record scratch over bluetooth (no receiver needed) and have the 32bit float on the transmitter.
- external audio through receiver + cold shoe mount + usb adapter is a frankenmonster that is really not usable in an action environment. All the YT reviewers showing this setup as amazing make me cry....

Overall it is the best 360 camera on the market by far, coming close to single lens action cam quality, below 2k usd. Finally, after years of stagnation, a tangible bump in quality. With the revival of AR/VR they should do a 180 3D model out of this.

1 point

-

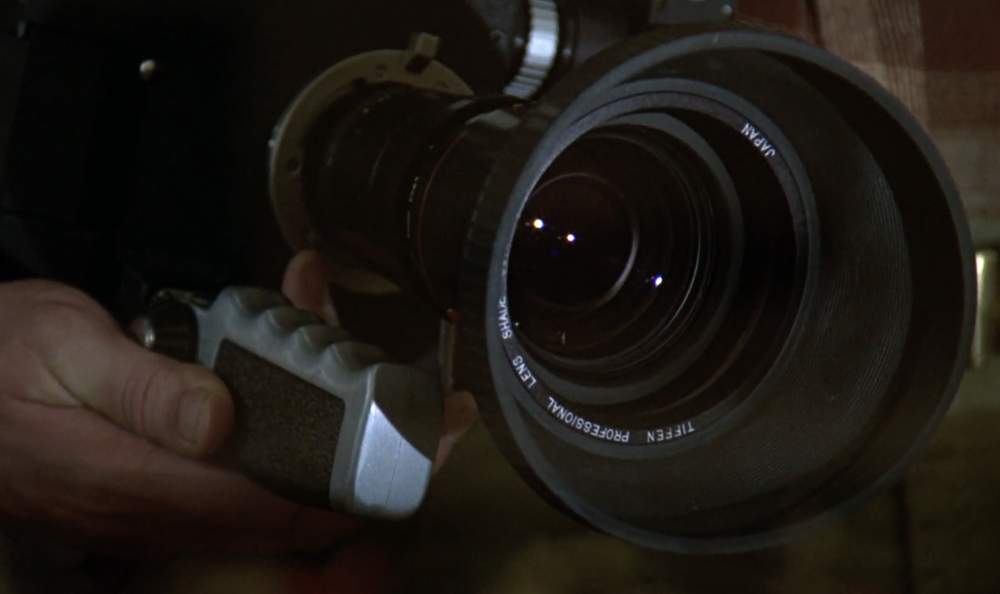

The China Syndrome (1979): What film camera did Michael Douglas' character use?

ac6000cw reacted to John Matthews for a topic

1 point

-

If it was a real working 16mm film camera, I don't think it would be an ENG (Electronic News Gathering) lens, as they are designed for professional portable video cameras (which in the late 1970s would have been triple vacuum tube image sensor cameras using a dichroic colour splitting prism, thus having a long flange-to-sensor optical path). But of course in the movie it's basically a prop, so doesn't have to be a working camera.

1 point

-

Tamron is also putting out an RF-S (APS-C) zoom lens (11-20mm F2.8). Makes sense as Canon have barely developed any RF-S lenses (not a single prime or fixed aperture lens). So this will surely help sell crop bodies. So yeah, while this is great news for APS-C Canon owners, I really don’t see this as a solid indicator that Canon will open up to third-party FF RF lenses, although one can hope!

1 point

-

It's ok - neither does anyone else!

1 point

-

Well that is pretty decent. In that case, I might look even harder at the system next year… The R3 is for my needs the best body currently on the market. A Nikon Z6iii with additional battery grip is probably going to beat it…maybe…for me and as someone now 50% invested in Nikon, almost certainly where I will be going. But at the end of every season, I review my needs and Canon could be an option, especially now that Sigma have joined the party, but would need to have brought out a pretty extensive FF line by next Spring. I think Canon really do need to lighten up on their lens stance though or it will bite them in the arse. If it is not already nibbling…

1 point

-

When you say "like they are emitting light themselves" you have absolutely nailed the main problem of the video look. I don't know if you are aware of this, so maybe you're already way ahead of the discussion here, but here's a link to something that explains it way better than I ever could (linked to timestamp):

This is why implementing subtractive saturation of some kind in post is a very effective way to reduce the "video look". I have recently been doing a lot of experimenting, and a recent experiment showed that reducing the brightness of the saturated areas, combined with reducing the saturation of the higher brightness areas (desaturating the highlights), really shifted the image towards a more natural look. For those of us that aren't chasing a strong look, you have to be careful with how much of these you apply, because it's very easy to go too far and it starts to seem like you're applying a "look" to the footage. I'm yet to complete my experiments, but I think this might be something I would adjust on a per-shot basis.

You'd have to see if you can adjust the Sony to be how you wanted. I'd imagine it would just do a gain adjustment on the linear reading off the sensor and then put it through the same colour profile, so maybe you can compensate for it and maybe not.

TBH it's pretty much impossible to evaluate colour science online. This is because:

- If you look at a bunch of videos online and they all look the same, is this because the camera can only create this look? Or is this the default look and no-one knows how to change it? Or is this the current trend?
- If you find a single video and you like it, you can't know if it was just that particular location and time and lighting conditions where the colours were like this, or if the person is a very skilled colourist, or if it involved great looking skin-tones then maybe the person had great skin or great skill in applying makeup, or even if they somehow screwed up the lighting and it actually worked out brilliantly just by accident (in an infinite group of monkeys with typewriters one will eventually type Shakespeare) and the internet is very very much like an infinite group of monkeys with typewriters!
- The camera might be being used on an incredible number of amazing looking projects, but these people aren't posting to YT. Think about it - there could be 10,000 reality TV shows shot with whatever camera you're looking at and you'd never know that they were shot on that camera, because these people aren't all over YT talking about their equipment - they're at work creating solid images and then going home to spend whatever spare time they have with family and friends. The only time we hear about what equipment is being used is if the person is a camera YouTuber, if they're an amateur who is taking 5 years to shoot their film, if they're a professional who doesn't have enough work on to keep them busy, or if the project is so high-level that the crew get interviewed and these questions get asked. There are literally millions of moderately successful TV shows, movies and YouTube channels that look great, and there is no information available about what equipment they use.
- Let's imagine that you find a camera that is capable of great results - this doesn't tell you what kind of results YOU will get with it. Some cameras are just incredibly forgiving and it's easy to get great images from them, and there are other cameras that are absolute PIGS to work with, and only the world's best are able to really make the most of them. For the people in the middle (i.e. not a noob and not a god) the forgiving ones will create much nicer images than the pigs, but in the hands of the world's best, the pig camera might even have more potential.

It's hard to tell, but it looks like it might even be 1/2. You have to change the amount when you change the focal length, but I suspect Riza isn't doing that because of how she spoke about the gear.

It's also possible to add diffusion in post. Lifting the shadows with a softer contrast curve can also have a similar effect.

1 point
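The two adjustments described in the post above (darkening saturated areas, and desaturating the brighter areas) can be sketched per pixel as below. This is only an illustration under loud assumptions: it uses the HSV model from Python's standard `colorsys` module as a stand-in, whereas real grading tools work in other colour models, and the function name and both strength parameters are hypothetical, not anything the poster described.

```python
import colorsys


def subtractive_saturation(rgb, darken=0.3, highlight_desat=0.5):
    """Adjust one RGB pixel (floats in 0..1) and return the new RGB tuple.

    darken: how much a fully saturated pixel is darkened (assumed knob).
    highlight_desat: how much a full-brightness pixel is desaturated
    (assumed knob).
    """
    r, g, b = rgb
    h, s, v = colorsys.rgb_to_hsv(r, g, b)
    # The more saturated the pixel, the more its brightness is reduced.
    new_v = v * (1.0 - darken * s)
    # The brighter the pixel originally was, the more it is desaturated.
    new_s = s * (1.0 - highlight_desat * v)
    return colorsys.hsv_to_rgb(h, new_s, new_v)


# A fully saturated bright red gets both darker and less saturated,
# while a neutral grey (zero saturation) passes through unchanged.
print(subtractive_saturation((1.0, 0.0, 0.0)))
print(subtractive_saturation((0.5, 0.5, 0.5)))
```

Setting both parameters to small values keeps the effect subtle, which matches the caution in the post about it being easy to go too far.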

-

The China Syndrome (1979): What film camera did Michael Douglas' character use?

John Matthews reacted to SRV1981 for a topic

He’s the only man who doesn’t hate the sound of his own voice 😉

1 point

-

Actually, what am I blathering about?! The only time I need the EIS is for tracking the couple, outdoors, once, maybe twice. Doh. Absolute non-issue. I'll simply put it on a function button! I'd normally be tracking at '42mm' and it will be '58mm'. I'll just increase the distance between myself and the subject by a couple of feet et voilà. Move on, nothing to see here 🤪

1 point
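For anyone wanting to put a number on "a couple of feet", here is a minimal sketch. It assumes subject size in frame scales roughly with focal length divided by distance, and the 6 ft starting distance is purely hypothetical; only the '42mm' and '58mm' figures come from the post.

```python
def distance_for_same_framing(current_distance, old_focal_mm, new_focal_mm):
    """Distance that keeps the subject roughly the same size in frame.

    Assumes subject size scales with focal_length / distance, so the
    distance is scaled by the ratio of the two focal lengths.
    """
    return current_distance * new_focal_mm / old_focal_mm


# Hypothetical starting distance of 6 ft; focal lengths are from the post.
start_ft = 6.0
new_ft = distance_for_same_framing(start_ft, 42, 58)
print(f"Move from {start_ft:.1f} ft to about {new_ft:.1f} ft "
      f"(+{new_ft - start_ft:.1f} ft)")
```

At that assumed starting distance the extra step back is a little over two feet, so "a couple of feet" looks about right. The perspective will differ slightly from the wider shot, since the camera position has moved.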

-

Panasonic S5 II (What does Panasonic have up their sleeve?)

kye reacted to John Matthews for a topic

It's only "Standard" with a 1.09 crop or "High" with a 1.43 crop. There's no adjustment for the behavior, thank god. There are already so many things to adjust on this camera that trying to figure out the perfect stabilization to use on a given shot would be like trying to figure out which picture profile setting on a Sony camera works best: paralysis by analysis. I'm trying to simplify these days as it shouldn't be that hard... I might even go back to iMovie, seriously!

1 point

-

PicoMic is still smaller and lighter, and the newer Pro version can use a lav mic. The receiver is bigger, but it's also the storage/charging dock for the transmitters, which is handy when you're going from mic'd to not mic'd, changing speakers often, etc. They just need internal recording and they'd be perfect IMO.

1 point